Wikipedia:Wikipedia Signpost/Single/2010-03-01

Wikipedia Reference Desk quality analyzed

In an article recently published in the Journal of Documentation,[1] library researcher Pnina Shachaf analyzed the quality of answers at the Wikipedia Reference Desk. The Reference Desk is an open forum where visitors can ask questions about any topic not directly related to Wikipedia itself (the Help desk answers questions about the site), and anyone is welcome to answer. This paper is the first to study Wikipedia's Reference Desk. It found that Wikipedia volunteers performed as well as or better than traditional library reference services on most quality measures, providing a similar overall level of service.

Shachaf's study places its analysis of Wikipedia Reference Desk answers in the context of similar studies. The paper reviews the literature on other online question-and-answer boards, such as Yahoo! Answers, and briefly reviews classic studies of the effectiveness of traditional library reference services.[2] Shachaf notes that collaborative Q&A sites are a new model for reference. She also notes that research on their quality is still new and rarely takes account of findings from traditional reference research.

The study uses content analysis to evaluate Reference Desk answers on three measures: reliability ("a response that is accurate, complete, and verifiable"); responsiveness ("promptness of response"); and assurance ("a courteous signed response that uses information sources") (p. 982). These measures are adapted from SERVQUAL, a service-quality instrument that has been used extensively in other studies of library reference services. They also map onto the basic guidelines given to question-answerers on the Wikipedia Reference Desk (for instance, to sign responses).

The data sample was drawn from April 2007, when the Reference Desk received 2,095 questions (an average of 299 for each of the seven topical reference desks). The analysis covered 77 of these questions, with a total of 357 responses. Shachaf notes that "on average, the Wikipedia Reference Desk received 70 requests per day and users provided an average of 4.6 responses for each request" (p. 980). Shachaf first analyzed whether questions were asked and answered by "experienced" users (defined for the purposes of this study as editors with a user page) or "novice" users (those without one). She found that 85% of answers were provided by "experienced" users.
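The averages quoted in the paragraph above follow from simple division, and the short Python sketch below reproduces them from the reported figures. It assumes that the per-day rate is averaged over all 30 days of April, which the quotation does not spell out. It also shows that the 77-question sample itself averages about 4.6 responses per question.

```python
# Figures reported for April 2007 in the study, as summarized above.
QUESTIONS_APRIL_2007 = 2095
TOPICAL_DESKS = 7
DAYS_IN_APRIL = 30  # assumption: the per-day rate is averaged over the whole month
SAMPLE_QUESTIONS = 77
SAMPLE_RESPONSES = 357


def averages() -> tuple[int, int, float]:
    """Return (questions per desk, questions per day, responses per sampled question)."""
    per_desk = QUESTIONS_APRIL_2007 / TOPICAL_DESKS      # 299.28...
    per_day = QUESTIONS_APRIL_2007 / DAYS_IN_APRIL       # 69.83...
    per_question = SAMPLE_RESPONSES / SAMPLE_QUESTIONS   # 4.636...
    return round(per_desk), round(per_day), round(per_question, 1)


print(averages())  # (299, 70, 4.6)
```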

Among the questions analyzed, Shachaf found that most were answered quickly (on average, the first response arrived after four hours) and that answers were signed with Wikipedia usernames. She also found that 92% of the questions were given a partial or complete answer, with 63% answered completely. Of the factual questions whose accuracy the coders were able to determine, 55% of the answers were accurate and 26% were inaccurate; in 18% of the cases the Reference Desk reached no consensus. The 55% figure is comparable to studies of the accuracy of traditional one-on-one reference.[3]

The study also examined the sources cited in Reference Desk answers. In a sample of 210 interactions, Wikipedia articles were referred to in 93% of transactions and accounted for 44% of the references listed. Sources such as journals, databases, and books were very rarely used. This is a major difference from traditional library reference services, where librarians tend to use and cite sources, including traditional information sources such as journals and databases.

Shachaf compares these statistics with those of traditional library reference services. Overall, answers at the Wikipedia Reference Desk are comparable in accuracy to those of library reference services. Responses are, on average, posted more quickly than libraries reply to emailed questions. Questions are answered more completely than via library virtual reference services, and question askers send thank-yous at the same rate. The conclusion is that "The quality of answers on the Wikipedia Reference Desk is similar to that of traditional reference service. Wikipedia volunteers outperformed librarians or performed at the same level on most quality measures" (p. 989).

However, Shachaf cautions that these results hold only in the aggregate. She writes:

...while the amalgamated (group) answer on the Wikipedia Reference Desk was as good as a librarian's answer, an amateur did not answer at the same level as an expert librarian. Answering requests in this amateur manner creates a forest of mediocrity, and, at times, the "wisdom" of the crowd, not of individuals, reaches a higher level. For a user whose request received more than four answers, sorting out the best answer becomes a time consuming task. ... The quality of an individual message did not provide answers at the same level as individual librarians do, but an aggregated answer made it as accurate as a librarian's answer (pp. 988–989).

Shachaf offers several possible explanations for why the all-volunteer Wikipedia Reference Desk might work as well as library reference services:

- Experienced question-answerers may gain practice in answering reference questions, much as professional librarians do.

- The wiki itself may be more conducive to collaborative question-answering than most software used for library reference.

- The types of questions asked at Wikipedia may differ from those asked at libraries.

- The collaborative nature of the service, in which answers can be expanded, improved and discussed, may improve answer quality. According to Shachaf, this is the most likely explanation.

She concludes that more research is needed into online Q&A boards staffed by volunteers.

Notes

- ^ Shachaf, Pnina (2009). "The paradox of expertise: is the Wikipedia Reference Desk as good as your library?" Journal of Documentation, 65 (6), pp. 977–996. Not freely available online.

- ^ Traditional library reference is understood in this context as a questioner interacting one-on-one with a professionally trained librarian, either in-person at a library reference desk, or via email/chat/phone.

- ^ Note that it is quite difficult to determine accuracy for most reference transactions, since answers may be partially accurate or have qualitative situational differences; 55% accuracy is a standard estimate based on studies of in-person reference desk interactions in the 1980s (citation to Hernon and McClure (1986) and later analyses given by Shachaf).

Usability, 15M articles, Vandalism research award, and more

Usability Project to become permanent

The Usability Project, initially funded by a grant from the Stanton Foundation, will be extended indefinitely as a "user experience" (UX) program. The current round of usability work is still in progress, including a much-anticipated option to collapse confusing template markup while editing. At the end of the grant period, Naoko Komura and Trevor Parscal will stay on as permanent Wikimedia staff. In the announcement on the Wikimedia blog, Erik Moeller said the move was prompted by the Wikimedia Foundation's recent fundraising success.

Global Wikipedias reach 15M articles

The article count across all Wikipedias has passed 15 million. This follows the Russian Wikipedia's recent milestone of half a million articles.

Yahoo Research sponsors investigation into plagiarism and Wikipedia vandalism

The PAN 2010 lab has published a call for participation in a project to detect online plagiarism and Wikipedia vandalism. The winning entry will receive an award of 500 euros. The lab will be held in September as part of a conference in Padua, Italy.

Briefly

- A new Wikimedia scorecard with statistics about visitors and traffic has been posted.

- The WikiSym call for papers closes on 7 March.

- A call for proposals for the Wikimedia track at the Open Knowledge conference is now open.

- Right-to-left support is now enabled for the mobile Wikipedia interface.

This week in history

- 2005: Wikipedia has a power outage

- 2006: Trouble with office actions

- 2007: Daniel Brandt wheel war

- 2008: Brockhaus announces last print edition

- 2009: Books extension enabled

Idealizing the Star-Spangled Banner, Curious Announcement, and more

American bias?

Kevin Myers wrote an opinion column in the Irish Independent on 25 February that tangentially accused Wikipedia's contributors of idealizing America's fortunes at the time "The Star-Spangled Banner" was penned. Myers contends that the US essentially lost the War of 1812 to Canada. He also contends that "Wikipedia's US monitors" instantly correct "every attempt by Canadians to put it into the historical record." He provides no supporting evidence. He was likely referring to a dispute at the War of 1812 article and an ensuing Mediation Cabal case, closed in November 2009. Your reporter found no evidence that opinions in the dispute strictly reflected the nationality of the editors (or, for that matter, of historians).

Curious Announcement

BBC Radio 4's comedy series The Museum of Curiosity announced its new co-host (or "curator") via Wikipedia on 26 February. Dan Schreiber, the show's co-creator and co-producer, tweeted that the name could be found on the newcomer's Wikipedia page and offered a prize to whoever discovered it. The edit in question was subsequently found; it named Jon Richardson.

Briefly

- On 8 February, New York Times Upfront, a publication of Scholastic Corporation, featured an article by John Schwartz, "Free Speech vs. Privacy". The article covers the conflict between different countries' free speech and privacy laws as they relate to content in Wikipedia articles. (See Signpost coverage of an earlier NYT article by Schwartz on the same legal case.)

- The Wall Street Journal Classroom Edition, for students, published a January cover article, "What's Wrong with Wikipedia?", documenting Wikipedia's sustainability challenges. It cited Felipe Ortega's 2009 research. (See extensive Signpost coverage on 23 November, 30 November, and 7 December 2009.)

WikiProject Severe Weather

WikiProject Severe Weather was founded on May 7, 2007, during a period of active severe weather in the United States (see May 2007 tornado outbreak). The project's core article is Severe weather. Early members included User:Runningonbrains, User:CrazyC83, User:WxGopher, and User:Evolauxia. The WikiProject was highly active until 2009. That year, an unusually slow severe weather season most likely contributed to a decline in activity. Despite the low level of activity, I managed to find a couple of editors for this interview.

1. First, tell us a bit about yourself and your involvement in WikiProject Severe Weather.

- I first became involved in 2005–06, after spinning off in general weather. CrazyC83 (talk) 18:06, 24 February 2010 (UTC)

- I added myself to the project due to involvement in the general meteorology articles which overlapped the project. Thegreatdr (talk) 23:32, 26 February 2010 (UTC)

2. When did you first join WikiProject Severe Weather? What are some of the challenges that the project has met since you joined, and how were they dealt with?

- I developed it before the project. The challenges are keeping everything up to date and accurate given the high number of updates needed especially during peak season, and keeping up with major events. CrazyC83 (talk) 18:06, 24 February 2010 (UTC)

- Soon after it started. The tornado and severe weather articles are those I've been most involved with in regards to collaboration with others. The severe weather article editing/improvement has been at a near standstill since last fall, due to a lack of agreement in the overall article structure and which sections may need to be removed. Therefore, my experience is mixed. Thegreatdr (talk) 23:32, 26 February 2010 (UTC)

3. What aspects of the project do you consider to be particularly successful? Has the project developed any unusual innovations, or uniquely adopted any common approaches?

- Probably the biggest innovation was profiling EVERY isolated event in the US. I started that in January 2009. CrazyC83 (talk) 18:06, 24 February 2010 (UTC)

- The improvement of individual non-tropical storm-related articles is a success of this project and the non-tropical storms project. Before this project started, it was left up to me and one or two others to create these articles. Now that the two projects have gotten involved in individual storm-related articles, it frees me to take a back seat and improve the meteorology-related articles. Thegreatdr (talk) 23:32, 26 February 2010 (UTC)

4. Have any major initiatives by the project ended unsuccessfully? What lessons have you learned from them?

- Not that I am aware of. CrazyC83 (talk) 18:06, 24 February 2010 (UTC)

- The severe weather article, by far. The article collaboration improvement drive failed with that one. Since there's no apparent resolution, there is nothing to learn yet from that failure. Thegreatdr (talk) 23:32, 26 February 2010 (UTC)

5. What experiences have you had with the WikiProjects whose scopes overlap with yours, in particular the Tropical Cyclone, Meteorology, and Non-Tropical Storms WikiProjects? Has your project developed particularly close relationships with any other WikiProjects?

- I work with WP:TROP as well, but don't do a lot elsewhere. CrazyC83 (talk) 18:06, 24 February 2010 (UTC)

- I've done much work with the tropical and met projects, mainly to the meteorology-centered articles. I have contributed to more individual hurricane/tropical cyclone articles than severe articles due to my background. Thegreatdr (talk) 23:32, 26 February 2010 (UTC)

6. What is WikiProject Severe Weather's plan of attack for this year's northern hemisphere severe weather season?

- Keep doing what has been done lately, and build from 2009. If changes are needed, we will know in the spring when things pick up. International activity needs more sources to build on, as the lack of reliable sources has kept it from becoming global. CrazyC83 (talk) 18:06, 24 February 2010 (UTC)

- I have no idea. If it were up to me, improving the existing articles to at least GA class would be primary before starting on new articles. There's a tendency in all of the met-related projects to leave existing articles alone and rush to create new ones. If the severe weather project did the reverse, imagine the quality that would be present in the existing articles. Thegreatdr (talk) 23:32, 26 February 2010 (UTC)

7. What is your vision for the project? How do you see the project itself, as well as the articles within its scope, developing over the coming seasons?

- I see this becoming global, but we need more resources from elsewhere in the world. CrazyC83 (talk) 18:06, 24 February 2010 (UTC)

- If the quality of the articles within the project improves, it will attract more editors all by itself. Success begets success. Thegreatdr (talk) 23:32, 26 February 2010 (UTC)

8. In connection with the question above, several of the editors on the WikiProject's member list seem to have gone inactive, and only four editors have signed themselves as active in the project since the beginning of 2009. Is the WikiProject running low on active members, and if so, is there anything you envision that could be done to help capture more active contributors?

- Well, the inactive 2009 season may have slowed things down, but we still get plenty of contributions from non-members. CrazyC83 (talk) 18:06, 24 February 2010 (UTC)

- This is true in all the met-related projects. A year or two ago, I would say a problem we had in the TC project was inadvertently scaring away new editors due to a fundamental lack of Wikipedia-related knowledge on the part of new editors. As long as the severe weather project is welcoming in regards to new editors, and helpful towards steering them to the proper guidelines, it will grow naturally/organically, like the TC project did in 2006 and 2007. Thegreatdr (talk) 23:32, 26 February 2010 (UTC)

You'll want to grab a cup of coffee for next week's report. Until then, feel free to sit back, relax, and catch up on the previous editions of WikiProject Report in the archive.

Approved this week

Administrators

One editor was granted admin status via the Requests for Adminship process this week: The Wordsmith (nom).

Featured pages

Nine articles were promoted to featured status this week: City of Blinding Lights (nom), Star Trek III: The Search for Spock (nom), Cedric Howell (nom), Film noir (nom), Antonin Scalia (nom), Banksia sphaerocarpa (nom), Gray Mouse Lemur (nom), Tchaikovsky and the Belyayev circle (nom) and Death of Ian Tomlinson (nom).

Twelve lists were promoted to featured status this week: Laudian Professor of Arabic (nom), Grammy Award for Best Traditional Pop Vocal Album (nom), List of Sacramento Regional Transit light rail stations (nom), List of Memorial Cup champions (nom), List of Texas Longhorns head football coaches (nom), List of Braathens destinations (nom), Mayor of Jersey City (nom), List of Washington state symbols (nom), List of Bristol Rovers F.C. players (nom), List of battlecruisers of Germany (nom), List of international cricket centuries by Brian Lara (nom) and Timeline of the 2009 Atlantic hurricane season (nom).

No topics were promoted to featured status this week.

No portals were promoted to featured status this week.

The following featured articles were displayed on the Main Page as Today's featured article this week: Nikita Khrushchev, Pauline Fowler, Huntington's disease, Smallville (Season 1), Mendip Hills and Callisto.

Former featured pages

Two articles were delisted this week: Characters of Kingdom Hearts (nom) and Imperial Japanese Navy (nom).

No lists were delisted this week.

No topics were delisted this week.

No portals were delisted this week.

Featured media

The following featured pictures were displayed on the Main Page as picture of the day this week: Sketch of John Quincy Adams on his deathbed, Asci of a Morchella elata morel mushroom, Enrico Caruso, Ernst Haeckel's "Tree of Life", Queen Elizabeth II Bridge, Emerald cockroach wasp and Quartz crystal.

No featured sounds were promoted this week.

No featured pictures were demoted this week.

Eighteen pictures were promoted to featured status this week.

-

A man in Kabul selling the ingredients for goat Siri paya

-

Dakota Blue Richards as April Johnson in the 2008 film Dustbin Baby

-

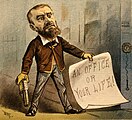

1881 political cartoon of Charles J. Guiteau

Arbitration Report

The Arbitration Committee neither closed nor opened any cases this week, leaving three cases open.

Open cases

- Asgardian (Week 2): An arbitration case opened to assess the behavior of Asgardian, including accusations of gaming the system to avoid community-based remedies and attempts at ownership of articles. Currently in the evidence/workshop phase.

- ChildofMidnight (Week 2): An arbitration case opened to assess the behavior of User:ChildofMidnight, including allegations that he has defamed editors and contributed to problems in highly controversial areas. Currently in the evidence/workshop phase.

- Transcendental Meditation movement (Week 2): An arbitration case opened to address problems on articles related to the movement. These problems resemble those that had surrounded Scientology articles the previous year. Currently in the evidence/workshop phase.

MediaWiki 1.16 approaching, Wikimedia Mobile, new MediaWiki video player progressing, user-agent madness

MediaWiki 1.16 approaching

There was a time when Wikipedia always ran the latest and greatest version of the MediaWiki software. Over the last year, however, this proved unmanageable. Wikipedia switched to a stable version, plus the fixes required to keep the websites running and, of course, the new work of the Usability Project. For the past few months Tim Starling has been working hard to review all the latest MediaWiki code, in order to release MediaWiki 1.16 and deploy it to all the Wikimedia projects. Last week Tim announced that he had created a 1.16 branch. This means that Tim has almost caught up, and that new features developed from this point on will not make it into the next version of the software; only bug fixes will be allowed. The new version of MediaWiki should be deployed in the next few weeks. (Wikitech-l mailing list announcement)

Wikimedia Mobile

The mobile version of the Wikipedia websites is slowly progressing. In recent weeks, support was added for the language variants of Serbian and Chinese, and languages written right-to-left are finally properly supported.[1] The translatewiki.net community has been hard at work translating the software into various languages, and it is now almost fully translated into 69 languages.[2] If you want to help with your language, read the instructions on Meta.

New MediaWiki video player is progressing

In a blog post, Wikipedia editor TheDJ (who is also this week's Technology report author) writes about his experience using the new HTML5 video player that has been in development. The video player has recently gained fullscreen support, and allows subtitles to be added to videos. The work is donated by the company Kaltura, which is lending its developer Michael Dale to the project. The player can easily be tested with this test video, or by enabling the mwEmbed Gadget in your preferences.

- Initial view of the player

- Selection menu of subtitles

- Subtitles beneath the video

User-agent madness

On 16 February, Domas Mituzas, one of Wikimedia's primary volunteer system administrators, announced that from then on all software accessing the Wikimedia websites would be required to provide a user-agent. The change was made without advance notice and initially broke some software. Breakages of Google's Translator Toolkit and Apple's Dictionary application were noted and quickly resolved. The change drew many responses from people who did not want their browsers identified when browsing the websites. However, the consensus among the system administrators was that the sacrifice was worth the benefits for a website maintained by volunteers and funded by donations.

The change was prompted by the enormous amount of resources being consumed by automated tools whose sole purpose was to use Wikimedia information for spam. The hope is that requiring everyone to send a user-agent will make it easier to identify users with good intentions. The system administrators intend to be tougher on problematic website usage in the future, and being able to identify well-intentioned users should keep them from becoming unintended victims. The block had an immediate effect on resource usage, as can be seen in last month's graphs (search servers, application servers).
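For authors of tools, complying with the requirement above means sending a descriptive User-Agent header with every request. The Python sketch below shows one way to do that with the standard library's urllib.request. Several details are assumptions for illustration only: the tool name, URL and e-mail address are hypothetical placeholders, and the "name/version (contact)" format is not prescribed in this announcement. Building the request does not send it; nothing is fetched until it is passed to urllib.request.urlopen.

```python
import urllib.request

# Hypothetical identification string: tool name, version, and a way to reach
# the operator. All three values are placeholders, not Wikimedia-issued values.
USER_AGENT = "ExampleSignpostTool/0.1 (https://example.org/tool; operator@example.org)"


def build_request(url: str, user_agent: str = USER_AGENT) -> urllib.request.Request:
    """Return a GET request that identifies the client through its User-Agent header."""
    if not user_agent.strip():
        # An empty agent string would defeat the purpose of identifying the client.
        raise ValueError("a non-empty User-Agent is required")
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


req = build_request("https://en.wikipedia.org/wiki/Main_Page")
# urllib normalizes header names with str.capitalize(), hence "User-agent".
print(req.get_header("User-agent"))
```

Without an explicit header, urllib sends a generic "Python-urllib/3.x" agent, which says nothing about who operates the tool. A descriptive string with contact details is what lets administrators tell well-intentioned tools apart from abusive ones.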

Bots approved

The following bots and tasks have been approved in the past three weeks:

- FaleBot – Interwiki bot

- EleferenBot – Interwiki bot

- FrescoBot (Task 2) – Corrects common errors in writing of external, internal and wikilinks

- TedderBot (Task 2) – Changes the names of templates in transclusions

- Yobot (Task 12) – Adds references to "Name and History" sections of articles about Ohio townships
