Wikipedia talk:Modelling Wikipedia's growth/Archive 3

| This is an archive of past discussions on Wikipedia:Modelling Wikipedia's growth. Do not edit the contents of this page. If you wish to start a new discussion or revive an old one, please do so on the current talk page. |

| Archive 1 | Archive 2 | Archive 3 | Archive 4 |

Logistic growth model

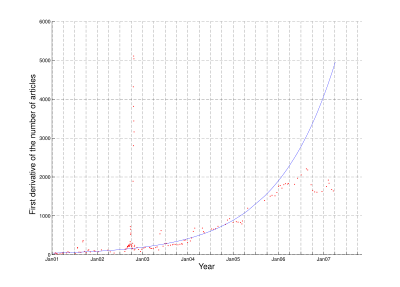

Just after en.wikipedia reached 1 million articles I predicted here that the number of articles would level off at a maximum, following a cumulative Gaussian (or logistic function). Less than a year later, the peak of article growth has now probably been reached. The 2nd derivative is now convincingly below the x-axis, which indicates that the peak of article growth has been reached.

My model, which has now held for about a year and has proven to be quite predictive:

- The peak of article growth has now (end of February 2007) probably been reached, at about 60,000 new articles a month.

- The maximum of articles on en.wikipedia will be about 4 million articles.

- This maximum will be reached in 2013.

Could anyone double-check my model? HenkvD 20:34, 28 February 2007 (UTC)

- I find your model interesting, and perhaps more believable from a real-world perspective than the purely exponential models proposed hitherto. I'm not really a mathematician though, so I wouldn't presume to be able to verify it. Torgo 06:39, 16 March 2007 (UTC)

It should be noted that the cumulative Gaussian function is NOT the same as the logistic function, although there is certainly a similarity in gross visual appearance of the two graphs. Michael Hardy 19:59, 6 April 2007 (UTC)
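The difference between the two curves can be checked numerically. The following is a minimal sketch, not part of the original comment: the scale factor 1.702 is an assumption brought in here (a commonly used constant for matching a logistic curve to the standard normal CDF), and the function names are illustrative.

```python
import math

def gaussian_cdf(x):
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def logistic_cdf(x, scale=1.702):
    """Logistic function; scale=1.702 is an assumed matching constant."""
    return 1.0 / (1.0 + math.exp(-scale * x))

# Largest vertical gap between the two curves on a grid from -8 to 8.
xs = [i / 100.0 for i in range(-800, 801)]
max_gap = max(abs(gaussian_cdf(x) - logistic_cdf(x)) for x in xs)

# How much heavier the logistic upper tail is at x = 5.
tail_ratio = (1.0 - logistic_cdf(5.0)) / (1.0 - gaussian_cdf(5.0))

print(f"max gap: {max_gap:.4f}, tail ratio at x=5: {tail_ratio:.0f}")
```

The two curves stay close in the middle, which explains the visual similarity, but the logistic tails decay far more slowly than the Gaussian ones. That matters when extrapolating to a final article count.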

- I use the cumulative Gaussian function, but maybe the logistic function would be more appropriate. HenkvD 21:13, 6 April 2007 (UTC)

- The only way to know which model we should use is to find a logistic curve that fits the data points. I tried to do a least-squares fit (the ones on logistic function) but I could not get a nice result. I also doubt that the Gaussian CDF is a good model for that logistic. Diego Torquemada 14:01, 7 April 2007 (UTC)
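One possible way to attempt such a least-squares fit is sketched below. This is not the method used in the comment above: it assumes a three-parameter logistic with ceiling `K`, rate `r` and inflection time `t0` (names introduced here), searches a grid of candidate ceilings, and for each one fits the other two parameters by ordinary linear regression on the logit-transformed counts. The data are synthetic and noise-free, not real Wikipedia counts.

```python
import math

def logistic(t, K, r, t0):
    """Logistic curve with ceiling K, rate r and inflection time t0."""
    return K / (1.0 + math.exp(-r * (t - t0)))

def fit_logistic(ts, ns, K_candidates):
    """Grid-search the ceiling K; for each K fit r and t0 by linear least squares.

    For a fixed K, ln(N / (K - N)) = r * (t - t0) is linear in t.
    The K with the smallest squared error on the original scale wins.
    """
    best = None
    for K in K_candidates:
        if K <= max(ns):
            continue  # the ceiling must lie above every observation
        ys = [math.log(n / (K - n)) for n in ns]
        m = len(ts)
        t_mean = sum(ts) / m
        y_mean = sum(ys) / m
        sxx = sum((t - t_mean) ** 2 for t in ts)
        sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, ys))
        r = sxy / sxx
        t0 = t_mean - y_mean / r  # where the fitted line crosses zero
        sse = sum((logistic(t, K, r, t0) - n) ** 2 for t, n in zip(ts, ns))
        if best is None or sse < best[0]:
            best = (sse, K, r, t0)
    return best[1:]

# Synthetic monthly samples (hypothetical parameters, NOT real data).
true_K, true_r, true_t0 = 4_000_000.0, 0.08, 74.0
ts = list(range(0, 75))
ns = [logistic(t, true_K, true_r, true_t0) for t in ts]

K_fit, r_fit, t0_fit = fit_logistic(ts, ns, [k * 100_000.0 for k in range(10, 81)])
print(K_fit, r_fit, t0_fit)
```

With real, noisy counts that stop near the inflection point, many ceilings fit almost equally well, which may be one reason a "nice result" is hard to get.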

Henk,

Your model is based on the assumption that the growth rate follows a Gaussian curve. I doubt whether that is true. Maybe in the very long run, but I think the history of Wikipedia is too short yet to draw such a conclusion.

What we know is the total number of articles in history. Plotting that on a logarithmic scale gives a more or less straight line. Drawing any conclusions from the first derivative, i.e. growth per time unit, (or even the second) makes the model very sensitive to any noise in the basic measurement. Noise that could be introduced by a variety of factors such as season, media attention or even other new phenomena on the internet.
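The noise sensitivity of derivatives can be made concrete. The sketch below assumes the measurement noise is independent from month to month with equal variance, which is a simplification introduced here. Under that assumption, each round of differencing multiplies the noise variance by the sum of the squared differencing coefficients. The helper names are illustrative.

```python
def difference(values):
    """First difference of a series, e.g. new articles per month from totals."""
    return [b - a for a, b in zip(values, values[1:])]

def noise_gain(order):
    """Variance multiplier for independent, equal-variance noise
    after `order` rounds of differencing."""
    kernel = [1]
    for _ in range(order):
        # Differencing the kernel: (1) -> (1, -1) -> (1, -2, 1) -> ...
        kernel = [b - a for a, b in zip([0] + kernel, kernel + [0])]
    return sum(c * c for c in kernel)

for order in range(3):
    print(order, noise_gain(order))
```

Under that assumption the growth curve carries twice the noise variance of the raw counts and the second derivative six times, while the underlying signal gets smaller with each difference.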

The exponential growth can be understood because of the following:

- Growth of contributors and hence the growing variety of interests / subjects. But probably more important:

- A shift of the threshold of what is considered encyclopedic. That for sure is moving downward, resulting in more and more articles. It will be hard to quantify this kind of effect.

But there are other statistical phenomena for which there is no good explanation. Compare the growth of some of the major languages (see graph). Why is de: not growing so fast (quality awareness??) and why is fr: growing faster than anyone else? For the dip in nl:, I have an explanation: the bots generating new articles for e.g. communities in foreign countries were slowed down when approaching the 250,000th article.

A few other observations for which I don't have an explanation yet:

- There seems no good correlation between the number of articles and the size of the population speaking that language.

- Let's start with nl:. Roughly 22 million people speaking Dutch and 280k articles. So a ratio of 80 : 1

- But for de: 100 million German speaking people (DE, CH and AT) and 460k articles gives a ratio of 220:1

- The French: 70+ million (FR and CA, I don't think the old colonies of FR should be added) and 460k gives 150:1

- The English is of course more difficult. Let's take 400 million (US and UK plus 60M for other?? and leaving out IN) and 1.7M articles gives a ratio of 240 : 1

The ratios differ by a factor of 3, for which I only have cultural differences as a possible explanation.

- Agreed. For example, Spanish is the second most important language in the world (380 million speakers), but the Spanish Wikipedia does not have as many articles because people in Latin America do not have as much education as Europeans, and they also do not have the same ease of access to the internet. Diego Torquemada 14:01, 7 April 2007 (UTC)
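The speaker-to-article ratios can be recomputed from the figures quoted in the list above. The sketch below uses only those quoted numbers (70 million is taken as the lower bound of "70+ million" for French), and the dictionary layout is just for illustration.

```python
# (speakers, articles) as quoted in the list above
figures = {
    "nl": (22_000_000, 280_000),
    "de": (100_000_000, 460_000),
    "fr": (70_000_000, 460_000),
    "en": (400_000_000, 1_700_000),
}

# Speakers per article for each language edition.
ratios = {lang: speakers / articles for lang, (speakers, articles) in figures.items()}

# Spread between the largest and smallest ratio.
spread = max(ratios.values()) / min(ratios.values())

for lang, ratio in ratios.items():
    print(f"{lang}: {ratio:.0f} speakers per article")
print(f"spread: {spread:.2f}")
```

The unrounded values are close to the rounded ratios given in the list, and the spread between en: and nl: comes out at roughly 3.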

- One would expect that there is some correlation between the size of the Wikipedia and the number of edits per day. Many new articles would require more edits to become mature.

That also turns out not to be true. See tables in [1] for basic numbers (article is about an entirely different subject!). For nl: 1 edit per article every 18 days, for en: 1 edit per article every 7-8 days.

A correlation of the number of edits with the number of native speakers of a language cannot be found either.

My conclusion is therefore that we are not yet able to fully understand the statistics, but the other languages can learn one thing from en:: there is ample room for growth for the time being. - Rgds RonaldB-nl 00:20, 7 April 2007 (UTC)

- Ronald, you mention:

- "Your model is based on the assumption that the growth rate follows a Gaussian curve. I doubt whether that is true." The orange lines are the actual values, irrespective of the model. The grey lines are the model of the Gaussian curve. Especially in the 2nd derivative, but also in the growth graph, it can be seen that the actual values fit the model quite well.

- "Look at my nice graph" is no statistical argument. You can fit any formula to given data, but the question is how good your fit is. You need statistical hypothesis testing and a confidence interval to answer this. -- Nichtich 18:44, 10 April 2007 (UTC)

- "What we know is the total number of articles in history. Plotting that on a logarithmic scale gives a more or less straight line." See Image:EnwikipediaExpOrLogistic.PNG or Image:Wikigrow log.svg. This shows that it is not really a straight line anymore.

- This can also be proven but in this case it's obvious. Welcome to sub-exponential growth en:, hope to see continuing growth in quality! -- Nichtich 18:44, 10 April 2007 (UTC)

- "A shift of the threshold of what is considered encyclopedic. That for sure is moving downward, resulting in more and more articles. It will be hard to quantify this kind of effect." Despite the shift of the threshold, the growth seems to be at a maximum; at least that is what I see in the growth curve. Because there are so many contributors with many areas of expertise contributing over a long time, the data gives a unique opportunity to predict future developments. HenkvD 10:03, 7 April 2007 (UTC)

- Well, you can guess, but predicting? No. Growth is affected by so many factors (bandwidth, software, press coverage, community guidelines etc.). This is still a research question, but drawing diagrams will not be enough to answer it. -- Nichtich 18:44, 10 April 2007 (UTC)

Sorry for my English. I think using the logistic in place of the exponential is a good idea, because the exponential says "always more, without end" and the logistic says "in the future it will be 'enough is enough'". But my personal opinion of "prediction" in a changing environment is very negative, independent of the choice of model. Tomi 20:36, 1 May 2007 (UTC)

We will see :-)

The same in better German (translated here into English): I believe the logistic curve is preferable to the exponential one, because in the exponential model growth never ends (which in my opinion is not credible). The logistic model, however, assumes that (for whatever known or unknown reasons) growth will slow down until it comes to a standstill, which I find quite credible. Apart from that, I personally do not think much of forecasts/projections in a constantly changing environment. Tomi 20:36, 1 May 2007 (UTC)

Agree not exponential anymore

I agree that the growth of Wikipedia is not exponential anymore... sometime around August 2006 was the maximal growth rate of Wikipedia (and also the inflection point)... The graph of the first derivative reveals that the growth may follow some logistic trend... it also seems that article 2,000,000 will be reached at the end of this year.

The regression curve has a=0.00208684458094 and b=9.91167349817703. This regression was calculated using data from Jan 2003 to Dec 2005. Diego Torquemada 05:51, 7 April 2007 (UTC)

I had seen this talk here. It didn't seem to be improving the article so I decided to be bold and make a change to the article. It is just a suggestion of course (as are the black explanations on the graph). What do you think? crandles 12:52, 14 April 2007 (UTC)

Later Logistic growth model

Article has had a new graph and

This curve is asymptotic to 2,526,451, implying that the growth in the number of articles will become negligible once the article count passes the 2.5m mark. The best-fit line and the data have an R-squared value of over 0.999 (for values after Jan 2003). The curve hits 2 million articles around 11th/12th October 2007.

The logistic growth model is based on:

- more content leads to more traffic, which in turn leads to more new content

- however, more content also leads to less potential content, and hence less new content

- The limit is the combined expertise of the possible participants.
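In symbols, the three points above correspond to the standard logistic equation. The notation is introduced here for illustration and does not appear in the article text: $N(t)$ is the article count, $r$ the growth rate while content is scarce, $K$ the limit, and $t_0$ the time of fastest growth.

```latex
\frac{dN}{dt} = r\,N\left(1 - \frac{N}{K}\right),
\qquad
N(t) = \frac{K}{1 + e^{-r\,(t - t_0)}}
```

The factor $rN$ expresses "more content leads to more new content", and the factor $(1 - N/K)$ expresses "more content leaves less potential content". Growth is fastest at $N = K/2$, which for the quoted limit $K = 2{,}526{,}451$ is about 1.26 million articles.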

This is a lot more reasonable than talking about a size of 0 by 2013. However, is it reasonable to suggest there is a limit to 'the combined expertise of the possible participants' in this way? When 2,525,000 articles is reached, does this mean there are no new events warranting Wikipedia articles? Maybe not; it could mean that there are articles that were once considered notable but are now no longer notable and deleted, and these are identified at the same rate as new articles are needed. That is possible, but it doesn't seem likely to me. More likely is continued growth at a rate which reduces towards some positive rate. This would occur as we approach the combined expertise of the possible participants about events earlier than say 2000, but new events would continue to cause growth. (Well, either that or a sudden collapse of Wikipedia as people come to their senses and realise that what they are doing is just wasting their time seems more likely to me.) crandles 14:27, 5 August 2007 (UTC)

The other thing I wanted to say is, Is this in the right place? Should we put all the models of number of pages in the top section? Perhaps also a tidy up by archiving old modelling attempts and predictions? crandles 14:28, 5 August 2007 (UTC)

- Feel free to tidy up the page. I agree that in the future persons, places, etc. that are in the news will probably continue to generate new articles. If that would be 1000 articles a week, 50,000 a year, it would be a small growth of 2% a year. The important issue is that in the future the growth will most likely be much smaller than currently. The logistic model seems the best model for the moment. HenkvD 21:33, 5 August 2007 (UTC)

- The important thing to remember is that this is just a model. My gut feeling is that there is a limit to the number of articles because there is a limit to the combined knowledge of potential contributors. Further growth will come about from either new topics, or increased expertise from the general internet populace. Also, this article focuses almost entirely on the number of articles. It would be interesting to model database size (and hence average article size), which should continue to grow long after article count growth becomes negligible. Tompw (talk) (review) 20:48, 6 August 2007 (UTC)

- No, I don't think it's a knowledge limit per se; we're still seeing a lot of intra-article growth, just not so many new articles. I think the reason we're seeing decay in the number of new articles is that the old articles take up volume in the knowledge space. I mean, there's a finite amount of human knowledge which the human race in total has, and of that, there's a subset that we may call 'wikipedia encyclopedic' (according to whatever definition we decide to use). You can imagine the knowledge space as a circular area; some people know a very large amount about a very small region of this, others know a small amount about a large region, etc. Really, it's a Venn diagram.- (User) WolfKeeper (Talk) 18:43, 2 February 2008 (UTC)

- The thing is, when people create an article on a particular 'topic' they take up an oddly shaped region in the 'wikipedia knowledge space' Venn diagram. Some are very big regions, others are very small. And there are frequently big overlaps, and an article can be entirely within the scope of another (for example turbojet is a subset of jet engine). But as knowledge gets added to Wikipedia, it can either go in one (or more) of the existing articles or it can force the creation of a new article, because no extant article that the editor can find covers it; that's the primary driver of article creation. But the number of articles will saturate: the topics they cover will 'fairly soon' tend to cover all of knowledge space (although the actual content won't cover everything within that space, ever), and so creation slows, which is what we are seeing right now. And it's only when you spot a gap in between the existing articles' topics that a new article can be made, but that gets rarer and rarer. So you would expect a decay over time in the rate of appearance of new articles.- (User) WolfKeeper (Talk) 18:39, 2 February 2008 (UTC)

- There is a second effect I've noticed though- splitting. As articles fill with knowledge, they eventually tend to become unwieldy; and subset, smaller scoped, articles split off, so that's going to help the article growth carry on, and I think that may violate some of the assumptions of logistic or exponential decay curves- the evidence is that article growth is fairly constant right now, and that will eventually start to hit the limits of about 100K and impact the article creation again- but that's a much slower effect I think. I think that too eventually slows though, the article itself is a knowledge space, and eventually people will find it harder to add something that isn't already there in the article or a related article.- (User) WolfKeeper (Talk) 18:39, 2 February 2008 (UTC)

Red link statistics

I've heard some people say that growth is slowing because we've run out of articles to write. Is the number of red links decreasing, either on an absolute or relative basis? Andjam (talk) 00:00, 17 December 2007 (UTC)

- Here User:Piotrus/Wikipedia interwiki and specialized knowledge test is a ballpark calculation of Wikipedia's potential: 400 million articles. Pavel Vozenilek (talk) 19:19, 24 December 2007 (UTC)

- I think that most of these 400 million articles will probably not be judged encyclopedic and will get deleted. I think that we're deliberately/implicitly limiting ourselves to reasonably general topics by being 'encyclopedic'. Most of those 400 million articles would presumably be about 200 times less general in importance, and hence are likely to fail to stick.- (User) WolfKeeper (Talk) 12:30, 1 February 2008 (UTC)

- I think that would depend mightily upon what you would adjudicate as 'encyclopedic.' - Arcayne (cast a spell) 06:28, 2 February 2008 (UTC)

The 400m estimate was based on considering which topics are considered encyclopedic (if fringe). Do note I am still periodically updating that essay, monitoring trends.--Piotr Konieczny aka Prokonsul Piotrus| talk 21:56, 28 September 2008 (UTC)

TLC needed

This page seems to be in need of some attention. The logistic model stuff is very nice, but the rest appears to be old unmaintained speculation and is, quite frankly, something of an embarrassment. I've tried to clean up the worst of it, and have created two up-to-date graphs of article growth data, but there is more to be done. Geometry guy 18:53, 2 February 2008 (UTC)

Retrofit year topic subheaders

15-Feb-2008: To help keep old issues in perspective, I have retrofit year headers (such as "Topics from 2003"), as in other talk-pages, to avoid rehashing very old issues, while also not archiving the important old decisions. Replies can still be added anywhere, with the understanding that some topics were settled years ago. Topics had already been entered in date order, so no topics were moved, but some blank lines were omitted. -Wikid77 (talk) 13:49, 15 February 2008 (UTC)

Banning Anonymous IPs from creating new pages

Have you considered the effect of banning Anonymous IPs from creating new pages in January 2006 in the model? -Halo (talk) 18:24, 3 April 2008 (UTC)

- Actually, has anyone looked at the actual size of Wikipedia? Number of articles would not necessarily correlate to size. Some articles get deleted, some grow larger from editing, and then there are some new ... Is there a way to get a graph or raw data of the hard drive space needed to support one single copy of Wikipedia since its beginning? There are also unfinished red links. Are these included in the stats as links? If they are counted as an article then this could affect the correlation. Articles consuming minimal space (and minimal time) are assumed to have the same average "time value" as those which consume many MBs when a noncontinuous variable such as a count is used. Also, as the expansion of the website grows, the number of new articles should decrease/increase as it approaches the rate we are discovering/recording information from research today. This would not be a zero rate. Andrew Soehnlen (talk) 04:01, 10 August 2008 (UTC)

Growth rate slowing because of editor behavior towards new editors and articles?

The Economist magazine surmised the following reason why Wikipedia's growth rate has slowed:

- [N]ovices can quickly get lost in Wikipedia's Kafkaesque bureaucracy. According to one estimate from 2006, entries about governance and editorial policies are one of the fastest-growing areas of the site and represent around one-quarter of its content...The proliferation of rules, and the fact that select Wikipedians have learnt how to handle them to win arguments, now represents a danger...inclusionists worry that this deters people from contributing to Wikipedia, and that the welcoming environment of Wikipedia's early days is giving way to hostility and infighting.

- There is already some evidence that the growth rate of Wikipedia's article-base is slowing. Unofficial data from October 2007 suggests that users' activity on the site is falling, when measured by the number of times an article is edited and the number of edits per month. The official figures have not been gathered and made public for almost a year, perhaps because they reveal some unpleasant truths about Wikipedia's health. --The battle for Wikipedia's soul The Economist March 6th 2008:

Have there been any academic studies explaining this slowing rate of growth? travb (talk) 21:30, 27 December 2008 (UTC)

Added user page

I added this user page: User:Dragons flight/Log analysis to the see also section. travb (talk) 21:39, 27 December 2008 (UTC)
