User:Wvbailey/Propositional formula

Informal development: propositional formulas

[edit]| Arithmetic concept | Arithmetic symbol | Propositional (sentential-logic) connective | Logic symbols | Alternate logic symbols | Venn diagram concept | Example: Boolean arithmetic | Example: Propositional evaluations |

| sum | + | OR | V | disjunction | (0+0) = 0, (0+1) = 1, (1+0) = 0, (1+1) = 1 | (0 V 0) = 0, (0 V 1) = 1, (1 V 0) = 1, (1 V 1) = 1 | |

| product | ∙ | AND | ⋀ | & | conjunction | (0∙0) = 0, (0∙1) = 0, (1∙0) = 0, (1∙1) = 1 | (0 ⋀ 0) = 0, (0 ⋀ 1) = 0, (1 ⋀ 0) = 1, (1 ⋀ 1) = 1 |

| difference | 1- | NOT | ¬ | ~ | negation | (1 -(1))=0, (1- (0)) =1 | ¬(1)=0, ¬(0)=1 |

The "laws" of propositional calculus: The distributive law, associative law, and commutative laws apply with one MAJOR exception: a distributive law exists for + over ∙ (!).

| Algebraic laws | Algebra formulas | Boolean formulas | Propositional formulas | |

| Commutation | ( a + b ) = ( b + a ) | ( a + b ) = ( b + a ) | ( a V b ) = ( b V a ) | |

| Commutation | ( a ∙ b ) = ( b ∙ a ) | ( a ∙ b ) = ( b ∙ a ) | ( a & b ) = ( b & a ) | |

| Association | ((a+b)+c) =( (a+(b+c)) | ((a+b)+c) =( (a+(b+c)) | ((a V b) V c) = (a V (b V c) ) | |

| Association | ( (a∙b)∙c) = (a∙(b∙c) ) | ( (a∙b)∙c) = (a∙(b∙c) ) | ((a & b) & c) = (a & (b & c) ) | |

| a+(b∙c) = (a+b) ∙ (a+c) | a V (b & c) = (a V b) & (a V c) | Distribution of OR over AND | ||

| Distribution | a∙(b+c) = (a∙b) + (a∙c) | a∙(b+c) = (a∙b) + (a∙c) | a & (b V c) = (a & b) V (a & c) |

Other common connectives: The other connectives are usually defined in terms of the three simple connectives NOT, OR, AND:

- IMPLY: ((~(a) V (a)) ≡ ((a) → (b))

- BICONDITIONAL or LOGICAL EQUIVALENCE: (a ←→ b) ≡ ( (a & b) V ( ~(a) & ~(b) )

Logical implication does not behave according to the commutative or distributive, associative or rules listed above.

Synthesis and Analysis: One process for synthesis creates a propositional formula from a truth table that is laid down according to a specification. Another approach uses following "theorems" and the notion of substitution to create as complex a formula as desired (cf McClusky 1965:85):

- (x V 0) = x (Identity)

- (x & 1) = x (Identity)

- (x & ~(x)) = 0 (Complements)

- (x V ~(x)) = 1 (Complements, (x → x) )

- ~(~(x)) = x (Involution, double negation)

- Example: Start with proposition "a". Equate (a V 0) = a. Substitute (b & ~(b))=0 for 0: (a V (b & ~(b)))=a. Distribute "a V" across (b & ~(b)): (a V b) & (a V ~(b) ) = a. Observe that (a V ~(b)) = (~(b) V a) ≡ (b → a). Likewise observe that (a V b) = (b V a) and b = ~(~(b)) so (b V a) = (~(~b)) V a) ≡ (~(b) → a). Put both of these back into the equivalence: (~(b) → a) & (b → a) = a.

| = a | |||||||||||

| b | a | ( ( ( ~ | (b) | → | a) | & | (b | → | a) ) | = | a |

| 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 |

| 0 | 1 | 1 | 0 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 |

| 1 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 |

| 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

Quite often, if a particular place in the table is unimportant the table's designer uses a third symbol "d" ("don't care") for added flexibility.

Analysis is the opposite process whereby, when given a propositional formula, the truth table and perhaps a Karnaugh map are created from the table. The above theorems also

Reduction: Both synthesis and analysis quite often lead to reduction or simplification of the formula, or its conversion to what is known as disjunctive normal form ( or less commonly, conjunctive normal form. A number of tools (methods, algorithms) are available to do this.

Besides the theorems above, The single most important of DeMorgan's theorem is the following (cf McClusky p. 87, PM p. 119-120):

- (a & b & ... & z) = ~(~a V ~b V ... V ~z)

- (a V b V ... V z) = ~(~a & ~b & ... & ~z)

Formal development

[edit]The topic presupposes a philosophy of the nature of sensations of objectsand and their being (i.e. ontology ). The synthesis (creation) and analysis of propositional formulas appear in philosophy, mathematics, and electrical engineering. Contributions to the art have come from all three disciplines.

Propositions

[edit]For the purposes of the propositional calculus, propositions (sentences, assertions) are considered to be either simple or compound.

Simple sentences are declarative in nature, that is, they make assertions about the condition or nature of a particular object of sensation e.g. "This cow is blue", "There's a coyote!" ["That coyote IS over there.]". [1]. Thus the simple "primitive" assertions must be about specific objects or specific states of mind. Each must have at least a noun (an immediate object of thought or observation), a verb (in the active voice, present tense preferred), and perhaps an adjective or adverb. "Dog!" probably implies "I see a dog" but should be rejected as too ambiguous.

- Example: "This sentence is simple"; "This squirrel is dead"; "This dog runs", "That cow is blue", "Switch M31 is closed", "This cap is off", "Tomorrow is Friday", "This statement is false", etc.

A compound sentence is a series of simple sentences joined (concatenated) by what are called sentential connectives, the common ones being OR, AND, and IF ..., THEN. BUT is treated the same as AND, and OR and AND sometimes are confused so care is required. A compound sentence can usually be reworded into a series of simple sentences, although the result will probably sound stilted.

- Example: "This sentence is either compound or simple, but indeed it is a run-on sentence." Rephrased before breaking into simple sentences this could be written: "This sentence is compound OR this sentence is simple AND this sentence is a run-on sentence." Broken into its simple sentences it is "This sentence is compound" OR "This sentence is simple" AND "This sentence is a run-on sentence."

NEGATION: In all cases a propositional system must, either directly or indirectly, include a means to express NEGATION. As a connective NEGATION occurs as NOR, i.e. in the phrase "NEITHER cold [stops me] NOR heat stops me." The NOT-AND or NAND has no usage in English but appears indirectly as NEITHER... NOR.... .[2]

"NOT" is unlike the other connectives. In a sense it is a degenerative binary operator. It derives from Aristotle's law of contradiction: Our intuition (that is, our knowing based upon repeated observation and inductive reasoning ) is unwilling to accept simultaneous assertions of BEING (Cow!) and NOT-BEING (Not-cow!), or assertions of QUALITY (Blue cow!) and NOT-QUALITY (Not-blue cow!). When applied to the same object simultaneously in place and time we say these paired assertions { BEING, NOT-BEING } and { QUALITY, NOT-QUALITY } are contradictory or inconsistent. Russell (1912) believed, in the manner of the rationalists, this knowing about contradiction to be "self-evident" i.e. derived from a priori (intrinsic, built-in) awareness; the empiricists such as Locke and Hume disagreed saying it is derived from experience.

- Example: If this morning I look out my window and observe something in my field and utter: "That cow is blue!", then I am not allowed (within the boundaries of sanity) to go on in the same breath and assert: "That cow is not blue!". Similar difficulties arrise if I assert "That cow is blue", but my wife asserts: "That cow is not blue!".

If for some reason, such as the consequence of an analysis or investigation, both assertions about an object exist in a place and time, then the observations are said to be inconsistent.

- Example: My assertion: "That cow is blue" and my wife's assertion "That cow is not blue!" are inconsistent assertions.

Assignment of variables for simple sentences: Each unique simple sentence (proposition, assertion) is assigned a unique symbol called a variable. If a simple sentence appears more than once in a compound sentence it is always assigned the same variable.

- Example: "If I have some food and it's noon and it's not raining, then we are going on a picnic; but if it is raining then we will eat at Fishy's."

"I have some food" = f; "It's noon" = n; "It is raining" = r, "We are going on a picnic" = p, "We will eat at Fishy's." = h.

- Example: "If the motor's over-temp switch "OTM" is closed and start button "PB1" is pushed, OR if the motor's over-temp switch "OTM" is open and the emergency-start botton "ES" is held in, THEN the motor "M" starts."

"OTM is closed"; "PB1 is pushed"; "OTM is NOT-closed [i.e. open]"; "ES is held in [i.e. active]";

Sentential (propositional) connectives: The variables that stand in place of the sentences are linked by their sentential connectives (also called logical connectives, operators, logic gates). The connectives derive their intuitive meanings from their use in common language. The most common ones are NOT (IT'S NOT THE CASE THAT ...), OR (inclusive-or: "a" OR "b" or both simultaneously), AND (also "but"), and IF ... THEN ... ("a IMPLIES b").

Mathematical logic adds EQUIVALENCE ("a" IF AND ONLY IF "b", "a" IFF "b", "a" IS NECESSARY AND SUFFICIENT FOR "b").

Electrical engineering creates specific operator-symbols for NOT, AND, and OR, NOR (OR followed by NOT), NAND (AND followed by NOT; also called "the stroke" | ), XOR (eXclusive-OR: a or b but not both, "not-equivalent", "addition without carry"), and XNOR (EQUIVALENCE).

Computer science adds the CASE operator also used by engineering (IF-THEN-ELSE: ( ("c" AND "a") OR ("NOT c" AND "b") ).

Algebra of propositions and their connectives: Once a "proposition" (sentence) has been converted into a propositional formula, the formula can be manipulated in an algebra not too different from arithmetic. An algebra (i) generalizes formulas by use of variables to represent constants (numbers), (ii) presents rules for creating formulas from formulas, (iii) presents rules for simplifying formulas, (iv) presents rules for the evaluation of a formula.

The propositional algebra closely resembles an arithmetic that starts with three rules symbolized by OR, AND, and NEGATE [NOT], variables (e.g. a, b, c ... x, y, z,...), two constants 0, 1, the equate-symbol =, and parentheses ( , ) . But unlike conventional arithmetic, only one of two values are assigned to any variable and to the outcome of an operation[ref> Engineering sometimes uses the + and ∙ when no confusion can result.</ref>. Because only 0 or 1 can result from an operation, there is no "carry" for addition. So a choice has to be made: either 1 + 1 = 1 (OR) or 1 + 1 = 0 (exclusive-or, XOR).

AND, OR: At least one ternary relation ((a, b), c) is required if we are to combine two propositions, each with their own truth or falsity, into the single assertion with a single truth or falsity.[3] As noted above, as algebraic operators these both behave according to the commutative law, i.e. (&(f, p), e) = (&(p, f), e).

- Example: "I have food" AND "I am picnicking." f = "I have food", p = "I am picnicking": (f & p) = e. Given this conjunction of events I am probably eating "e".

- Example: "I have food" OR "I am picnicking." This is ambiguous as to exactly what is happening. I may be walking down the street with a armful of groceries, OR I may be picnicking with a friend who's brought the food, OR I have my food spread out on my picnic blanket.

IF ... THEN ...: This operator does not behave according to the commutative law. Thus (→(f, p), e) ≠ (→(p, f), e). In spoken language it also contributes a sense of causality.

- Example: IF "I have food" THEN "I am picnicking": (f → p) = e

Common usage expects that, at least intuitively, "my having food" is a causitive factor in my "going-on-a-picnic" behavior. But in the propositional logic this is not the case: "IF "f" THEN "p" " is just formally defined as NOT-"f" OR "p" (~(f) V p ). As this is not common English speech the use of this operator can be confusing. It is not used in electrical engineering.

- Example: "It's not the case that 'I have food'" OR "I am picnicking" is considered logically equivalent to "IF 'I have food' THEN 'I am picnicking.'"

- (~(f) V p) ≡ (f → p)

Logical EQUIVALENCE: Logical equivalence is not identity. In the following example, "picnicking" is not identical to "having food"; "having food" is identical to "having food". But the two propositions are rendered "equivalent" by the conjunction (the AND) of formula ( f → p ) with its converse formula ( p → f ). In the following the first formula (f → p) is called the NECESSARY condition, and the converse ( p → f ) is called the SUFFICIENT condition. When conjoined by AND they are said to be NECESSARY and SUFFICIENT and thus EQUIVALENT. If one jokes that "Having a basket full of bread, wine and cheese is same as goin' on a picnic" they are asserting the (effective) equivalence of the two simple sentences.

- Example: (IF "I have food" THEN "I am picnicking") & (IF "I am picnicking" THEN "I have food").

- ( ( f → p ) & ( p → f ) ) ≡ ( f = p )

IF ... THEN ... ELSE: This operator appears in computer science as the simplest CASE operator and in electrical engineering as the AND-OR-SELECT operator. Although it sounds like two implications it is not.

- Example: IF "I have food" THEN "I am going on a picnic" ELSE (otherwise) "I am taking a nap." = ( "I have food" AND "I am going on a picnic" ) OR ( "Its not the case that 'I have food'" AND "I am taking a nap." )

- "I have food" = f, "I am going on a picnic" = p, "I am taking a nap" = n:

- ("f" AND "p") OR ( NOT-"f" AND "n"): ( (f & p) V (~(f) & a) )

Formal development of propositional formulas

[edit]There are at least two possible approaches to the development of a propositional calculus: (1) The notion of substitution together with the definition of the connectives (operators) by use of truth tables, or (ii) A formal axiomatic system (cf Tarski 1941:146-147).

System based on truth tables

[edit]

Truth table definitions: The following are to be regarded as definitions. As only 16 tables (24) are possible, the choice of symbolization is necessarily limited.

| variable | variable | NOT | NOT | AND | OR | variable | variable | NOT | NOT | AND | OR | variable | variable | NOT | NOT | AND | OR | ||

| b | a | ~(b) | ~(a) | (b & a) | (b V a) | b | a | ~(b) | ~(a) | (b & a) | (b V a) | b | a | ~(b) | ~(a) | (b & a) | (b V a) | ||

| ※ | ※ | ℥ | ℥ | ※ | ※ | 0 | 0 | 1 | 1 | 0 | 0 | F | F | T | T | F | F | ||

| ※ | ℥ | ℥ | ※ | ※ | ℥ | 0 | 1 | 1 | 0 | 0 | 1 | F | T | T | F | F | T | ||

| ℥ | ※ | ※ | ℥ | ※ | ℥ | 1 | 0 | 0 | 1 | 0 | 1 | T | F | F | T | F | T | ||

| ℥ | ℥ | ※ | ※ | ℥ | ℥ | 1 | 1 | 0 | 0 | 1 | 1 | T | T | F | F | T | T |

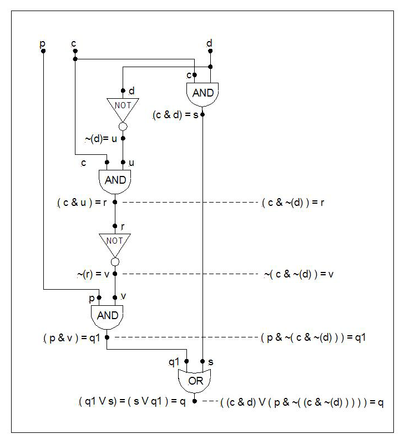

Engineering-symbol formation rules: A typical treatment used by engineers employs diagrams made from one-input NOT (inverter) symbols, and 2 input AND, OR, NOT, NOR, XOR and AND-OR-SELECT operator-symbols. These are linked by lines to indicate substitution. The recommended procedure is to start at the top or right with a labelling of the input-variables. Assign variable names to each operator's output (e.g. u, v, w in the drawing). Start at the top and connect the inputs to their operators. Connect the outputs of the operators to operators further down the line. Make appropriate substitutions starting at the top to derive the final formula(s) at the bottom (e.g. q in the drawing).

Evaluation of formulas: Any two pairings of symbols is possible, for example: { ℥, ※ } , { T, F }, { 1, 0 ), { ON, OFF }, { |, O, }, { open, closed }, { up, down }, { +5 volts, 0 volts } etc. The usual process is to "map" (put into 1-1 correspondence) definitions such as { door_open = +5 volts, door_closed = 0 volts } to { 1, 0 }.

- Caution is advised. It is quite common to reverse the "sense" of the values even in the same system. For example, switches come in two varieties: normally-open and normally-closed. So a system of two switches might invert the evaluation of one of the switches based upon behavior in the circuit: { { switch_#1_active = +5 volts at node B17, switch_#1_inactive = 0 volts node B17, }, { switch_#2_active = 0 volts at node B18, switch_#2_inactive = +5 volts node B18 } }. Also, { T, F } should be reserved for abbreviations having to do with "Truth" and "Falsehood".

Axiomatic system

[edit]The following formal axiomatization follows a treatment in Suppes 1957. It provides all the necessary ingredients to generate the important theorems and to evaluate (a V b), (a & b) and

Primitive notions: "object" (existence), "is the same as"

Non-axiomatic sign: Defined as: ≡ (is identical to by definition).

- existence: ∃

- variables: letters {a, b, ... z, ...},

- connective-symbols: V (OR), & (AND), ~ (NOT).

- Predicate symbol: = (equates, "is the same as", used for assignment of a name to a formula)

- parentheses: ), (

Concatenation-formation rules: An object is created concatenation of symbols into a "symbol string".

- Example: ))&~(ab))V) is a string of symbols (This string is not well-formed per the process to be described below.)

Well-formed formula wff: The rules that dictate what is and is not a well-formed formula are, alternately called "the grammar", or "the syntax". The rules must be absolutely mechanical and must leave no chance for ambiguity. They proceed by an induction process that begins with a basis (i) and is followed by formation rule (ii). A third rule -- substitution -- is also required in this system.

- The following system -- based on the objects ~(a)=vi and (a R2 b)=vi -- is an example and by no means the only possible system. Another system can be based upon objects vi=R1(a) and vi=R2(a, b) where R1 is a binary relation e.g. the ordered-pair (a,c), and R2 is a ternary relation, i.e. ((a,b), d).

Definition of well-formed formula:

- (i) If s is a variable then (s)=s is a formula;

- (ii) if vi is a variable and s and t are formulas then ~(s)=vi is a formula, then (s V t)=vi, (s & t)=vi, (s → t)=vi, (s ←→ t)=vi are formulas.

- (iii) The only formulas are those given by rules (i) and (ii).

Substitution rule: Substitution is the method by which one plugs a constant or a formula inside a formula in place of a variable. The rule is: Throughout the formula, wherever the variable-to-be-substituted is found, the substitution of the formula or constant for the variable must take place.

Development of the axioms: If "nothing" (emptiness) is symbolized by the sign "0" (the empty set) and "all" (fullness, completeness) is symbolized by the sign "1" then the law of contradiction can be used to define the notion of nothing and the law of excluded middle can be used to define the notion of all:

- (2) Incompatibility (contradiction): (O & ~(O)) ≡ 0

- (3) Law of excluded middle: (O V ~(O)) ≡ 1

Axioms (Huntington 1908, Suppes 1957:204, McCluskey 1965:85):

- 0 Existence: ∃(a)∃(b) a ≠ b

- 1 Right-hand identity: (a V 0) = a

- 2 Right-hand identity: (a & 1) = a

- 3 Commutative law for V: (a V b) = (b V a)

- 4 Commutative law for &: (a & b) = (b & a)

- 5 Distributive law: (a & (b V c) = ((a & b) V (a & c))

- 6 Distributive law: (a V (b & c) = ((a V b) & (a V c))

- 7 Law of excluded middle: (a V ~(a)) = 1

- 8 law of contradiction:(a & ~(a)) = 0

Major theorems derived from this set of axioms:

- 9 Absorption, idempotency: (a V a) = a

- 10 Absorption, idempotency: (a & a) = a

- 11 Null element: (a V 1) = 1

- 12 Null element: (a & 0) = 0

- 13 (0 ≠ 1)

- 14 Double negation: ~(~(a)) = a

- 18 Associative law: ((a V b) V c) = (a V (b V c))

- 19 Associative law: ((a & b) & c) = (a & (b & c))

- 23 DeMorgan's law: ~(a V b) = ~(a) V ~(b))

- 24 DeMorgan's law: ~(a & b) = ~(a) V ~(b))

Example: Prove formula #9:

- Start with 1: (a V 0) = a

- Substitute 7 into 1: (a V (a & ~a)) = a

- By 5 (distributive law): ((a V a) & (a V ~a)) = a

- By 7 (LoEM: (a V ~a)=1): ((a V a) & 1) = a

- Substitute u for (a V a): (u & 1) = a

- By 2 (identity): (u & 1) = u = a

- By substitution of (a V a)=u: (a V a) = a. Q.E.D.

Example: Evaluate (a & b) for a = 0, b = 1:

- Prove the theorems 9, 10, 11, 12, 18, and 19 and use these in the following:

- ((a=0) & (b=1)) =?

- Into the above for 0 and 1, substitute (a & ~(a))=0 and (a V (~a))=1 : ((a & ~(a)) & (a V (~a)) = ( 0 & (a V ~(a))) =?

- By 6 (distributive law): ((0 & a) V (0 & ~(a)) =?

- By 4 (commutation): ((a & 0) V (~(a) & 0)) =?

- By substitution: ((a & 0) V (u & 0)) =?

- By 12 (null element): ((a & 0) V 0) =?

- By substitution: ( w & 0) =?

- By 12 (null element): 0 =?

Synthesis of a formula by substitution: Example

[edit]The following example works for either system. Given the collection of formulas derived from either speech (example: c = "It's noon", d = "It's a nice day", p = "I have food", q = "We're going picnicking" ) or from engineering (example: c = "Clock-signal is active", d = "Motor-start switch is closed", p = "Motor-TEMP okay", q = "Power is ON to motor" ) the following set of simple formulas will be used to derive a final formula q:

- { ~(d) = u, (c & d) = s, (c & u ) = r, ~(r) = v, (p & v) = q, (s V w) = q }

- Substitute ~(d)=u for u in (c & u): (c & ~(d))=r.

- Substitute (c & ~(d))=r for r in ~(r)=v: ~((c & ~(d)))=v

- Substitute ~((c & ~(d))=v for v in (p & v): (p & ~((c & ~(d)))) = w.

- Substitute (p & ~((c & ~(d))))=w for w in (s V w): (s V (p & ~((c & ~(d))))) = q

- Substitute (c & d)=s in for s in (s V (p & ~((c & ~(d))))) = q:

- ((c & d) V (p & ~((c & ~(d))))) = q

- Substitute (c & d)=s in for s in (s V (p & ~((c & ~(d))))) = q:

Well-formed formula (wff)

[edit]Some observations are possible about a well-formed formula assembled per a grammar such as one described above.[ref> Enderton sketches an algorithm that constructs an upside-down tree with the formula at the root; its leaves will be the variables in the formula, e.g. (c, d, q, c, d) and these leaves point upward to the first connectives, etc. (Enderton 2002:17 and 29ff).</ref> (i) The left end is enclosed in a (, the right end by a ) followed by =, (ii) The parentheses come in pairs, and (iii) there is an equal number of left ( and right ) parentheses. With respect to the other symbols, ~s, ~&, ~V, &&, &V, VV, V&, &s, Vs are not allowed ("s" is any variable).

Example: A simple parenthesis checker (does not test for errors such as " ~V " and is easily fooled) starts at the left end of the symbol string and sets a counter to 0. If the left-most symbol is not ( then error else add 1 to counter and continue. Proceed to the right, ignoring all symbols but ( and ); add 1 each time a ( occurs, and subtract 1 if ) occurs. If the count goes to 0, check to see that an = sign is the next symbol to the right else error.

This method locates the "deepest-in" pair(s) of parentheses. It also locates the principal (outermost) connective, V in this example, which has a count of 1 (the same count as the outer parentheses).

Example:

| ( | ( | c | & | d | ) | V | ( | q | & | ~ | ( | c | & | ~ | ( | d | ) | ) | ) | ) | = | q2 | ||

| count | 0 | 1 | 2 | 2 | 2 | 2 | 1 | 1 | 2 | 2 | 2 | 2 | 3 | 3 | 3 | 3 | 4 | 4 | 3 | 2 | 1 | 0 | 0 |

Evaluating a wff

[edit]An evaluation in a deductive system assigns "values" to the variables (the propositions). In n-ary (e.g. ternary or 3-symbol) systems v variables will create tables with nv rows.

Binary (two-symbol) evaluations

[edit]Either a small set of axioms can be used to define the behavior of the symbols, or truth tables for each symbol can be used.

Axioms based on NOT and OR

[edit]- Axiom: a = a

- Axiom: ~(1) = 0

- Axiom: ~(0) = 1

- Axiom: (a V a) = a ; thus (0 V 0) = 0, (1 V 1) = 1

- Axiom: (~(a) V a) = 1 ; thus (~(0) V 0) = 1, (~(1) V 1) = 1

- Definition: ( a & b ) ≡ ~(~(a) V ~(b))

- Definition: ( a → b ) ≡ (~(a) V b)

Axioms based on NOT and AND

[edit]- Axiom: a = a

- Axiom: ~(T) = F

- Axiom: ~(F) = T

- Axiom: (a & a) = a ; thus (T & T) = T, (F & F) = F

- Axiom: ~(~(a) & a) = 1 ; thus ~(~(0) & 0) = 1, ~(~(1) & 1) = 1

- Definition: ( a V b ) ≡ ~(~(a) & ~(b))

- Definition: ( a → b ) ≡ (~(a) V b)

Axioms based on truth table definitions

[edit]In a binary (i.e. two-symbol) evaluation, any two unique symbols can work. In general, v variables create tables with 2v rows. If a value is not known in a binary evaluation, it may be written as "u" or if not important, as "d" (don't care); but a truth table is still required with a full assignment of all combinations of variable-value assignments.

The primitive notion of NOT results in the two axioms ~※ ≡ ℥, and ~℥ ≡ ※ that indicate that only two symbols will be allowed in the system. 16 operators (connectives) with 2 symbols as input and one output are possible:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | ~7 | ~6 | ~5 | ~4 | ~3 | ~2 | ~1 | ~0 | ||

| incompatibility | (a "+" b) | equivalence | tautology | ||||||||||||||

| (a NOR b) | NOT(a) | (a XOR b) | (a NAND b) | (a AND b) | (a XNOR b) | (a) | (a IMPLIES b) | (b) | (b IMPLIES a) | (a OR b) | |||||||

| a | b | ~(a V b) | ~(b) | ~(a) | (a ⊕ b) | b) | (a & b) | ~(a ⊕ b) | (a) | (a → b) | (b) | (a V b) | |||||

| 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 |

| 1 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 1 |

| 0 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 |

| 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| (~a & a) | ~(~a & a) |

Evaluation: The first thing one needs is values i.e. constants for the variables. If they are known, these constants (e.g. T or F, or 1 or 0) are substituted for their variables one after another until all variables are accounted for. If a variable has no value, the formula is indeterminate. But the formula can be repeated and T plugged into the first formula and F plugged into the second formula and thereby produce both evaluations. In the extreme, where all 2n combinations of n variables are presented in one table, this is the method of truth tables.

Evaluation procedes by finding the "inner-most" [term(s), expression(s)] and evaluating it (or them) from e.g. left to right if there are more than one. Given the evaluation, the new inner-most [term](s) must be again found by a process similar to the parenthesis checker and evaluated. This process is repeated until the last evaluation is complete.

- Example: Given that (p, d, c) = (1, 1, 0) and the formula ((c & d) V (p & ~((c & ~(d)))))=q, find the value of q:

- Plug in all the constants for their variables: ((0 & 1) V (1 & ~((0 & ~(1)))))=q

- As found by the parenthesis algorithm, the innermost formula is u = ~(d) = ~(1) = 0

- ((0 & 1) V (1 & ~((0 & 0))))=q

- As found by a 2nd application of the parenthesis algorithm, the innermost formula now is r=(0 & 0)= 0

- ((0 & 1) V (1 & ~((0))))=q

- As found by a 3rd application of the parenthesis algorithm, the innermost formula is (0) = 0:

- ((0 & 1) V (1 & ~(0)))=q

- As found by a 4rd application of the parenthesis algorithm, the innermost formula is ~(0) = 1:

- ((0 & 1) V (1 & 1))=q

- As found by a 5th application of the parenthesis algorithm, there are two innermost formulas. Start with the one on the left: (0 & 1)=0

- (0 V (1 & 1))=q

- Evaluate the formula on the right: (1 & 1) = 1

- (0 V 1)=q

- 6th application of the parenthesis algorithm locates (0 V 1) = 1; this ends the evaluation.

- 1=q

| Step: | Description: | s | q | w | v | r | u | |||||||||||||||||||||

| 1 | Start with known wff | ( | ( | c | & | d | ) | V | ( | p | & | ~ | ( | ( | c | & | ~ | ( | d | ) | ) | ) | ) | ) | = | q | ||

| 2 | Plug in values for variables | ( | ( | 0 | & | 1 | ) | V | ( | 1 | & | ~ | ( | ( | 0 | & | ~ | ( | 1 | ) | ) | ) | ) | ) | = | q | ||

| 3a | count parentheses | count | 0 | 1 | 2 | 2 | 2 | 2 | 1 | 1 | 2 | 2 | 2 | 2 | 3 | 4 | 4 | 4 | 4 | 5 | 5 | 4 | 3 | 2 | 1 | 0 | 0 | |

| 3b | Find deepest formula e.g. u = ~(d) = ~(1): | ( | ( | 0 | & | 1 | ) | V | ( | 1 | & | ~ | ( | ( | 0 | & | ~ | ( | 1 | ) | ) | ) | ) | ) | = | q | ||

| 3c | Evaluate "deepest formula" e.g. u = 0: | 0 | ||||||||||||||||||||||||||

| 4a | Count parentheses: | count | 0 | 1 | 2 | 2 | 2 | 2 | 1 | 1 | 2 | 2 | 2 | 2 | 3 | 4 | 4 | 4 | 4 | 3 | 2 | 1 | 0 | 0 | ||||

| 4b | Find new "deepest formula": | ( | ( | 0 | & | 1 | ) | V | ( | 1 | & | ~ | ( | ( | 0 | & | 0 | ) | ) | ) | ) | = | q | |||||

| 4c | Evaluate new "deepest formula": | 0 | ||||||||||||||||||||||||||

| 5a | Count parentheses: | count | 0 | 1 | 2 | 2 | 2 | 2 | 1 | 1 | 2 | 2 | 2 | 2 | 3 | 3 | 2 | 1 | 0 | 0 | ||||||||

| 5b | Find new "deepest formula": | ( | ( | 0 | & | 1 | ) | V | ( | 1 | & | ~ | ( | 0 | ) | ) | ) | = | q | |||||||||

| 5c | Evaluate new "deepest formula": | 0 | ||||||||||||||||||||||||||

| 6a | Count parentheses: | count | 0 | 1 | 2 | 2 | 2 | 2 | 1 | 1 | 2 | 2 | 2 | 2 | 3 | 3 | 2 | 1 | 0 | 0 | ||||||||

| 6b | Find new "deepest formula": | ( | ( | 0 | & | 1 | ) | V | ( | 1 | & | ~ | ( | 0 | ) | ) | ) | = | q | |||||||||

| 6c | Evaluate new "deepest formula": | 1 | ||||||||||||||||||||||||||

| 7a | Count parentheses: | count | 0 | 1 | 2 | 2 | 2 | 2 | 1 | 1 | 2 | 2 | 2 | 2 | 1 | 0 | 0 | |||||||||||

| 7b | Find new "deepest formula": | ( | ( | 0 | & | 1 | ) | V | ( | 1 | & | 1 | ) | ) | = | q | ||||||||||||

| 7c | Evaluate new "deepest formula": | 0 | ||||||||||||||||||||||||||

| 8a | Count parentheses: | count | 0 | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 1 | 0 | 0 | |||||||||||||||

| 8b | Find new "deepest formula": | ( | 0 | V | ( | 1 | & | 1 | ) | ) | = | q | ||||||||||||||||

| 8c | Evaluate new "deepest formula": | 1 | ||||||||||||||||||||||||||

| 9a | Count parentheses: | count | 0 | 1 | 1 | 1 | 1 | 0 | ||||||||||||||||||||

| 9b | Find new "deepest formula": | ( | 0 | V | 1 | ) | = | q | ||||||||||||||||||||

| 9c | Evaluate new "deepest formula": | 1 | = | q |

The above example is evaluated in the 6th row of the truth-table shown below.

Truth table-analysis: A truth table is just a generalization of the above example. It creates 2n rows, one for each unique combination of variable-values, where n is the number of variables:

| TEMP okay | switch closed | clock active | motor ON | |||||||||||||||||||||

| food | nice day | noon | picnic | |||||||||||||||||||||

| s | q | w | v | r | u | |||||||||||||||||||

| row | p | d | c | ( | ( | c | & | d | ) | V | ( | p | & | ~ | ( | c | & | ~ | ( | d | ) | ) | ) | ) |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | ||||||||||

| 1 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | ||||||||||

| 2 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | ||||||||||

| 3 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | ||||||||||

| 4 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | ||||||||||

| 5 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 1 | 0 | ||||||||||

| 6 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | ||||||||||

| 7 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 |

Reduction to normal form

[edit]

> Requires some tools: truth table and indirectly Hasse diagram, and Karnaugh map.

> Karnaugh map: for small number of variables (6 or less) the use of Karnaugh maps. A Karnaugh map is a flattened (i.e. 2-dimensional) Hasse diagram.

Literal, term and alterm

[edit]In electrical engineering a variable v or its negation ~(v) is lumped together into a single notion called a literal. A string of literals connected by AND is called a term. A string of literals connected by OR is called an alterm. Typically the literal ~(v) is abbreviated ~v.

- Example: a, b, c, d are variables. ((( a & ~(b) ) & ~(c)) & d) is a term. This can be abbreviated as (a & ~b & ~c & d).

- Example: p, q, r, s are variables. (((p & ~(q) ) & r) & ~(s) ) is an alterm. This can be abbreviated as (p V ~q V r V ~s).

In the same way that a 2n-row truth table displays the valuation of a propositiional formula for all 2n, n variables produces a 2n-square Karnaugh map (even though we cannot draw it). For example, 3 variables produces 23 = 8 squares ; each of corresponding truth-table row and Karnaugh-map square represents one minterm. Thus 4 variables produces 16 rows and 16 squares, and thus 16 minterms. Any propositional formula can be reduced to a "logical sum" (OR) of the active (i.e. "1"- or "T"-valued) minterms. When in this form the formula is said to be in disjunctive normal form.

In the following table, observe the peculiar sequence of rows: (0, 1, 3, 2, 6, 7, 5, 4, 0). the first column is the decimal equivalent of the binary equivalent of the digits cba,

- Example: cba2 = c*22 + b*21 + a*20:

- cba = (c=1, b=0, a=0) = 1012 = 1*22 + 0*21 + 1*20 = 510

This sequence comes about because in each row, only one variable changes at a time. Gray code is derived from this notion. It can be extended to renderings of three and four-dimensional objects called Hasse diagrams. Hasse diagrams flattened into two dimensions these are Karnaugh maps.

| decimal equivalent of (c, b, a) | c | b | a | minterm |

| 0 | 0 | 0 | 0 | (~c & ~b & ~a) |

| 1 | 0 | 0 | 1 | (~c & ~b & a) |

| 3 | 0 | 1 | 1 | (~c & b & a) |

| 2 | 0 | 1 | 0 | (~c & b & ~a) |

| 6 | 1 | 1 | 0 | (c & b & ~a) |

| 7 | 1 | 1 | 1 | (c & b & a) |

| 5 | 1 | 0 | 1 | (c & ~b & a) |

| 4 | 1 | 0 | 0 | (c & ~b & ~a) |

| 0 | 0 | 0 | 0 | (~a & ~b & ~c) |

Complex formulas such as those for binary adders typically begin with a stage that decode the input binary code into its minterms. Any propositional formula can be reduced to its conjuctive normal form.

Reduction by use of the Map method (Veitch, Karnaugh)

[edit](1) Produce the formula's truth table

[edit]Produce the formula's truth table. Number its rows using the binary-equivalents of the variables (usually just sequentially 0 through n-1) for n variables.

- Technically, the propositional function has been reduced to its (unminimized) conjunctive normal form: each row has its minterm expression and these can be OR'd to produce the formula in its (unminimized) conjunctive normal form.

Example: ((c & d) V (p & ~(c & (~d)))) = q in conjuctive normal form is:

- ( (~p & d & c ) V (p & d & c) V (p & d & ~c) V (p & ~d & ~c) ) = q

However, this formula be reduced both in the number of terms (from 4 to 3) and in the total count of its literals (12 to 6).

| row | Minterms | p | d | c | ( | ( | c | & | d | ) | V | ( | p | & | ~ | ( | ( | c | & | ~ | ( | d | ) | ) | ) | ) | ) | Active minterms | Formula in conjunctive normal form |

| 0 | ( ~p & ~d & ~c ) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | ||||||||||||||

| 1 | ( ~p & ~d & c) | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | ||||||||||||||

| 2 | ( ~p & d & ~c ) | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | ||||||||||||||

| 3 | ( ~p & d & c ) | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | (~p & d & c) | |||||||||||||

| 4 | ( p & ~d & ~c ) | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | (~p & d & c) | |||||||||||||

| 5 | ( p & ~d & c ) | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 1 | 0 | ||||||||||||||

| 6 | ( p & d & ~c ) | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | (p & d & ~c) | |||||||||||||

| 7 | ( p & d & c ) | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | ( p & d & c ) | |||||||||||||

| q | = (~p&d&c) V (~p&d&c) V (p&d&~c ) V (p&d&c ) |

(2) Create the formula's Karnaugh map

[edit]Use the values of the formula (e.g. "p") found by the truth-table method and place them in their into their respective (associated) Karnaugh squares. If values of "d" for "don't care" appear in the table, this adds flexibility during the reduction phase.

| (~d & ~c) | (~d & c) | (d & c) | (d & ~c) | (~d & ~c) | (~d & c) | (d & c) | (d & ~c) | |||

| ~d | ~d | d | d | ~d | ~d | d | d | |||

| ~p | row 0 | row 1 | row 3 | row 2 | ~p | 0 | 0 | 1 | 0 | |

| p | row 4 | row 5 | row 7 | row 6 | p | 1 | 0 | 1 | 1 | |

| ~c | c | c | ~c | ~c | c | c | ~c |

(3) Reduce minterms

[edit]Minterms of adjacent (abutting) 1-squares (T-squares) can be reduced with respect to the number of their literals. For example, squares #3 and #7 abut. These two abutting squares can loose one literal (e.g. "p" from squares #3 and #7), four squares in a rectangle or square loose two literals, eight squares in a rectangle loose 3 literals, etc. (One seeks out the largest square or rectangles.) This process continues until all abutting squares are accounted for, at which point the propositional formula is said to be minimized.

Example: The map method usually is done by inspection. The following example expands the algebraic method to show the "trick" behind the combining of terms on a Karnaugh map:

- Minterms #3 and #7 abut, #7 and #6 abut, and #4 and #6 abut (because the table's edges wrap around). So each of these pairs can be reduced.

Observe that by the Idempotency law (A V A) = A, we can create more terms. Then by association and distributive laws the variables to disappear can be paired, and then "disappeared" with the Law of contradiction (x & ~x)=0. The following uses brackets [ and ] only to keep track of the terms; they have no special significance:

- Put the formula in conjuctive normal form with the formula to be reduced:

- q = ( (~p & d & c ) V (p & d & c) V (p & d & ~c) V (p & ~d & ~c) ) = ( #3 V #7 V #6 V #4 )

- Idempotency (absorption) [ A V A) = A:

- ( #3 V [ #7 V #7 ] V [ #6 V #6 ] V #4 )

- Associative law (x V (y V z)) = ( (x V y) V z )

- ( [ #3 V #7 ] V [ #7 V #6 ] V [ #6 V #4] )

- [ (~p & d & c ) V (p & d & c) ] V [ (p & d & c) V (p & d & ~c) ] V [ (p & d & ~c) V (p & ~d & ~c) ].

- Distributive law ( x & (y V z) ) = ( (x & y) V (x & z) ) :

- ( [ (d & c) V (~p & p) ] V [ (p & d) V (~c & c) ] V [ (p & ~c) V (c & ~c) ] )

- Commutative law and law of contradiction (x & ~x) = (~x & x) = 0:

- ( [ (d & c) V (0) ] V [ (p & d) V (0) ] V [ (p & ~c) V (0) ] )

- Law of identity ( x V 0 ) = x leading to the reduced form of the formula:

- q = ( (d & c) V (p & d) V (p & ~c) )

(4) Verify reduction with a truth table

[edit]| row | Minterms | p | d | c | ( | ( | d | & | c | ) | V | ( | p | & | d | ) | V | ( | p | & | ~ | ( | c | ) | ) |

| 0 | ( ~p & ~d & ~c ) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | |||||||||

| 1 | ( ~p & ~d & c) | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | |||||||||

| 2 | ( ~p & d & ~c ) | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | |||||||||

| 3 | ( ~p & d & c ) | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | |||||||||

| 4 | ( p & ~d & ~c ) | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | |||||||||

| 5 | ( p & ~d & c ) | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | |||||||||

| 6 | ( p & d & ~c ) | 1 | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | |||||||||

| 7 | ( p & d & c ) | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | |||||||||

| q |

Director's cut: Propositional formula with "feedback"

[edit]The notion of a propositional formula appearing as one of its own variables requires a formation rule that allows the assignment of the formula to a variable. In general there is no stipulation (either axiomatic or truth-table systems of objects and relations) that forbids this from happening. [4]

The simplest case occurs when an OR formula becomes one its own inputs e.g. p = q. Begin with (p V s) = q, then let p = q. Observe that q's "definition" depends on itself "q" as well as on "s" and the OR connective; this definition of q is thus impredicative. Either of two conditions can result[5]: oscillation or memory.

Without knowledge of what is going on “inside” the formula, it would appear (from the "outside") as if the the output is no longer a function of the inputs alone. That is, sometimes when looks at q one sees 0 and other times 1. To avoid this problem one has to know the state (condition) of the "hidden" variable p (i.e. the value of q fed back and assigned to p). When this is known the apparent inconsistency goes away.

To understand [predict] the behavior of formulas with feedback requires the more sophisticated analysis of sequential circuits. Propositional formulas with feedback lead, in their simplest form, to state machines; they also lead to memories in the form of Turing tapes and counter-machine counters. From combinations of these elements one can build any sort of bounded computational model (e.g. Turing machines, counter machines, register machines, Macintosh computers, etc).

Oscillation

[edit]In the abstract (ideal) case the simplest oscillating formula is a NOT fed back to itself: ~(~(p=q)) = q. Analysis of an abstract (ideal) propositional formula in a truth-table reveals an inconsistency for both p=1 and p=0 cases: When p=1, q=0, this cannot be because p=q; ditto for when p=0 and q=1.

| q | |||||||

| p | ~ | ( | p | ) | = q | ||

| 0 | 1 | 0 | 1 | q & p inconsistent | |||

| 1 | 0 | 1 | 0 | q & p inconsistent |

Oscillation with delay: If an delay[6] (ideal or non-ideal) is inserted in the abstract formula between p and q then p will oscillate between 1 and 0: 101010...101... ad infinitum. If either of the delay and NOT are not abstract (i.e. not ideal), the type of analysis to be used will be dependent upon the exact nature of the objects that make up the oscillator; such things fall outside mathematics and into engineering.

Analysis requires a delay to be inserted and then the loop cut between the delay and the input "p". The delay must be viewed as a kind of proposition that has "qd" (q-delayed) as output for "q" as input. This new proposition adds another column to the truth table. The inconsistency is now between "qd" and "p" as shown in red; two stable states resulting:

| q | ||||||||

| qd | p | ( | ~ | ( | p | ) | = q | |

| 0 | 0 | 1 | 0 | 1 | state 1 | |||

| 0 | 1 | 0 | 1 | 0 | qd & p inconsistent | |||

| 1 | 0 | 1 | 0 | 1 | qd & p inconsistent | |||

| 1 | 1 | 0 | 1 | 0 | state 0 |

Memory

[edit]

Without delay inconsistencies must be eliminated from a truth table analysis. With the notion of “delay”, this condition presents itself as a momentary inconsistency between the fed-back output variable q and p = qdelayed.

A truth table reveals the rows where inconsistencies occur between p = qdelayed at the input and q at the output. After "breaking" the feed-back[7], the truth table construction proceeds in the conventional manner. But afterwards, in every row the output q is compared to the now-independent input p and any inconsistencies between p and q are noted (i.e. p=0 together with q=1, or p=1 and q=0); when the "line" is "remade" both are rendered impossible by the Law of contradiction ~(p & ~p)). Rows revealing inconsistences are either considered transient states or just eliminated as inconsistent and hence "impossible".

Once-flip memory

[edit]About the simplest memory results when the output of an OR feeds back to one of its inputs, in this case output "q" feeds back into "p". Given that the formula is first evaluated (initialized) with p=0 & q=0, it will "flip" once when "set" by s=1. Thereafter, output "q" will sustain "q" in the "flipped" condition (state q=1). This behavior, now time-dependent, is shown by the state diagram to the right of the once-flip.

| q | ||||||||

| p | s | ( | s | V | p | ) | = q | |

| 0 | 0 | 0 | 0 | 0 | 0 | state 0, s=0 | ||

| 0 | 1 | 1 | 1 | 0 | q & p inconsistent | |||

| 1 | 0 | 0 | 1 | 1 | 1 | state 1 with s = 0 | ||

| 1 | 1 | 1 | 1 | 1 | 1 | state 1 with s = 1 |

Flip-flop memory

[edit]The next simplest case is the "set-reset" flip-flop shown below the once-flip. Given that r=0 & s=0 and q=0 at the outset, it is "set" (s=1) in a manner similar to the once-flip. It however has a provision to "reset" q=0 when "r"=1. And additional complication occurs if both set=1 and reset=1. In this formula, the set=1 forces the output q=1 so when and if (s=0 & r=1) the flip-flop will be reset. Or, if (s=1 & r=0) the flip-flop will be set. In the abstract (ideal) instance in which s=1 => s=0 & r=1 => r=0 simultaneously, the formula q will be indeterminate (undecidable). Due to delays in "real" OR, AND and NOT the result will be unknown at the outset but thereafter predicable.

| q | ||||||||||||||||

| p | s | r | ( | s | V | ( | p | & | ~ | ( | r | ) | ) | ) | = q | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | state 0 with ( s=0 & r=0 ) | ||||||

| 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | state 0 with ( s=0 & r=1 ) | ||||||

| 0 | 1 | 0 | 1 | 1 | 0 | 0 | 1 | 0 | q & p inconsistent | |||||||

| 0 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | q & p inconsistent | |||||||

| 1 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 1 | state 1 with ( s=0 & r=0 ) | ||||||

| 1 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | q & p inconsistent | |||||||

| 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | state 1 with ( s=1 & r=0 ) | ||||||

| 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | state 1 with s & r simultaneously 1 |

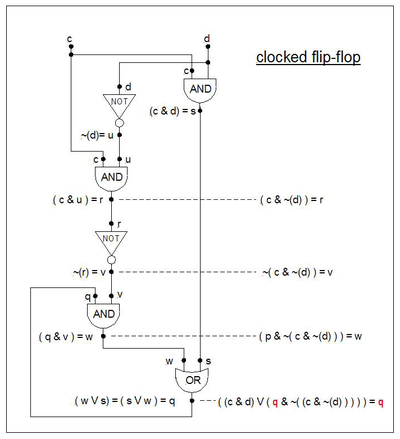

Clocked flip-flop memory

[edit]The formula known as "clocked flip-flop" memory ("c" is the "clock" and "d" is the "data") is given below. It works as follows: When c = 0 the data d (either 0 or 1) cannot "get through" to affect output q. When c = 1 the data d "gets through" and output q "follows" d's value. When c goes from 1 to 0 the last value of the data remains "trapped" at output "q". As long as c=0, d can change value without causing q to change.

Example: ( ( c & d ) V ( p & ( ~( c & ~( d ) ) ) ) = q, but now let p = q:

- Example: ( ( c & d ) V ( q & ( ~( c & ~( d ) ) ) ) = q

The state diagram is similar in shape to the flip-flop's state diagram, but with different labelling on the transitions.

| s | q | w | v | r | u | |||||||||||||||||||||||

| row | q | d | c | ( | ( | c | & | d | ) | V | ( | q | & | ~ | ( | ( | c | & | ~ | ( | d | ) | ) | ) | ) | ) | =q | Description |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | state 0 with ( s=0 & r=0 ), 0 is trapped | ||||||||||||

| 1 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 0 | state 0 with ( d=0 & c=1 ): q=0 is following d=0 | ||||||||||||

| 2 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | state 0 with ( d=1 & r=0 ), 0 is trapped | ||||||||||||

| 3 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | q & p inconsistent | |||||||||||||

| 4 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 | state 1 with (d =0 & c=0 ), 1 is trapped | ||||||||||||

| 5 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 1 | 0 | q & p inconsistent | |||||||||||||

| 6 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | state 1 with (d =1 & c=0 ), 1 is trapped | ||||||||||||

| 7 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | state 1 with ( d=1 & c=1 ): q=1 is following d=1 |

Historical development

[edit]Bertrand Russell (1912:74) lists three laws of thought that derive from Aristotle: (1) The law of identity: "Whatever is, is.", (2) The law of contradiction: "Nothing cannot both be and not be", and (3) The law of excluded middle: "Everything must be or not be."

- Example: Here O is an expression about an objects BEING or QUALITY:

- (1) Law of Identity: O = O

- (2) Law of contradiction: ~(O & ~(O))

- (3) Law of excluded middle: (O V ~(O))

The use of the word "everything" in the law of excluded middle renders Russell's expression of this law open to debate. If restricted to an expression about BEING or QUALITY with reference to a finite collection of objects (a finite "universe of discourse") -- the members of which can be investigated one after another for the presence or absence of the assertion -- then the law is considered intuitionistically appropriate. Thus an assertion such as: "This object must either BE or NOT BE (in the collection)", or "This object must either have this QUALITY or NOT have this QUALITY (relative to the objects in the collection)" is acceptable. See more at Venn diagram.

Although a propositional calculus originated with Aristotle, the notion of an algebra applied to propositions had to wait until the early 1800's. In an (adverse) reaction to the 2000 year tradition of Aristotle's syllogisms, John Locke's Essay concerning human understanding (1690) used the word semiotics (theory of the use of symbols). By 1826 Richard Whately had critically analyzed the syllogistic logic with a sympathy torward Locke's semiotics. George Bentham's work (1827) resulted in the notion of "quantification of the predicate" (1827) (nowdays symbolized as ∀ ≡ "for all"). A "row" instigated by William Hamilton over a priority dispute with De Morgan "inspired George Boole to write up his ideas on logic, and to publish them as MAL [Mathematical Analysis of Logic] in 1847" (Grattin-Guinness and Bornet 1997:xxviii).

About his contribution Grattin-Guinness and Bornet comment:

- "Boole's principal single innovation was [the] law (6.3)2 (i.e. xn = x) for logic: it stated that the mental acts of choosing the property x and choosing x again and again is the same as choosing x once... As consequence of it he formed the equations x•(1-x)=0 and x+(1-x)=1 which for him expressed respectively the law of contradiction and the law of excluded middle." For Boole "1" was the universe of discourse and "0" was nothing. (p. xxviiff)

Frege's massive undertaking resulted in a formal calculus of propositions, but his symbolism is so daunting that it had little influence excepting on one person: Bertrand Russell. First as the student of Alfred North Whitehead he studied the Frege's work and made a (famous, notorious) ememdation with respect to it (1904). This work led to a collatoration with Whitehead that, in the year 1912, produced the first volume of Principia Mathematica. It is here that, what we consider "modern" propositional logic, first appeared. In particular, PM introduces the notions of NOT and OR and the assertion symbol ⊦ as primitives. In terms of these notions they defined IMPLICATION → ( def. *1.01: ~p V q ), then AND (def. *3.01: ~(~p V ~q) ), then EQUIVALENCE p ←→ q (*4.01: (p → q) & ( q → p ) ).

Computation and switching logic:

- William Eccles and F. W. Jordan (1919) describe a "trigger relay" made from a vaccuum tube

- George Stibitz (1937) invents the binary adder using relays

- Example: Given binary bits ai and bi and carry_ini their summation Σi and carry_outi are:

- ( ( ai XOR bi ) XOR carry_ini )= Σi

- ( ai & bi ) V carry_ini ) = carry_outi;

- Alan Turing builds a multiplier using relays (1937-1938)

- Textbooks about "switching circuits" appear in early 1950's

- Willard Quine 1952, 1955 E. W. Veitch 1952 and M. Karnaugh (1953) develop map-methods for simplifying propositional functions

- E. J. McCluskey and H. Shorr develop a method for simplifying circuits

Footnotes

[edit]- ^ PM p. 91 eschews "the" because they require a clear-cut "object of sensation"; they stipulate the use of "this" (p. 91)

- ^ NEITHER... NOR... is a form of DeMorgan's law that asserts (A NAND B): (~A V ~B) = ~(A & B).

- ^ Both NOT and AND can be combined into a single connective such as NAND ( NOT "a AND b" )) but the resulting formulas are unwieldy. Ditto for NOT and OR.

- ^ McCluskey comments that "it could be argued that the analysis is still incomplete because the word statement "The outputs are equal to the previous values of the inputs" has not been obtained"; he goes on to dismiss such worries because "English is not a formal language in a mathematical sense, [and] it is not really possible to have a formal procedure for obtaining word statements" (p. 185).

- ^ More precisely, given enough "loop gain", either oscillation or memory will occur (cf McCluskey p. 191-2). In abstract (idealized) mathematical systems adequate loop gain is not a problem.

- ^ The notion of delay and the principle of local causation as caused ultimately by the speed of light appears in Robin Gandy (1980), "Church's thesis and Principles for Mechanisms", in J. Barwise, H. J. Keisler and K. Kunen, eds., The Kleene Symposium, North-Holland Publishing Company (1980) 123-148. Gandy considered this to be the most important of his principles: "Contemporary physics rejects the possibility of instantaneous action at a distance" (p. 135). Gandy was Alan Turing's student and close friend.

- ^ McKlusky p. 194-5 discusses "breaking the loop" and inserts "amplifiers" to do this; Wickes (p. 118-121) discuss inserting delays. McCluskey p. 195ff discusses the problem of "races" caused by delays.

References

[edit]- E. J. McCluskey 1965, Introduction to the Theory of Switching Circuits, McGraw-Hill Book Company, NY. No ISBN. Library of Congress Catalog Card Number 65-17394. McCluskey was a student of Willard Quine and developed some notable theorems with Quine and on his own. For those interested in the history, the book contains a wealth of references .

- Herbert Enderton, A Mathematical Introduction to Logic: Second Edition.

- Patrick Suppes 1957 (1999 Dover edition), Introduction to Logic, Dover Publications, Inc., Mineola, New York. ISBN 0-486-40687-3 (pbk.). This book is in print and readily available.

- On his page 204 in a footnote he references his set of axioms to E. V. Huntington, "Sets of Independent Postulates for the Algebra of Logic," transacctions of the American Mathematical Socienty, Vol. 5 91904) pp. 288-309.

- Alfred Tarski 1941 (1995 Dover edition), Introduction to Logic and to the Methodology of Deductive Sciences, Dover Publications, Inc., Mineola, New York. ISBN 0-486-28462-X (pbk.). This book is in print and readily available.