User:ScotXW/Linux as platform

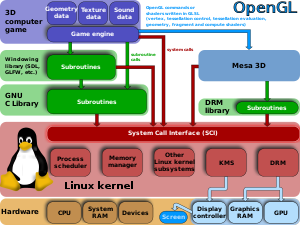

left: classes of computers

right: Linux kernel

far right: Software for Linux, especially graphical shells and GUI widget-libraries.

Linux kernel-based family of operating systems as open-source AND open platform for anybody anywhere (= for any hardware and for anybody who wants it).

Abstract


What is the Linux platform for software developers?

- Linux API

- windowing system: Wayland + XWayland

- abstraction APIs for the desktop:

- libinput for input events (to be used by Wayland compositors)

- libcanberra for outputting event sounds (available on top of libALSA (aka libasound) as well as on top of PulseAudio!)

- PulseAudio – prevailing sound server for desktop use

- abstraction APIs for video game development: (cf. Linux as gaming platform)

- in Mesa 3D/AMD Catalyst/Nvidia GeForce driver: OpenGL / OpenGL ES / etc.

- in Simple DirectMedia Layer/Simple and Fast Multimedia Library/OpenAL/etc. input and audio APIs (which are available cross-platform and not exclusive to Linux!)

- abstraction APIs for professional audio production:

- JACK Audio Connection Kit – prevailing sound server for professional audio production

- LADSPA & LV2 – prevailing APIs for plugins

What is the Linux platform for independent software vendors (ISVs)?

- compile against: Linux ABI

- package for one of the available package management systems

- distribute via

- optical media and retailers

- online distribution available for Linux

What is the Linux platform for end-users?

- burn your installation DVD with Brasero or K3b or prepare your USB flash drive with Universal USB Installer, partition and format your hard disk with GParted, install via Debian-Installer, Anaconda, Ubiquity, etc.

- graphical: KDE Plasma 5 / GNOME Shell / Cinnamon / etc.

- command-line: bash

- use GNOME Software to discover and install more software

- making it complicated: Revisiting How We Put Together Linux Systems

Advantages and benefits of free and open-source software

Market share vs. installed base

Market share can be estimated based on sales numbers. E.g. during Q2 2013, 79.3% of smartphones sold worldwide were running Android.[3] When discussing free software, the term "installed base" seems rather popular. It is installation, not embedment or pre-installation, that tailors a product to the owner's personal needs. Unfortunately, installed base, as opposed to market share, proves to be a tricky thing to gauge. However, the very wide adoption of the Linux kernel due to Android should result in a nice network effect.

Hardware support

Asked why Linux is not successful on desktop computers, Linus Torvalds said in August 2011:

| “ | On the desktop to be successful you have to support every possible piece of hardware, that the end-user could want to connect to his computer with a device driver; every printer needs to be supported... | ” |

| — Linus Torvalds, Linus Torvalds: Why Linux Is Not Successful On Desktop | ||

As a Linux user you still can't buy hardware blindly and expect it to work out of the box with all the functionality advertised on the package. For the Linux old-timer, this can mean hours of Internet searching to find hardware that has comprehensive Linux support. For the Linux newbie, it regularly results in frustration and hours spent in user-support forums, asking for help first inaccurately and then impolitely, then waiting a day for an answer, because the Linux newbie never checks before buying his printer. Then the Linux newbie becomes a frustrated and/or disappointed ex-Linux newbie and switches back to Windows, which was paid for and came pre-installed anyway; after all, Windows does play Blu-rays, lets you browse the Internet, and also plays DirectX computer games.

- Missing or inadequate hardware support is a sound argument when we compare the device-driver support that hardware vendors provide for the Microsoft Windows family of operating systems with, say, ATI's or Nvidia's Linux support for their graphics cards 14 years ago, or with sound card drivers.

- Linus's argument fails when looking at the fact that in October 2013 benchmarks showed the performance of Nvidia's proprietary graphics device drivers on Linux to be on par with Windows 8.1![4]

- Linus's argument fails when looking at OS X, which supports only its own hardware. But similar to Linux, OS X does struggle with printers, even to the point that Apple took over the development of CUPS! Also, the formerly IBM, now Lenovo, ThinkPads (which are laptops and not desktop computers) have had very good Linux support for a long time. So there have to be more reasons...

Other possible reasons for low market share

- maybe having a look at Direct Rendering Infrastructure#History

- maybe looking at the missing long-time stable Linux ABI

- maybe having a look at X11 "The X-Windows Disaster", X CCC2013: Server Security Disaster: "It's Worse Than It Looks"

- maybe User:ScotXW/Rendering APIs

See User:ScotXW/Linux as home computer platform for getting stuff done (or not) on your Linux home PC

Linux kernel

The Linux kernel blends its key tasks nicely; its source code offers the right amount of abstraction, freedom to re-design and programmability to developers, and the resulting binary offers stability, security and performance to end-users.

"If doing bandwidth vs. latency decisions then latency is the top goal. Yes we want to have bandwidth too – if possible." (Ingo Molnár, http://lkml.iu.edu//hypermail/linux/kernel/9906.0/0746.html)

Generally the Linux kernel is described as a well-designed and well-written kernel which gained some bloat along the way. It dominates supercomputers as well as smartphones and further embedded systems, but it is not particularly widespread on desktop computers. (Annotation: the WP should strive to explain its inner workings more professionally. The article Linux kernel is not good enough and the article Linux is simply crap, starting with the introduction.)

Interfaces of the Linux kernel


Let's look at the interfaces of the Linux kernel. Let's put all the available interfaces into one of four groups:

- The Linux API:

- because only the kernel can access the hardware, user-space software has to ask the kernel to access hardware for it, or has to ask for permission to do it; that means the Linux API is used all the time, so it has to be as lean and efficient as possible; so far the Linux kernel developers have been very meticulous about mainlining new system calls

- the Linux API shall make programming software that runs efficiently as easy and straightforward as possible

- the Linux API has been kept stable over decades

- because there are multiple protection rings and any state change costs cycles, state changes have to be kept to a minimum, e.g. by implementing marshalling where it offers an advantage

- The Linux ABI

- is of paramount importance to Independent software vendors (ISV) who sell proprietary software for Linux. There are a couple, see e.g. Category:Proprietary software for Linux.

- kernel-internal (APIs)

- stable in-kernel APIs are specifically not guaranteed by the Linux kernel developers; by not guaranteeing stable in-kernel APIs, the developers gain a lot of freedom to continue developing and evolving the Linux kernel

- kernel-internal (ABIs):

- since there are no stable APIs, there cannot be stable ABIs

- possible beneficiaries of stable in-kernel ABIs are hardware manufacturers: it would keep their proprietary kernel blobs (AMD Catalyst, Nvidia GeForce driver, etc.) portable across different versions of the Linux kernel without the need to adapt and recompile them for every kernel version
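The point above about keeping transitions between user space and kernel space to a minimum can be illustrated from user space. The following is only a minimal sketch, not how the kernel itself batches work: it assumes a POSIX system where Python's `os.writev` is available, and the helper names `write_batched` and `write_one_by_one` are made up for this example. Both helpers deliver the same bytes, but the first enters the kernel once and the second enters it once per chunk.

```python
import os

def write_batched(fd, chunks):
    """Hand all chunks to the kernel in a single writev() system call."""
    return os.writev(fd, chunks)

def write_one_by_one(fd, chunks):
    """Hand the same chunks to the kernel with one write() call per chunk."""
    return sum(os.write(fd, chunk) for chunk in chunks)

chunks = [b"Linux ", b"API ", b"example\n"]
read_end, write_end = os.pipe()
try:
    batched = write_batched(write_end, chunks)      # one kernel entry
    separate = write_one_by_one(write_end, chunks)  # three kernel entries
    data = os.read(read_end, 4096)                  # both copies are in the pipe
finally:
    os.close(read_end)
    os.close(write_end)
```

The result seen by the reader of the pipe is identical either way; the difference is only in how often the program had to cross into the kernel.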

API versus ABI

- Application programming interface (API) refers to source code. A stable API enables the portability of source code.

- Application binary interface (ABI) refers to machine code. A stable ABI enables the portability of binaries.

The Linux kernel has had a very stable kernel-to-userspace API. This means that program A, written against the Linux API in the year 2000 for Linux kernel 2.4, can be successfully compiled "against" Linux kernel 3.16 ... but both have to be compiled with the same options. If program A is compiled with options that induce incompatibilities, the resulting binary won't run equally well, or at all!
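A rough way to see the difference between the two interfaces from a running program is sketched below. It assumes a Unix-like system on which `ctypes.CDLL(None)` exposes the symbols of the already loaded C library. The first call goes through a source-level interface (Python's `os` module); the second calls the compiled `getpid` symbol directly, which only works because the symbol name, calling convention and return type of the binary are stable.

```python
import ctypes
import os

# Source-level (API) view: the os module wraps the C library call for us.
pid_via_api = os.getpid()

# Binary-level (ABI) view: use the already compiled C library of this process
# and call its exported getpid symbol directly. Nothing is recompiled here.
libc = ctypes.CDLL(None)
libc.getpid.restype = ctypes.c_int
pid_via_abi = libc.getpid()

print(pid_via_api == pid_via_abi)
```

If the binary interface of the C library changed incompatibly, the second call could break even though the source-level interface looked the same.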

E.g. the Debian software repositories contain 37500 packages with their source code. Debian, or any other Linux distribution, doesn't have to care about binary portability since it deals exclusively with free and open-source software! You do not need to care about a stable Linux ABI as long as you do not want to use proprietary software.

So IF somebody wanted an even bigger software ecosystem for Linux, comprised of open-source software AND of proprietary freeware and commercial software, THEN this somebody would have to provide a stable Linux ABI against which multiple releases of his/her Linux distribution, as well as other Linux distributions, can compile and be binary compatible!

Independent software vendors (ISVs), such as Siemens PLM Software, who sell NX (Unigraphics), do care about such a stable Linux ABI to ease their logistics. They can easily offer an NX version that runs on Debian (with commercial support). They can also offer one that runs on Red Hat Enterprise Linux or one that runs on SUSE Linux Enterprise Desktop. But without a stable Linux ABI specification that all of them (Debian, RHEL, SUSE and NX) adhere to when they compile the software into binaries, it cannot be guaranteed that one NX version will run as intended on all three Linux distributions. And providing commercial support for multiple versions of multiple Linux distributions is not feasible.

For Linux, there are e.g. the Linux Standard Base or the x32 ABI, which try to define a Linux ABI that Linux distributions and ISVs alike could compile against to finally achieve binary portability across all the Linux distributions that care about it.

Indeed, when people talk about the "Win32 API", what they actually mean is the "Win32 ABI". A binary which was compiled against the "Win32 ABI" will run on Win95 to Win7. The long-term stability of this ABI, together with the fact that Microsoft Windows had never been a walled garden (it is today!), had given the Microsoft Windows family of operating systems a huge competitive advantage. In the past, Microsoft concentrated on vendor lock-in by evangelizing the adoption of their proprietary DirectX API rather than on making Windows a walled garden.

Without a stable Linux ABI, Linux kernel-based operating systems are unnecessarily hard to support for ISVs. That includes of course the video game industry! The GNU/Linux controversy is fruitless, and talking about a Linux operating system instead of the (very large and very widely adopted) Linux kernel-based family of operating systems is just f*cking stupid.

- Stupid ISVs advertise a "Linux" version of their proprietary binary-only software. Does this statement really mean it will run on Debian, RHEL, Slackware, Gentoo, even OpenWrt?

- Less stupid ones clearly state a certain ABI, e.g. Ubuntu 14.04, which means their software, which you pay money for, is guaranteed to run on that certain "OS binary package", and they provide a certain range of support for this guarantee. It could run on Debian or RHEL as well, but finding out whether it does, and which quirks it has when doing so, is YOUR problem. This balkanizes (shatters) Linux for anybody who wants or needs to operate proprietary software on Linux.
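What "stating a certain ABI" can look like in practice is sketched below, purely as an illustration. The support matrix `SUPPORTED`, the helper names and the sample texts are hypothetical; the only real-world assumption is the `KEY=value` format of the `os-release` file that many distributions ship. An installer could read that file and refuse to promise anything outside the combinations the vendor actually tested.

```python
import shlex

# Hypothetical vendor support matrix: (distribution ID, version) pairs.
SUPPORTED = {("ubuntu", "14.04")}

def parse_os_release(text):
    """Parse KEY=value lines (values may be quoted) into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, raw = line.partition("=")
        parts = shlex.split(raw)  # strips shell-style quotes
        fields[key] = parts[0] if parts else ""
    return fields

def is_supported(fields):
    """True only for distributions the vendor explicitly guarantees."""
    return (fields.get("ID"), fields.get("VERSION_ID")) in SUPPORTED

# Sample contents, made up for this example.
ubuntu_sample = 'NAME="Ubuntu"\nID=ubuntu\nVERSION_ID="14.04"\n'
debian_sample = 'NAME="Debian GNU/Linux"\nID=debian\nVERSION_ID="7"\n'

ubuntu_ok = is_supported(parse_os_release(ubuntu_sample))
debian_ok = is_supported(parse_os_release(debian_sample))
```

The check says nothing about whether the software would actually run on the unsupported system; it only encodes where the vendor's guarantee ends.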

But however inaptly ISVs advertise their Linux support, or however stupidly the Wikipedia explains this problem, a sane and stable Linux ABI would most probably help Linux adoption while not constraining developers too much, since the kernel-to-userspace API has been kept stable anyway. Designing and adhering to such a stable Linux ABI is NOT the TASK of the Linux kernel, but that of Linux distributions and ISVs. If more Linux end-users signal that they want such an ABI ... because they want to run commercial proprietary software on Linux (why else?) ... distributions and ISVs would have a bigger incentive to increase their common efforts. See the not free enough section.

What is POSIX?

- The POSIX API was created by the U.S. government solely for procurement purposes.

- U.S. federal procurement needed a way to legally specify the requirements for various sorts of calls for bids and contracts in a way that could be used to exclude systems to which a given existing code base would NOT be portable.

- So, for example, Microsoft's Windows NT was written with enough POSIX conformance to qualify for some government call for bids ... even though the POSIX subsystem was essentially useless in terms of practical portability and compatibility with UNIX® systems.

- The POSIX API was written ex post facto to describe a loosely similar set of competing systems

- POSIX was NOT designed by a bunch of computer scientists/software engineers as a clean and efficient ("orthogonal") kernel-to-userspace API!

- A programming language can be orthogonal (e.g. Python) and an instruction set can also be orthogonal (e.g. m68k);

in case a kernel-to-userspace API can be specified as "orthogonal" as well, POSIX ain't it!

- Because there already is an abundance of software written for this API, it makes sense to implement this API. The focus of the development of the Linux API (i.e. the Linux kernel's System Call Interface plus the GNU C Library) had been to provide the usable features of POSIX in a way which is reasonably compatible, robust and performant. Both, the Linux kernel and the GNU C Library have long since implemented the valuable POSIX features. At least since this was achieved, new facilities, i.e. system calls in the Linux kernel and subroutines around them in the GNU C Library, are being programmed and mainlined. Writing only POSIX-compliant software and ignoring when a software would profit from the additional Linux-only facilities is the way to go, if somebody wanted to avoid innovation!

- Various other standards for UNIX® have been written over the decades. Things like the SPEC1170 (specified eleven hundred and seventy function calls which had to be implemented compatibly) and various incarnations of the SUS (Single UNIX Specification).

- For the most part these "standards" have been inadequate to any practical technical application. They mostly exist for argumentation, legal wrangling and other dysfunctional reasons.

- POSIX – Inside: A case study presented at the "Proceedings of the 38th Hawaii International Conference on System Sciences" in 2005.

- The Linux Programming Interface – by Michael Kerrisk
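The trade-off described above, using a Linux-only facility where it helps while still running on plain POSIX systems, can be sketched roughly as follows. The function name `wait_readable` is made up for this example; `select.epoll` is Python's wrapper around the Linux-only epoll system calls, and `select.select` is the portable fallback.

```python
import select
import socket

def wait_readable(sock, timeout=1.0):
    """Wait until sock is readable; return (backend name, ready flag)."""
    if hasattr(select, "epoll"):
        # Linux-only facility, not part of POSIX.
        ep = select.epoll()
        try:
            ep.register(sock.fileno(), select.EPOLLIN)
            events = ep.poll(timeout)
            return "epoll", bool(events)
        finally:
            ep.close()
    # Portable fallback available on any POSIX system.
    readable, _, _ = select.select([sock], [], [], timeout)
    return "select", bool(readable)

a, b = socket.socketpair()
try:
    b.send(b"x")  # make the other end readable
    backend, ready = wait_readable(a)
finally:
    a.close()
    b.close()

print(backend, ready)
```

A program written this way is not restricted to POSIX, yet it does not depend on Linux either; whether the extra code path is worth it depends on the program.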

What is SUS (Single UNIX Specification)?

- The Single UNIX Specification (SUS) is a set of standards an OS has to meet to qualify for the brand name UNIX®.

How do POSIX and SUS (Single UNIX Specification) relate to one another?

The specifications described by POSIX and SUS are identical. Cf. "POSIX.1-2008 is simultaneously IEEE Std 1003.1™-2008 and The Open Group Technical Standard Base Specifications, Issue 7". Looking at the top of the Open Group Base Specifications, you will see the standard identifier IEEE Std 1003.1-2008. IEEE Std 1003.1 is what is known as POSIX.1, with 2008 just being the latest incarnation.

Basically, POSIX is just the name of the standard developed by the IEEE, with the initial version done in 1988. POSIX is not officially UNIX® simply because the IEEE does not own the trademark, but it is the operating system API and environment found on UNIX® systems. Later on, The Open Group, who own the UNIX® trademark, got involved with creating a standard identical with POSIX called "Single UNIX Specification" version 2 or SUSv2. Now these two specifications are developed jointly by the Austin Group. The latest version is SUSv4. So, to sum up: POSIX:2008 = IEEE Std. 1003.1-2008 = SUSv4 = The Open Group Specification Issue 7.
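Which POSIX.1 revision a given system claims to implement can be queried at run time. The sketch below assumes that Python's `os.sysconf` knows the name `SC_VERSION` on the system at hand (it guards against the case where it does not); the helper name `posix_version` is made up for this example. The value has the form YYYYMM, so a system claiming POSIX.1-2008 would report 200809.

```python
import os

def posix_version():
    """Return the POSIX.1 revision reported by the C library, or None."""
    if "SC_VERSION" not in os.sysconf_names:
        return None  # name not known on this platform
    return os.sysconf("SC_VERSION")

version = posix_version()
print(version)
```

Note that this is the revision the system claims to support; it is not a certificate of conformance and has nothing to do with the UNIX® trademark.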

UNIX®

UNIX® is a registered trademark. It is used for certified operating systems compliant with the Single UNIX Specification.

Unix-like

The article Unix-like was added to the Wikipedia, in addition to the articles POSIX, Single UNIX Specification and UNIX, to describe operating systems that are NOT SUS compliant... I don't mind calling Linux Unix-like, but an extra article is shit, because it adds unnecessary surface to spread more half-knowledge and bullshit... and indeed, if you read all four articles, you will probably learn less than if you read this rather un-encyclopedically written article in my user space...

UNIX® – not such a big deal, a look at OS X

OS X is an officially certified UNIX® operating system.[5] But OS X consists of considerably more components than just the parts that are (and can possibly be) UNIX-certified, e.g.:

- Cocoa (API), Quartz, Quartz Compositor & XQuartz, Core Audio, etc.

- Aqua,

- their new "Metal API" to replace/augment their crappy OpenGL implementation

- launchd, etc.

Because of these additional components, OS X has very little to nothing in common with other UNIX and Unix-like operating systems! And maybe because of these additional parts OS X is successful.

Looking at the success of OS X, should Linux kernel-based operating systems

- blindly stick to POSIX, and only POSIX, even invest money to get a UNIX® certification or rather feel free to introduce new system calls, where they make sense; the Linux kernel developers are quite meticulous about this.

- then NOT use these new system calls, because they are not, or not yet, in POSIX? Because e.g. systemd or Weston do exactly that, and were criticized for doing so... whether a program should be written to be POSIX-compatible depends on the program! E.g. Lennart Poettering has a strong opinion regarding this. He designed and authored PulseAudio, which is POSIX-compatible and also systemd, which is not (because it uses fanotify, which is not (yet) in POSIX).

- stick with X11 or develop Wayland and push for its adoption?

- stick with X11 or go for DRI and Glamor? even if the BSDs will take a while to adopt the new ways?

- stick with the-old-ways™ or develop and adopt software like NetworkManager, PulseAudio and PackageKit?

- stick with Netfilter or try to surpass even this with nftables?

- stick with initd or develop systemd? even if the BSDs criticize this?

- hope that OpenGL 5 will suffice or advocate to port Mantle to Linux?

Conclusion: there is way too much talk about UNIX (or Unix-like) in the Wikipedia. POSIX-compliance is an advantage, but not such a big deal, as it may appear by spending time in the Wikipedia.

Wanna see benchmarks of Linux against OS X? Here you go: Ubuntu With Linux 3.16 Smashes OS X 10.9.4 On The MacBook Air

Mainlining your device driver in Linux kernel

For example, products of Atheros, then Qualcomm Atheros, now Qualcomm Atheros-Wilocity, and products using these products, gained a competitive advantage because of the GNU General Public License (GPL)! The GPL-licensed Linux kernel and, the Linux kernel being a monolithic kernel, the device drivers for it allowed somebody to FORCE Linksys to publish the entire source code of their firmware, cf. Linksys WRT54G series#Third-party firmware projects. This made OpenWrt possible, and OpenWrt and its derivatives give ICs for which open-source drivers are available (cf. Comparison of open-source wireless drivers) an advantage over products for which no such drivers are available...

Such a competitive advantage exists, but how big it is depends of course on many other factors. After OpenWrt came Android, another Linux kernel-based OS. And Android has become very successful: during Q2 2013, 79.3% of smartphones sold worldwide were running Android.[6]

Smartphones and other current Snapdragon-based products, most probably do profit from long-since mainlined – therefore well-tested and adapted by the ongoing Linux kernel development – device drivers:

- there is a profit simply because of the mere existence of the code. Anything Linux kernel-based, automatically supports these products, extensively and stably.

- additionally, products gain a competitive advantage over products, which do not come with such Linux kernel-mainlined device drivers

When Qualcomm bought Wilocity in July 2014, they not only paid for the company (i.e. the patents it holds and the know-how in the heads of the employees), but knowingly or unknowingly also for the mainlined device drivers for their IEEE 802.11ad (60 GHz) SIP! When Snapdragon 810-based products hit the market in 2015, the device drivers for this SIP will have been well-tested and adapted in the Linux kernel mainline. There will also be some know-how and experience in the heads of the people who have dealt with its device drivers, so no teething problems when products ship.

Now Snapdragon is the brand for an entire family of SoCs (system on a chip), they combine a gazillion of SIP blocks on the die. Most of the Snapdragon-SIP seems to have support in Linux kernel mainline: at least there is for their Adreno (started by Rob Clark, who was barred from writing such a FOSS driver by NDA for other products... hahaha), for the Atheros IEEE 802.11- and Bluetooth-stuff, and now for the Wilocity 60 GHz-stuff.

2014-07-14 Luc Verhaegen: http://libv.livejournal.com/26635.html

- "Would a free and open-source graphics device driver for Mali give greater value to the shareholders of ARM Holdings?"

- Yes, free drivers would mean greater value to shareholders.

- Original design manufacturer (ODM) satisfaction: open source drivers, when done right and with sufficient support (which is still cheap compared to the binary only route), do severely reduce the integration and legal overhead for device makers and SoC vendors. The ability to report issues directly and openly, and immediately profiting from advances or fixes from the rest of the community does severely reduce the overhead of bringing up new platforms or new devices.

- Determined partners can just hire somebody to implement what they really need.

- Alternative uses of ARM SoCs (like the Raspberry Pi is showing us, and like the OSHW boards from olimex) are becoming more important. These development boards allow a wide array of projects, and will spearhead all new developments, perhaps opening up whole new markets. Binary only drivers currently severely limit the feasibility or practicality of such projects.

- Even Nvidia takes another route with the ARM-based Tegra K1. Customers get less overhead by not choosing Mali as the GPU.

- "With Broadcom now doing a proper free and open-source graphics device driver for their VideoCore by hiring Eric Anholt, the question really becomes: Does ARMs lack of free drivers hurt shareholder value?"[7]

- ARM is going to try to muscle in on the server market with their 64bit processors. Lack of open source drivers will be holding this platform back, just like it did for AMD back when it bought ATI.

Mr Davies has repeatedly rejected the idea of an open source driver for the Mali, even though my information is that the engineers in his department tend to not agree with him on this.

Competing hardware developers

Products directly competing with Snapdragon are designed by Broadcom, MediaTek, Samsung, Intel, etc. AFAIK it is next to impossible for such SIP competitors to gain any advantage by studying the source code of the device drivers. Even if one published documentation intended to help third parties write device drivers, as e.g. AMD did for their graphics cards, that would most probably not give the competition any advantage. The documentation required to write device drivers is very different from the hardware description language source of the SIP.

So there is a gain, but little loss, if there is a loss at all.

How big this gain is depends on multiple factors. The most prominent one, but by far not the only one, is the market share of other products based on these products. If Linux desktop systems have a market share of 1%, the gain from market share is small. If Android, a Linux kernel-based OS for mobile systems, achieves 80% market share of products sold worldwide in a particular month, the gain from the market share of the Linux kernel (and the device drivers it contains) is substantial!

How to work with the Linux kernel development process

- See also How to work with the kernel development process by Jonathan Corbet

- The Kernel Report by Jonathan Corbet at LCA2012 on YouTube

| “ | As you can see in these posts, MediaTek Ralink is sending patches for the upstream rt2x00 device driver for their new chipsets, and not just dumping a huge, stand-alone tarball driver on the community, as they have done in the past. This shows a huge willingness to learn how to deal with the kernel community, and they should be strongly encouraged and praised for this major change in attitude. | ” |

| — Greg Kroah-Hartman on 2011-02-09, here | ||

Not just open-source but an open platform

Apple's hardware-plus-operating-system combo products have been a "walled garden" since forever. Windows is in the process of becoming one, and it is losing its unique selling proposition and mechanism for vendor lock-in in the gaming market, the abstraction APIs Direct3D, XAudio2 and XInput, to competing abstraction APIs such as OpenGL, OpenGL ES, Mantle, SDL, OpenAL, etc.

A walled garden offers a certain range of advantages, especially for its provider, but the disadvantages for its end-users and for independent software vendors (ISVs) alike outweigh these! A walled garden can compete with other walled gardens, to the disadvantage of its users, but it cannot successfully compete with an established open platform if the walled garden lacks certain unique selling propositions. Also, the more walled gardens there are competing with one another, the easier it should be for the open platform to take it all.

Linux distributions are ... not free enough!

If somebody bothered to analyze the situation objectively, they would notice that Linux distributions have several features of walled gardens; see Ingo Molnár's arguments and also the #API versus ABI section here. Asked why Linux hasn’t been adopted as a mainstream desktop operating system, Ingo Molnár said in March 2012:

| “ | The basic failure of Linux is that it's, perversely, not free enough... | ” |

| — Ingo Molnár, What ails the Linux desktop? Part I. What ails the Linux desktop? Part II. | ||

In short, Ingo Molnár suggested that bothering to maintain 20,000 packages while not bothering to maintain a stable ABI results in a less free platform than maintaining only 1000 "core" packages but offering a stable ABI would. Offering this kind of freedom is what has made iOS and Android so successful on smartphones!

So why not adopt Android then as desktop OS?

- Because Android was not designed as a desktop OS! Being aware of that, Google tries to combine Android with Chrome OS on their Chromebooks...

- KDE Plasma 5 (GNOME 4?) provides a superior workflow (see cognitive ergonomics) on desktop computers, and a comparable workflow on tablets and maybe also on smartphones

- Sandboxing: cgroups/kdbus-based sandboxing will provide serious security in combination with resource management!

- Android's "no GPL or even LGPL in user-space" is scumbag-ish; seeing that OpenWrt uses uClibc and runs quite well on 32MiB of RAM e.g. on the Ben NanoNote[8] makes Google's claims, that libbionic has technical reasons and is not only about the f*cking license, rather questionable. However the problem with libbionic is not the license, but the fact that it does not aim to be compatible with glibc or uClibc and artificially and willingly introduced a serious incompatibility to the Linux kernel-based family of operating systems. So somebody felt obliged to write libhybris to work around this. oFono (the telephony stack adopted by Tizen and Ubuntu Touch) and BlueZ are both GPLv2 and superior to Android-solutions

- Android is (said to be) short time to market abandon-ware, that is regularly updated. Android is (said to be) not open-source development.

GUIs, graphical shells and windowing systems

Any GUI is built with graphical control elements (confusingly named "widgets"). GTK+, Qt, FLTK, GNUstep, wxWidgets, etc. contain graphical control elements and also include a rendering engine to render the GUI … and some back-end to a couple of windowing systems … and maybe more stuff.

The Linux desktop on X11

- KDE 3 was a fully usable desktop environment, as was GNOME 2.x. Both were long since replaced.

- Use KDE Plasma 4 on X.Org Server+KWin and the rest of KDE SC 4 and 3rd party applications

- Use GNOME Shell 3.x on Mutter+X.Org Server and the rest of GNOME and 3rd party applications

- Try Cinnamon, Unity, LXDE, Xfce, Pantheon, etc.

The Linux desktop on Wayland

- KDE Plasma 5 on KWin (includes Wayland & XWayland) with KDE Applications 5 based on KDE Frameworks 5

- GNOME Shell ≥3.14 on Mutter (includes Wayland & XWayland)

- Hawaii

- Enlightenment

- …

The Linux desktop, still (or again) work-in-progress

- As of July 2014 Wayland is still not complete and being worked on!

- KDE Plasma 5 is out, but still needs to be ported to Wayland

- While Plasma 5.0 was finally released July 2014, GNOME still remains in the re-design phase that started with GNOME 3.0 in February 2011 and will hopefully be done with the release of GNOME 4.0 and GTK+ 4.0, maybe after GNOME 3.16 (April 2015) or GNOME 3.18 (September 2015)! Mutter/GNOME Shell picked up partial Wayland support in 3.12 (April 2014) and hopefully full Wayland support in 3.14 (September 2014).

GNOME 3 and the chubby-finger-friendly GNOME Shell with the chubby-finger-friendly graphical control elements in GTK+ are nice. GNOME Shell, the graphical shell for butterfingers, makes my laser-mouse laugh. People who have "lasers and shit" on their desktop, don't want to run software designed for chubby-fingers.

I conclude that the Linux desktop that can successfully compete with OS X and Windows is NOT DONE YET. It is still a work-in-progress. BUT it is being worked on. Some of the issues ailing the Linux desktop were due to X11. Now that X11 will finally be replaced with Wayland, all of these issues will vanish, and with them the excuses to not deliver excellence.

The non-graphical software for Linux has been superior to the OS X and Windows competition since forever. Bash is superior to the Windows PowerShell and there are many more user-support forums for it. Employ Bash with Tilda, Guake, or Yakuake! Compare netfilter, nftables and the fully configurable network scheduler to whatever OS X or Windows have to offer in the networking field.

User interface: comfort level vs productivity

- The "comfort level" of end-users and developers with the graphical shell and the GUI of programs depends on their "familiarity" with it!

- Their "productivity" using it, depends mainly on them getting used to using it!

This is yet another chicken or the egg-dilemma: to make new users start using GNOME, the GNOME GUI needs to either be very similar to the GUI they have been used to, or needs to be one that is intuitive. If they keep using it, they gain both, familiarity with it and productivity using it. If they put it away, they miss out on both!

Popular cross-platform software such as VLC media player and Mozilla Firefox – two wide-spread non-GNOME programs – ease the pain of switching from Windows to Linux with GNOME or KDE. They have the same key-bindings.

- Any GUI needs to be LTS (long-term-stable), in stark contrast to the entire GNOME 3.x life-cycle.

- Any GUI should be based on some clean conceptual design. E.g. GNOME Shell and GTK+ 3.x do: they primarily target input via chubby-fingers multi-touch-input and output on small tablet screens! Is it possible to target keyboard+mouse on big screens with the same GUI? Cocoa Touch and Ubuntu Touch and KDE Plasma 4 and KDE Plasma 5 and Cinnamon all say NOOOOO, it is not possible! There should be two distinct graphical shells, one for touch-input and tablet screen sizes, and another one for keyboard+mouse and big screen sizes.

The kind of end-user who hardly cares about what sub-systems there are under the hood, or even how they interact with one another, is most probably not going to run GNOME 3.x for a long time, and he won't be an early adopter of GNOME 4.0. I have been running "chubby-fingers" GNOME 3.x with a laser mouse on a big screen, mainly because I know a bit about the stuff under the hood, and I am looking forward to GNOME 4.x/GTK+ 4.x!

I could imagine the same being true for other users of GNOME 3.0 to 3.14. The problem I have with that is that it further delays the global dominance of Linux kernel-based operating systems.

Sound system on Linux

- Professional audio and desktop audio on Linux are currently two completely separate worlds. The only common infrastructure seems to be ALSA, but in a different configuration (no dmix!). Apple has shown with Core Audio that a unified sound system for both desktop and professional use is achievable. How well is Core Audio suited for developing and playing video games?

- Professional audio on Linux has a low-latency kernel, well defined, accepted APIs for pro audio, such as JACK Audio Connection Kit for interconnection or LADSPA/LV2 for plugins

- JACK is a sound server targeting professional audio

| “ | PulseAudio is a sound server that plays a major role in the non-professional Linux audio system. It provides basic mixing functionality, per-application volumes, moving streams between devices during playback, positional event sounds (i.e. click on the left side of the screen, have the sound event come out through the left speakers), secure session-switching support, monitoring of sound playback levels, rescuing playback streams to other audio devices on hot unplug, automatic hotplug configuration, automatic up/downmixing stereo/surround, high-quality re-sampling, network transparency, sound effects, simultaneous output to multiple sound devices are all features PA provides right now, and what you don't get without it. It also provides the infrastructure for upcoming features like volume-follows-focus, automatic attenuation of music on signal on VoIP stream, UPnP media renderer support, Apple RAOP support, Sound server support, mixing/volume adjustments with dynamic range compression, adaptive volume of event sounds based on the volume of music streams, jack sensing, switching between stereo/surround/spdif during runtime | ” |

| — Lennart Poettering in June 2008, PulseAudio FUD | ||
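
The "positional event sounds" feature mentioned in the quote can be illustrated with a small sketch. This is not PulseAudio's implementation; it merely assumes a constant-power pan law, and the function name `pan_gains` and its parameters are made up for this illustration.

```python
import math
from typing import Tuple


def pan_gains(click_x: float, screen_width: float) -> Tuple[float, float]:
    """Return (left_gain, right_gain) for an event at horizontal position click_x."""
    # Normalise the position to 0.0 (left edge) .. 1.0 (right edge), clamped.
    pos = min(max(click_x / screen_width, 0.0), 1.0)
    # Constant-power pan law (an assumption of this sketch):
    # the gains lie on a quarter circle, so left**2 + right**2 == 1.
    angle = pos * math.pi / 2
    return math.cos(angle), math.sin(angle)


if __name__ == "__main__":
    # A click near the left edge of a 1920 px wide screen is mostly "left".
    print(pan_gains(100, 1920))
```

A click at the far left yields all of the signal on the left channel, a click in the middle yields equal gains on both channels.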

| “ | Many people (like Jeffrey) wonder why have software mixing at all if you have hardware mixing? The thing is, hardware mixing is a thing of the past, modern sound cards don't do mixing in hardware anymore. Precisely for doing things like mixing in software SIMD CPU extensions like SSE have been invented. Modern sound cards these days are kind of "dumbed" down, high-quality (componentry with high SNR) DACs. They don't do mixing anymore, many modern chips don't even do volume control anymore. Remember the days where having a Wavetable chip, like e.g. Yamaha YM3812 or the Ensoniq ES-5506 OTTO, the was a killer feature of a sound card? Those days are gone, today wavetable synthesizing is done almost exclusively in software -- and that's exactly what happened to hardware mixing too. And it is good that way. In software mixing is is much easier to do fancier stuff like DRC which will increase quality of mixing. And modern CPUs provide all the necessary SIMD command sets to implement this efficiently. | ” |

| — Lennart Poettering in June 2008, PulseAudio FUD | ||
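
What "mixing in software" boils down to can be sketched in a few lines: add the samples of two streams, apply a per-stream volume, and clamp the result to the sample range. The following is only a minimal illustration in plain Python, assuming signed 16-bit PCM; the function name `mix_s16` is hypothetical, and a real sound server would use SIMD-optimised code and would typically also handle re-sampling.

```python
from array import array

INT16_MIN, INT16_MAX = -32768, 32767


def mix_s16(a, b, gain_a=1.0, gain_b=1.0):
    """Mix two sequences of signed 16-bit samples with per-stream volume."""
    n = max(len(a), len(b))
    out = array("h", [0] * n)
    for i in range(n):
        # A stream that has already ended contributes silence.
        sa = a[i] if i < len(a) else 0
        sb = b[i] if i < len(b) else 0
        mixed = int(round(sa * gain_a + sb * gain_b))
        # Saturate instead of wrapping around, to avoid harsh overflow artefacts.
        out[i] = max(INT16_MIN, min(INT16_MAX, mixed))
    return out


if __name__ == "__main__":
    print(mix_s16([1000, 30000, -30000], [2000, 30000, -30000]).tolist())
```

The per-stream gains correspond to the "per-application volumes" a sound server offers; the clamping step is where fancier processing such as DRC would come in.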

- At the Audio MC one thing became very clear: it is very difficult for programmers to figure out which audio API to use for which purpose and which API not to use when doing audio programming on Linux. From June 2008: A Guide Through The Linux Sound API Jungle with Wikipedia links:

- I want to write a media-player-like application!

- GStreamer is used e.g. by Category:Software that uses GStreamer

- I want to add event sounds to my application!

- Use libcanberra, install your sound files according to the XDG Sound Theme and Naming Specifications!!! libcanberra is an abstraction API for desktop event sounds available on top of libALSA and on top of PulseAudio. libcanberra is a total replacement for the "PulseAudio sample cache API" and libsydney is a total replacement for the "PulseAudio streaming API";

- I want to do professional audio programming, hard-disk recording, music synthesizing, MIDI interfacing!

- Use JACK and/or the full ALSA interface.

- I want to do basic PCM audio playback/capturing!

- Use the safe ALSA subset.

- I want to add sound to my game!

- Use the Audio API of SDL for full-screen games, libcanberra for simple games with standard UIs such as GTK+.

- I want to write a mixer application!

- Use the layer you want to support directly: if you want to support enhanced desktop software mixers, use the PulseAudio volume control APIs. If you want to support hardware mixers, use the ALSA mixer APIs.

- I want to write audio software for the plumbing layer!

- Use the full ALSA stack.

- I want to write audio software for embedded applications!

- For technical appliances usually the safe ALSA subset is a good choice, this however depends highly on your use-case.

- Abstraction APIs for video game development: Audio API of SDL, OpenAL, PortAudio, libao, CSL (http://fastlabinc.com/CSL/), libsydney (libsydney intro, libsydney git)
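
The guide above is essentially a lookup table from use case to recommended API. As a rough sketch, it could be written down like this; the dictionary keys and the function name `recommend` are made up for this illustration, while the recommendations are the ones listed above.

```python
# Use case -> recommended audio API, as summarised in the guide above.
RECOMMENDED_AUDIO_API = {
    "media player": "GStreamer",
    "event sounds": "libcanberra",
    "professional audio": "JACK",
    "basic pcm playback/capturing": "safe ALSA subset",
    "full-screen game": "Audio API of SDL",
    "simple game with standard ui": "libcanberra",
    "software mixer application": "PulseAudio volume control APIs",
    "hardware mixer application": "ALSA mixer APIs",
    "plumbing layer": "full ALSA stack",
    "embedded application": "safe ALSA subset",
}


def recommend(use_case):
    """Return the recommended API for a use case (case-insensitive)."""
    key = use_case.strip().lower()
    if key not in RECOMMENDED_AUDIO_API:
        known = ", ".join(sorted(RECOMMENDED_AUDIO_API))
        raise ValueError("unknown use case %r, known: %s" % (use_case, known))
    return RECOMMENDED_AUDIO_API[key]


if __name__ == "__main__":
    print(recommend("Event sounds"))
```

For embedded applications the guide itself adds that the choice depends highly on the use case, so the table entry is only a default.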

"ALSA is the Linux API for doing PCM playback and recording. ALSA is very focused on hardware devices, although other backends are supported as well (to a limit degree, see below). ALSA as a name is used both for the Linux audio kernel drivers and a user-space library libALSA that wraps these. libALSA is comprehensive, and portable (to a limited degree). The full ALSA API can appear very complex and is large. However it supports almost everything modern sound hardware can provide. Some of the functionality of the ALSA API is limited in its use to actual hardware devices supported by the Linux kernel (in contrast to software sound servers and sound drivers implemented in user-space such as those for Bluetooth and FireWire audio -- among others) and Linux specific drivers"

Linux as a gaming platform


- A stable Linux ABI would be nice, so games don't just run on Ubuntu (coerces Unity) or just on Debian or just on SteamOS.

- a well designed rendering API (OpenGL, Mantle?) and drivers implementing this; Nvidia looks good on Linux! AMD struggles with quality, Intel and Mesa 3D with the supported OpenGL version.

- Note that DirectX comprises Direct3D for rendering, XAudio2 for sound and XInput for input. I am not sure whether OpenGL + Simple DirectMedia Layer is at least as good as DirectX 11/12!

Choosing between portability and innovation

- Also see Choosing between portability and innovation on LWN.net from March 2, 2011

The BSDs don't mind if their code gets adopted by 3rd parties, e.g. at least XNU, JunOS, PlayStation 3 OS or PlayStation 4 OS, maybe more. It is unknown to me how much Apple, Juniper Networks or Sony contributed back to the BSD communities in return for adopting their code. Maybe some of the programmers got a consulting job, but the programmers who didn't, and the community, i.e. the people who test the software, write bug reports and maybe patches, what did they get back? (... so what's the point of the BSD Licenses?)

In case the BSD communities don't mind corporations adopting their code and then contributing nothing back, that is fine with me. They are all grown-up people who know what they are doing, and they all willingly chose those non-copyleft licenses. What bothers me, though, is when the same people try to keep Linux kernel-based operating systems from advancing. The licenses are legally compatible; they can simply take the GPL'ed code and adopt it. They don't want to, they want only BSD-licensed code. Why? Seriously because it is "more free"? What is the benefit for most of the end-users?

- If the GPL is not "free enough", that is THEIR problem (well, and the problem of 3rd parties who would like to just take all that well-tested code, develop it further as proprietary software, and then compete with them...).

- If they don't have enough developers to keep up with the development of the Linux kernel, that is THEIR problem. The Linux kernel, even though or maybe because it is under the "less free" GPLv2, has gained very wide adoption in the industry. I am little interested in most of what comes out of Richard Stallman's mouth, and even less interested in the "less free"/"more free" arguments, which are just slogans that thwart the marketing blunders that come out of Richard Stallman's mouth.

- Example: NetBSD 7.0 Will Finally Have DRM/KMS Drivers = DRM/KMS drivers have been ported several times independently to the BSDs. Seems like they don't cooperate too much among themselves.

Looking at game theory and human behavior as well as business models for free and open-source software, there seems to be a preference, even an advantage, for kernels under the GPL, device drivers under the MIT License, and user-space software under various licenses including proprietary software. id Software GPL'ed their game engines but kept the entire game data proprietary; no wonder, looking at the man-hours necessary to create that data: level design, meshes, textures and sounds.

In this article-wanna-be I tried to present some technical reasons why Linux is not so widespread on desktop computers. Some people™ were grumpy after udev got into the Linux kernel, and user-space software started to make use of it. Again, some people™ were grumpy that systemd or Weston are Linux-only. Some people™ were grumpy when GNOME discussed using logind. Should kdbus some day make it into the kernel, again, some people™ will be grumpy...

The real competitors of Linux are Microsoft Windows, OS X & iOS and maybe even Oracle Solaris! Neither OpenSolaris nor the BSDs are competitors.

- competition for money for development (most of the 6,000? professional Linux kernel developers are paid by the industry to push its development on!)

- competition for users who write bug reports, users who write patches, users who test test test

- competition for users active in diverse forums, providing some support

- competition for installed base, so hardware vendors have an incentive to provide (proprietary OR open-source) device drivers. (Nvidia's proprietary graphics device drivers are GOOD for their customers, i.e. for end-users, even if Nvidia are criticized for their stance; Atheros' FOSS drivers are obviously even better for them and for their customers, and probably their success made Broadcom finally provide FOSS drivers as well. More hardware vendors could follow...)

In case we are too considerate of the BSDs or OpenSolaris, we may lose points to these actual competitors. So let's continue to cooperate with the BSDs and with OpenSolaris, without being too considerate of their much smaller user bases and license issues and whatever other problems they may have.

- Apple could have adopted the Linux kernel instead of XNU. They didn't, they adopted FreeBSD. With their Quartz and Cocoa and bla they would still have had decisive advantages over the shoddy X11. While XNU is kept open-source and Darwin (operating system) uses it, I understand that it does not work well without the device drivers, which are all proprietary. Good news: Linux, Mesa 3D and SteamOS made it without them, but it took quite a while longer.

- Sun could have chosen the GPL/LGPL/MIT License/BSD License, instead they wrote their own Common Development and Distribution License (CDDL) from scratch and wrote it to be incompatible with the GPL on purpose. Back then, Sun competed with Solaris against Linux. Putting Solaris under the GPL, would have of course benefited both. I guess they were afraid, that the Linux kernel having more resources, it would have gained much more from such a move. A comprehensible strategic decision from the stand-point of fierce competition. Now Sun ain't no more, and Oracle rather makes money by directly competing with Red Hat by adopting a lot of RHEL-code while contributing only little code back, even though Linux kernel is under GPL. I wonder how a market would look with non-copyleft licenses... really better for the end-customer? Without CDDL, we would have had ZFS as Linux kernel module back in 2008 then instead of in the year 2015 and maybe some other nice stuff, forearming Linux better against the real competition.

- Microsoft further collects unique selling propositions:

- Category:Windows-only software: Microsoft Visual Studio, Autodesk 3ds Max, CATIA, Creo Elements/Pro (remember how CAD companies kept OpenGL hostage for a decade?). For Linux there is only OpenGL, while for Microsoft Windows there additionally is Direct3D, and now – thanks to AMD – also Mantle.

- Hell, there even is a lot of Category:Windows-only free software! But in case netsniff-ng, systemd or Weston are Linux-only to gain a technical advantage not a political one, some people™ make it stink... well, what are their actual arguments? How many people really care, if something also runs on the BSDs or on OpenSolaris?

- Blu-Ray: AFAIK, as of June 2014, there is NO proprietary software I can purchase for money to watch a Blu-Ray on Linux. And this has nothing to do with what comes out of Richard Stallman's mouth. It's Hollywood: they want me to rip their stuff after buying it, before I can comfortably watch it on Linux. ;-)

- Netflix: I don't care, but if I did, I would have to jump through some hoops to make it accessible from Linux. Why? Is the installed base considered too low?

- Standards and APIs: DirectX video games, and of course Microsoft's other cash cow: MS Office. They authored the "Office Open XML" specification, they got it accepted as a "standard", and look how diligent some Wikipedia contributors have been: List of software that supports Office Open XML. There is no such page for the standard OpenDocument... Bother to write one, and some of the shit-heads will put stones in your way...

I don't dislike the BSD people, and I do not know, whether it is really them who make it stink when another software is written Linux-only. I don't care about them. I care about Linux kernel-based stuff, and look at the actual competition. And looking at the unique selling propositions available for Windows and at all the Category:Windows-only free software, maybe more Linux-only software wouldn't be that bad for Linux's stance against this competition. Don't write Linux-only software for political reasons, write it for technical reasons. E.g. there are very good and very sane technical reasons, to base GNOME on logind. And it is the BSDs, who stand in the way. Being too considerate of their problems, keeps us back.

Not adopting logind

- leaves good code unused

- prevents users from actually testing that code, because it is less used...

- increases the burden of the GNOME developers,

- lessens the "user experience" when using GNOME on Linux

The reasons for not adopting logind ASAP, are all political not technical. Can Linux really afford this?

Currently I am running GNOME 3 with GNOME Shell, even though it has been designed by chubby finger designers for chubby finger users. It has not increased my productivity. Especially GNOME Contacts, see File:GNOME Contacts 3.11.92.png has an absolutely brain-dead design, I'd rather see all fields and use the Tab ↹ to switch between them, than use that chubby fingers™-design to enter data. I use it, because I want Wayland and logind and all the other stuff, that is not available on GTK+2-based DEs. I use it, because there is no good alternative. The Cinnamon-Design is what I rather want, but they don't keep up with GTK+/GLib development.

GNOME should have adopted KDE's decision to provide KDE Plasma Workspaces. Is lack of manpower really the reason they did not, or was it something else?

Online distribution

Apple's actual workhorse is its online distribution software for iOS: the App Store. The App Store makes it fairly easy to monetize programming efforts, even books. All the software developers who use it for lack of a better software distribution channel might swallow Apple's proprietary Metal API along the way. The Metal API augments the walled-garden features of iOS with vendor lock-in. Looking at the crappy OpenGL implementation on OS X, even serious video game developers might swallow Metal; convince yourselves: Ubuntu With Linux 3.16 Smashes OS X 10.9.4 On The MacBook Air. Back in the day, Microsoft spread FUD about the support for OpenGL in Windows Vista with the intention to weaken OpenGL, see Why you should use OpenGL and not Direct3D!

- Google Play is the most successful competitor: File:World Wide Smartphone Sales.png, File:World Wide Smartphone Sales Share.png. Google Play is Android-only, it is not available for Debian, Fedora or SteamOS.

Do we need more successful online distribution platforms? More competition will definitely not hurt the end-user! And this is another point: what is there for plain Linux? AFAIK, there is

Instead of trying to compete with Red Hat (the systemd vs. Upstart-crap in Debian, Mir (software) vs. Wayland, etc.), Canonical SHOULD HAVE concentrated on developing and establishing another such online distribution platform! Sadly, they rather play stupid useless crap.

Reference

- ^ "ControlGroupInterface". freedesktop.org.

- ^ "libevdev". freedesktop.org.

- ^ "Android Nears 80% Market Share In Global Smartphone Shipments".

- ^ "Ubuntu Linux Gaming Performance Mostly On Par With Windows 8.1". Phoronix. 2013-10-23.

- ^ Apple Inc. - UNIX 03 Register of Certified Products, The Open Group

- ^ "Android Nears 80% Market Share In Global Smartphone Shipments".

- ^ "Would a free and open-source graphics device driver for Mali give greater value to the shareholders of ARM Holdings?". AnandTech. 2014-07-04.

- ^ "comparing OpenWrt to Android and Tizen". http://wiki.openwrt.org/doc/techref/architecture