User:Homayoun mh/sandbox

Functional (mathematics)

Local vs non-local

If a functional's value can be computed for small segments of the input curve and then summed to find the total value, the functional is called local. Otherwise it is called non-local. For example:

$$F(y) = \int_{x_0}^{x_1} y(x)\,\mathrm{d}x$$

is local while

$$F(y) = \frac{\int_{x_0}^{x_1} y(x)\,\mathrm{d}x}{\int_{x_0}^{x_1} \left(1 + [y(x)]^2\right)\mathrm{d}x}$$

is non-local. This commonly occurs when integrals appear separately in the numerator and denominator of an expression, as in calculations of center of mass.
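The distinction can be checked numerically. The sketch below is only an illustration: it assumes a simple integral as the local functional, a ratio of two integrals as the non-local one, and an arbitrary sample curve y(x) = x²; the helper names are hypothetical.

```python
def trapezoid(f, a, b, n=1000):
    """Composite trapezoidal rule for the integral of f over [a, b]."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h


def local_functional(y, a, b):
    """F[y] = integral of y(x) dx, which is additive over sub-intervals."""
    return trapezoid(y, a, b)


def nonlocal_functional(y, a, b):
    """G[y] = (integral of y dx) / (integral of (1 + y^2) dx)."""
    return trapezoid(y, a, b) / trapezoid(lambda x: 1.0 + y(x) ** 2, a, b)


def y(x):
    """Sample input curve (an arbitrary choice for the illustration)."""
    return x * x


# Evaluate each functional on [0, 1] and on the two halves of that interval.
whole_local = local_functional(y, 0.0, 1.0)
parts_local = local_functional(y, 0.0, 0.5) + local_functional(y, 0.5, 1.0)

whole_nonlocal = nonlocal_functional(y, 0.0, 1.0)
parts_nonlocal = nonlocal_functional(y, 0.0, 0.5) + nonlocal_functional(y, 0.5, 1.0)

print(whole_local, parts_local)        # the two values agree
print(whole_nonlocal, parts_nonlocal)  # the two values differ
```

Summing the segment values reproduces the local functional but not the ratio, which is what makes the latter non-local.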

Linear functionals

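Linear functionals first appeared in functional analysis, the study of vector spaces of functions. A typical example of a linear functional is integration: the linear transformation defined by the Riemann integral

$$I(f) = \int_a^b f(x)\,\mathrm{d}x$$

is a linear functional from the vector space C[a,b] of continuous functions on the interval [a, b] to the real numbers. The linearity of I(f) follows from the standard facts about the integral:

$$I(f+g) = \int_a^b [f(x)+g(x)]\,\mathrm{d}x = \int_a^b f(x)\,\mathrm{d}x + \int_a^b g(x)\,\mathrm{d}x = I(f) + I(g),$$

$$I(\alpha f) = \int_a^b \alpha f(x)\,\mathrm{d}x = \alpha\int_a^b f(x)\,\mathrm{d}x = \alpha I(f).$$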

Functional derivative

The functional derivative is defined first; then the functional differential is defined in terms of the functional derivative.

Functional derivative

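Given a manifold M representing (continuous/smooth/with certain boundary conditions/etc.) functions ρ and a functional F defined as

$$F\colon M \to \mathbb{R} \quad \text{or} \quad F\colon M \to \mathbb{C},$$

the functional derivative of F[ρ], denoted δF/δρ, is defined by[1]

$$\int \frac{\delta F}{\delta\rho}(x)\,\phi(x)\,\mathrm{d}x = \lim_{\varepsilon\to 0}\frac{F[\rho+\varepsilon\phi]-F[\rho]}{\varepsilon} = \left[\frac{\mathrm{d}}{\mathrm{d}\varepsilon}F[\rho+\varepsilon\phi]\right]_{\varepsilon=0},$$

where ϕ is an arbitrary function. The quantity εϕ is called the variation of ρ.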

Functional differential

The differential (or variation or first variation) of the functional F[ρ] is,[2] [Note 1]

$$\delta F = \int \frac{\delta F}{\delta\rho(x)}\,\delta\rho(x)\,\mathrm{d}x,$$

where δρ(x) = εϕ(x) is the variation of ρ(x). This is similar in form to the total differential of a function F(ρ1, ρ2, ..., ρn),

$$\mathrm{d}F = \sum_{i=1}^{n}\frac{\partial F}{\partial\rho_i}\,\mathrm{d}\rho_i,$$

where ρ1, ρ2, ... , ρn are independent variables. Comparing the last two equations, the functional derivative δF/δρ(x) has a role similar to that of the partial derivative ∂F/∂ρi , where the variable of integration x is like a continuous version of the summation index i.[3]

Properties

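Like the derivative of a function, the functional derivative satisfies the following properties, where F[ρ] and G[ρ] are functionals:

- Linear:[4]

$$\frac{\delta(\lambda F+\mu G)[\rho]}{\delta\rho(x)} = \lambda\frac{\delta F[\rho]}{\delta\rho(x)} + \mu\frac{\delta G[\rho]}{\delta\rho(x)},$$

where λ and μ are constants.

- Product rule:[5]

$$\frac{\delta(FG)[\rho]}{\delta\rho(x)} = \frac{\delta F[\rho]}{\delta\rho(x)}\,G[\rho] + F[\rho]\,\frac{\delta G[\rho]}{\delta\rho(x)}.$$

- Chain rules: if F is a functional and G is another functional, then

$$\frac{\delta F[G[\rho]]}{\delta\rho(y)} = \int \mathrm{d}x\,\left.\frac{\delta F[G]}{\delta G(x)}\right|_{G=G[\rho]}\frac{\delta G[\rho](x)}{\delta\rho(y)}.$$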

Lemmas

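For a functional of the form F[ρ] = ∫ f(r, ρ(r), ∇ρ(r)) dr, the functional derivative is

$$\frac{\delta F}{\delta\rho(\mathbf r)} = \frac{\partial f}{\partial\rho} - \nabla\cdot\frac{\partial f}{\partial\nabla\rho},$$

where ρ = ρ(r) and f = f (r, ρ, ∇ρ). This formula applies only to functionals of the form F[ρ] given above. For other functional forms, the definition of the functional derivative can be used as the starting point for its determination. (See the example Coulomb potential energy functional.)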

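Proof: given a functional

$$F[\rho] = \int f(\mathbf r, \rho(\mathbf r), \nabla\rho(\mathbf r))\,\mathrm{d}\mathbf r$$

and a function ϕ(r) that vanishes on the boundary of the region of integration, the definition given in a previous section yields

$$\begin{aligned}
\int \frac{\delta F}{\delta\rho(\mathbf r)}\,\phi(\mathbf r)\,\mathrm{d}\mathbf r
&= \left[\frac{\mathrm{d}}{\mathrm{d}\varepsilon}\int f(\mathbf r, \rho+\varepsilon\phi, \nabla\rho+\varepsilon\nabla\phi)\,\mathrm{d}\mathbf r\right]_{\varepsilon=0} \\
&= \int \left(\frac{\partial f}{\partial\rho}\,\phi + \frac{\partial f}{\partial\nabla\rho}\cdot\nabla\phi\right)\mathrm{d}\mathbf r \\
&= \int \left[\frac{\partial f}{\partial\rho}\,\phi + \nabla\cdot\left(\frac{\partial f}{\partial\nabla\rho}\,\phi\right) - \left(\nabla\cdot\frac{\partial f}{\partial\nabla\rho}\right)\phi\right]\mathrm{d}\mathbf r \\
&= \int \left[\frac{\partial f}{\partial\rho}\,\phi - \left(\nabla\cdot\frac{\partial f}{\partial\nabla\rho}\right)\phi\right]\mathrm{d}\mathbf r \\
&= \int \left(\frac{\partial f}{\partial\rho} - \nabla\cdot\frac{\partial f}{\partial\nabla\rho}\right)\phi(\mathbf r)\,\mathrm{d}\mathbf r.
\end{aligned}$$

The second line is obtained using the total derivative, where ∂f /∂∇ρ is a derivative of a scalar with respect to a vector.[Note 2] The third line is obtained by use of a product rule for divergence. The fourth line is obtained using the divergence theorem and the condition that ϕ=0 on the boundary of the region of integration. Since ϕ is also an arbitrary function, applying the fundamental lemma of calculus of variations to the last line gives the functional derivative.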

Examples

Thomas–Fermi kinetic energy functional

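The Thomas–Fermi model of 1927 used a kinetic energy functional for a noninteracting uniform electron gas in a first attempt at a density-functional theory of electronic structure:

$$T_\mathrm{TF}[\rho] = C_\mathrm{F}\int \rho^{5/3}(\mathbf r)\,\mathrm{d}\mathbf r.$$

Since the integrand of TTF[ρ] does not involve derivatives of ρ(r), the functional derivative of TTF[ρ] is,[8]

$$\frac{\delta T_\mathrm{TF}}{\delta\rho(\mathbf r)} = C_\mathrm{F}\frac{\partial\rho^{5/3}(\mathbf r)}{\partial\rho(\mathbf r)} = \frac{5}{3}\,C_\mathrm{F}\,\rho^{2/3}(\mathbf r).$$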
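A finite-difference check can make this result concrete. The following is a minimal sketch under stated assumptions: the functional is taken to be C_F ∫ ρ^(5/3) with C_F = (3/10)(3π²)^(2/3) in Hartree atomic units, it is discretized on a one-dimensional grid purely for illustration, and the function names and sample density are hypothetical. On a grid with spacing h, the functional derivative at x_i corresponds to (1/h) ∂F/∂ρ_i.

```python
import math

# Thomas-Fermi constant in Hartree atomic units (assumed unit system).
C_F = 0.3 * (3.0 * math.pi ** 2) ** (2.0 / 3.0)


def t_tf(rho, h):
    """Grid approximation of C_F * integral of rho^(5/3) (rectangle rule)."""
    return C_F * sum(r ** (5.0 / 3.0) for r in rho) * h


def numerical_functional_derivative(functional, rho, h, i, eps=1e-6):
    """Central difference in the single grid value rho[i], divided by h."""
    up = list(rho)
    up[i] += eps
    down = list(rho)
    down[i] -= eps
    return (functional(up, h) - functional(down, h)) / (2.0 * eps * h)


h = 0.1
grid = [k * h for k in range(50)]
rho = [math.exp(-x) + 0.1 for x in grid]  # arbitrary positive sample density

i = 10
numeric = numerical_functional_derivative(t_tf, rho, h, i)
analytic = (5.0 / 3.0) * C_F * rho[i] ** (2.0 / 3.0)
print(numeric, analytic)
```

The two printed numbers should agree to within the finite-difference error, since the integrand depends on ρ only pointwise.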

Coulomb potential energy functional

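For the electron-nucleus potential, Thomas and Fermi employed the Coulomb potential energy functional

$$V[\rho] = \int \frac{\rho(\mathbf r)}{|\mathbf r|}\,\mathrm{d}\mathbf r.$$

Applying the definition of functional derivative,

$$\int \frac{\delta V}{\delta\rho(\mathbf r)}\,\phi(\mathbf r)\,\mathrm{d}\mathbf r = \left[\frac{\mathrm{d}}{\mathrm{d}\varepsilon}\int \frac{\rho(\mathbf r)+\varepsilon\phi(\mathbf r)}{|\mathbf r|}\,\mathrm{d}\mathbf r\right]_{\varepsilon=0} = \int \frac{1}{|\mathbf r|}\,\phi(\mathbf r)\,\mathrm{d}\mathbf r.$$

So,

$$\frac{\delta V}{\delta\rho(\mathbf r)} = \frac{1}{|\mathbf r|}.$$

For the classical part of the electron-electron interaction, Thomas and Fermi employed the Coulomb potential energy functional

$$J[\rho] = \frac{1}{2}\iint \frac{\rho(\mathbf r)\rho(\mathbf r')}{|\mathbf r-\mathbf r'|}\,\mathrm{d}\mathbf r\,\mathrm{d}\mathbf r'.$$

From the definition of the functional derivative,

$$\begin{aligned}
\int \frac{\delta J}{\delta\rho(\mathbf r)}\,\phi(\mathbf r)\,\mathrm{d}\mathbf r
&= \left[\frac{\mathrm{d}}{\mathrm{d}\varepsilon}J[\rho+\varepsilon\phi]\right]_{\varepsilon=0} \\
&= \left[\frac{\mathrm{d}}{\mathrm{d}\varepsilon}\,\frac{1}{2}\iint \frac{[\rho(\mathbf r)+\varepsilon\phi(\mathbf r)]\,[\rho(\mathbf r')+\varepsilon\phi(\mathbf r')]}{|\mathbf r-\mathbf r'|}\,\mathrm{d}\mathbf r\,\mathrm{d}\mathbf r'\right]_{\varepsilon=0} \\
&= \frac{1}{2}\iint \frac{\rho(\mathbf r')\phi(\mathbf r)}{|\mathbf r-\mathbf r'|}\,\mathrm{d}\mathbf r\,\mathrm{d}\mathbf r' + \frac{1}{2}\iint \frac{\rho(\mathbf r)\phi(\mathbf r')}{|\mathbf r-\mathbf r'|}\,\mathrm{d}\mathbf r\,\mathrm{d}\mathbf r'.
\end{aligned}$$

The first and second terms on the right hand side of the last equation are equal, since r and r′ in the second term can be interchanged without changing the value of the integral. Therefore,

$$\int \frac{\delta J}{\delta\rho(\mathbf r)}\,\phi(\mathbf r)\,\mathrm{d}\mathbf r = \int \left(\int \frac{\rho(\mathbf r')}{|\mathbf r-\mathbf r'|}\,\mathrm{d}\mathbf r'\right)\phi(\mathbf r)\,\mathrm{d}\mathbf r$$

and the functional derivative of the electron-electron Coulomb potential energy functional J[ρ] is,[9]

$$\frac{\delta J}{\delta\rho(\mathbf r)} = \int \frac{\rho(\mathbf r')}{|\mathbf r-\mathbf r'|}\,\mathrm{d}\mathbf r'.$$

The second functional derivative is

$$\frac{\delta^2 J[\rho]}{\delta\rho(\mathbf r')\,\delta\rho(\mathbf r)} = \frac{1}{|\mathbf r-\mathbf r'|}.$$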

Weizsäcker kinetic energy functional

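In 1935 von Weizsäcker proposed adding a gradient correction to the Thomas-Fermi kinetic energy functional to make it better suited to a molecular electron cloud:

$$T_\mathrm{W}[\rho] = \frac{1}{8}\int \frac{\nabla\rho(\mathbf r)\cdot\nabla\rho(\mathbf r)}{\rho(\mathbf r)}\,\mathrm{d}\mathbf r = \int t_\mathrm{W}\,\mathrm{d}\mathbf r,$$

where

$$t_\mathrm{W} \equiv \frac{1}{8}\frac{\nabla\rho\cdot\nabla\rho}{\rho} \quad\text{and}\quad \rho = \rho(\mathbf r).$$

Using a previously derived formula for the functional derivative,

$$\frac{\delta T_\mathrm{W}}{\delta\rho(\mathbf r)} = \frac{\partial t_\mathrm{W}}{\partial\rho} - \nabla\cdot\frac{\partial t_\mathrm{W}}{\partial\nabla\rho} = -\frac{1}{8}\frac{\nabla\rho\cdot\nabla\rho}{\rho^2} - \left(\frac{1}{4}\frac{\nabla^2\rho}{\rho} - \frac{1}{4}\frac{\nabla\rho\cdot\nabla\rho}{\rho^2}\right),$$

and the result is,[10]

$$\frac{\delta T_\mathrm{W}}{\delta\rho(\mathbf r)} = \frac{1}{8}\frac{\nabla\rho\cdot\nabla\rho}{\rho^2} - \frac{1}{4}\frac{\nabla^2\rho}{\rho}.$$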

Entropy

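The entropy of a discrete random variable is a functional of the probability mass function:

$$H[p(x)] = -\sum_x p(x)\log p(x).$$

Thus,

$$\sum_x \frac{\delta H}{\delta p(x)}\,\phi(x) = \left[\frac{\mathrm{d}}{\mathrm{d}\varepsilon}H[p(x)+\varepsilon\phi(x)]\right]_{\varepsilon=0} = -\sum_x \left[1+\log p(x)\right]\phi(x).$$

Thus,

$$\frac{\delta H}{\delta p(x)} = -1 - \log p(x).$$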

Exponential

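Let

$$F[\varphi(x)] = e^{\int \varphi(x)\,g(x)\,\mathrm{d}x}.$$

Using the delta function as a test function,

$$\frac{\delta F[\varphi(x)]}{\delta\varphi(y)} = \lim_{\varepsilon\to 0}\frac{F[\varphi(x)+\varepsilon\delta(x-y)] - F[\varphi(x)]}{\varepsilon} = e^{\int \varphi(x)\,g(x)\,\mathrm{d}x}\,\lim_{\varepsilon\to 0}\frac{e^{\varepsilon g(y)}-1}{\varepsilon} = e^{\int \varphi(x)\,g(x)\,\mathrm{d}x}\,g(y).$$

Thus,

$$\frac{\delta F[\varphi(x)]}{\delta\varphi(y)} = g(y)\,F[\varphi(x)].$$

This is particularly useful in calculating the correlation functions from the partition function in quantum field theory.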

Functional derivative of a function

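A function can be written in the form of an integral like a functional. For example,

$$\rho(\mathbf r) = F[\rho] = \int \rho(\mathbf r')\,\delta(\mathbf r-\mathbf r')\,\mathrm{d}\mathbf r'.$$

Since the integrand does not depend on derivatives of ρ, the functional derivative of ρ(r) is,

$$\frac{\delta\rho(\mathbf r)}{\delta\rho(\mathbf r')} \equiv \frac{\delta F}{\delta\rho(\mathbf r')} = \frac{\partial}{\partial\rho(\mathbf r')}\left[\rho(\mathbf r')\,\delta(\mathbf r-\mathbf r')\right] = \delta(\mathbf r-\mathbf r').$$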

Application in calculus of variations

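Consider the functional

$$J[f] = \int_a^b L(x, f(x), f'(x))\,\mathrm{d}x,$$

where f ′(x) ≡ df/dx. If f is varied by adding to it a function δf, and the resulting integrand L(x, f +δf, f '+δf ′) is expanded in powers of δf, then the change in the value of J to first order in δf can be expressed as follows:[11][Note 3]

$$\delta J = \int_a^b \left(\frac{\partial L}{\partial f}\,\delta f(x) + \frac{\partial L}{\partial f'}\frac{\mathrm{d}}{\mathrm{d}x}\delta f(x)\right)\mathrm{d}x = \int_a^b \left(\frac{\partial L}{\partial f} - \frac{\mathrm{d}}{\mathrm{d}x}\frac{\partial L}{\partial f'}\right)\delta f(x)\,\mathrm{d}x + \left[\frac{\partial L}{\partial f'}\,\delta f\right]_a^b.$$

The coefficient of δf(x), denoted as δJ/δf(x), is called the functional derivative of J with respect to f at the point x.[3] For this example functional, the functional derivative is the left hand side of the Euler-Lagrange equation,[12]

$$\frac{\delta J}{\delta f(x)} = \frac{\partial L}{\partial f} - \frac{\mathrm{d}}{\mathrm{d}x}\frac{\partial L}{\partial f'}.$$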

Using the delta function as a test function

In physics, it is common to use the Dirac delta function δ(x − y) in place of a generic test function ϕ(x), yielding the functional derivative at the point y (this is a point of the whole functional derivative, just as a partial derivative is a component of the gradient):

$$\frac{\delta F[\rho(x)]}{\delta\rho(y)} = \lim_{\varepsilon\to 0}\frac{F[\rho(x)+\varepsilon\delta(x-y)] - F[\rho(x)]}{\varepsilon}.$$

This works in cases when F[ρ(x) + εf(x)] can formally be expanded as a series (or at least up to first order) in ε. The formula is however not mathematically rigorous, since F[ρ(x) + εδ(x − y)] is usually not even defined.

The definition given in a previous section is based on a relationship that holds for all test functions ϕ, so one might think that it should hold also when ϕ is chosen to be a specific function such as the delta function. However, the delta function is not a valid test function (it is not even a proper function).

Notes

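- ^ Called differential in (Parr & Yang 1989, p. 246), variation or first variation in (Courant & Hilbert 1953, p. 186), and variation or differential in (Gelfand & Fomin 2000, p. 11, § 3.2).

- ^ For a three-dimensional Cartesian coordinate system,

$$\frac{\partial f}{\partial\nabla\rho} = \frac{\partial f}{\partial\rho_x}\,\hat{\mathbf i} + \frac{\partial f}{\partial\rho_y}\,\hat{\mathbf j} + \frac{\partial f}{\partial\rho_z}\,\hat{\mathbf k}, \quad\text{where}\quad \rho_x = \frac{\partial\rho}{\partial x},\ \rho_y = \frac{\partial\rho}{\partial y},\ \rho_z = \frac{\partial\rho}{\partial z}.$$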

- ^ According to Giaquinta & Hildebrandt (1996, p. 18), this notation is customary in physical literature.


Thomas–Fermi model

The predecessor to density functional theory was the Thomas–Fermi model, developed independently by Thomas and Fermi in 1927. They used a statistical model to approximate the distribution of electrons in an atom. The mathematical basis postulated that electrons are distributed uniformly in phase space with two electrons in every h³ of volume.[13] For each element of coordinate space volume d³r we can fill out a sphere of momentum space up to the Fermi momentum pf [14]

$$\frac{4}{3}\pi p_f^3(\mathbf r).$$

Kinetic energy

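For a small volume element ΔV, and for the atom in its ground state, we can fill out a spherical momentum space volume Vf up to the Fermi momentum pf , and thus,[15]

$$V_f = \frac{4}{3}\pi p_f^3(\mathbf r),$$

where r is a point in ΔV.

The corresponding phase space volume is

$$\Delta V_{ph} = V_f\,\Delta V = \frac{4}{3}\pi p_f^3(\mathbf r)\,\Delta V.$$

The electrons in ΔVph are distributed uniformly with two electrons per h³ of this phase space volume, where h is Planck's constant.[16] Then the number of electrons in ΔVph is

$$\Delta N_{ph} = \frac{2}{h^3}\,\Delta V_{ph} = \frac{8\pi}{3h^3}\,p_f^3(\mathbf r)\,\Delta V.$$

The number of electrons in ΔV is

$$\Delta N = n(\mathbf r)\,\Delta V,$$

where n(r) is the electron density.

Equating the number of electrons in ΔV to that in ΔVph gives

$$n(\mathbf r) = \frac{8\pi}{3h^3}\,p_f^3(\mathbf r).$$

The fraction of electrons at r that have momentum between p and p+dp is,

$$F_{\mathbf r}(p)\,dp = \begin{cases}\dfrac{4\pi p^2\,dp}{\frac{4}{3}\pi p_f^3(\mathbf r)} & p \le p_f(\mathbf r) \\[2ex] 0 & \text{otherwise.}\end{cases}$$

Using the classical expression for the kinetic energy of an electron with mass me, the kinetic energy per unit volume at r for the electrons of the atom is,

$$t(\mathbf r) = \int \frac{p^2}{2m_e}\,n(\mathbf r)\,F_{\mathbf r}(p)\,dp = n(\mathbf r)\int_0^{p_f(\mathbf r)}\frac{p^2}{2m_e}\,\frac{4\pi p^2}{\frac{4}{3}\pi p_f^3(\mathbf r)}\,dp = C_F\,[n(\mathbf r)]^{5/3},$$

where the previous expression relating n(r) to pf(r) has been used and,

$$C_F = \frac{3h^2}{10m_e}\left(\frac{3}{8\pi}\right)^{2/3}.$$

Integrating the kinetic energy per unit volume t(r) over all space results in the total kinetic energy of the electrons,[17]

$$T = C_F\int [n(\mathbf r)]^{5/3}\,d^3r.$$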

This result shows that the total kinetic energy of the electrons can be expressed in terms of only the spatially varying electron density according to the Thomas–Fermi model. As such, they were able to calculate the energy of an atom using this expression for the kinetic energy combined with the classical expressions for the nuclear-electron and electron-electron interactions (which can both also be represented in terms of the electron density).
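As an illustration of how the kinetic energy follows from the density alone, the sketch below evaluates C_F ∫ n^(5/3) d³r for the hydrogen 1s density n(r) = e^(−2r)/π. It assumes Hartree atomic units, in which C_F reduces to (3/10)(3π²)^(2/3); the function names, grid parameters, and choice of density are illustrative assumptions.

```python
import math

# Thomas-Fermi constant in Hartree atomic units (h = 2*pi, m_e = 1).
C_F = 0.3 * (3.0 * math.pi ** 2) ** (2.0 / 3.0)


def hydrogen_density(r):
    """Ground-state electron density of the hydrogen atom in atomic units."""
    return math.exp(-2.0 * r) / math.pi


def thomas_fermi_kinetic_energy(density, r_max=30.0, n=30000):
    """C_F * integral of density^(5/3) over space, for a spherical density.

    Uses the trapezoidal rule on the radial integral with weight 4*pi*r^2.
    """
    h = r_max / n
    total = 0.0
    for i in range(n + 1):
        r = i * h
        weight = 0.5 if i in (0, n) else 1.0
        total += weight * 4.0 * math.pi * r * r * density(r) ** (5.0 / 3.0)
    return C_F * total * h


t_numeric = thomas_fermi_kinetic_energy(hydrogen_density)

# Closed form of the same integral, using int_0^inf r^2 exp(-a r) dr = 2 / a^3
# with a = 10/3.
t_closed = C_F * 4.0 * math.pi ** (-2.0 / 3.0) * 2.0 / (10.0 / 3.0) ** 3

print(t_numeric, t_closed)
```

Both values come out near 0.289 Hartree, well below the exact hydrogen kinetic energy of 0.5 Hartree, which shows in a small case how approximate the expression is.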

Potential energies

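The potential energy of an atom's electrons, due to the electric attraction of the positively charged nucleus is,

$$U_{eN} = \int n(\mathbf r)\,V_N(\mathbf r)\,d^3r,$$

where VN(r) is the potential energy of an electron at r that is due to the electric field of the nucleus. For the case of a nucleus centered at r = 0 with charge Ze, where Z is a positive integer and e is the elementary charge,

$$V_N(\mathbf r) = \frac{-Ze^2}{r}.$$

The potential energy of the electrons due to their mutual electric repulsion is,

$$U_{ee} = \frac{1}{2}\,e^2\iint \frac{n(\mathbf r)\,n(\mathbf r')}{|\mathbf r-\mathbf r'|}\,d^3r\,d^3r'.$$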

Total energy

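The total energy of the electrons is the sum of their kinetic and potential energies,[18]

$$E = T + U_{eN} + U_{ee} = C_F\int [n(\mathbf r)]^{5/3}\,d^3r + \int n(\mathbf r)\,V_N(\mathbf r)\,d^3r + \frac{1}{2}\,e^2\iint \frac{n(\mathbf r)\,n(\mathbf r')}{|\mathbf r-\mathbf r'|}\,d^3r\,d^3r'.$$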

Inaccuracies and improvements

Although this was an important first step, the Thomas–Fermi equation's accuracy is limited because the resulting expression for the kinetic energy is only approximate, and because the method does not attempt to represent the exchange energy of an atom as a consequence of the Pauli principle. A term for the exchange energy was added by Dirac in 1930.

However, the Thomas–Fermi–Dirac theory remained rather inaccurate for most applications. The largest source of error was the representation of the kinetic energy, followed by the errors in the exchange energy and the complete neglect of electron correlation.

In 1962, Edward Teller showed that Thomas–Fermi theory cannot describe molecular bonding – the energy of any molecule calculated with TF theory is higher than the sum of the energies of the constituent atoms. More generally, the total energy of a molecule decreases when the bond lengths are uniformly increased.[19][20][21][22] This can be overcome by improving the expression for the kinetic energy.[23]

The Thomas–Fermi kinetic energy can be improved by adding to it the Weizsäcker (1935) correction,[24]

$$T_W = \frac{1}{8}\frac{\hbar^2}{m}\int \frac{|\nabla n(\mathbf r)|^2}{n(\mathbf r)}\,d^3r,$$

which can then make a much improved Thomas–Fermi–Dirac–Weizsäcker density functional theory (TFDW-DFT). This would be equivalent to the Hartree and then Hartree–Fock mean field theories, which do not treat static electron correlation (treated by the CASSCF theory developed by Bjorn Roos' group in Lund, Sweden) or dynamic correlation (treated by Moeller–Plesset perturbation theory to second order (MP2) or by CASPT2, the extension of MP2 theory to systems not well treated by simple single reference/configuration methods like Hartree–Fock theory and Kohn–Sham DFT).

Note that KS-DFT has also been extended to treat systems for which the ground electronic state is not well represented by a single Slater determinant of either Hartree–Fock or "Kohn–Sham" orbitals. This is the so-called CAS-DFT method, also being developed in the group of Bjorn Roos in Lund.


Pauli exclusion principle, Connection to quantum state symmetry

The Pauli exclusion principle with a single-valued many-particle wavefunction is equivalent to requiring the wavefunction to be antisymmetric. An antisymmetric two-particle state is represented as a sum of states in which one particle is in state |x⟩ and the other in state |y⟩:

$$|\psi\rangle = \sum_{x,y} A(x,y)\,|x,y\rangle,$$

and antisymmetry under exchange means that

$$A(x,y) = -A(y,x).$$

This implies A(x,y) = 0 when x=y, which is Pauli exclusion. It is true in any basis, since unitary changes of basis keep antisymmetric matrices antisymmetric, although strictly speaking, the quantity A(x,y) is not a matrix but an antisymmetric rank-two tensor.

Conversely, if the diagonal quantities A(x,x) are zero in every basis, then the wavefunction component:

$$A(x,y) = \langle\psi|x,y\rangle = \langle\psi|\big(|x\rangle\otimes|y\rangle\big)$$

is necessarily antisymmetric.

Quantum mechanical description of identical particles

Symmetrical and anti-symmetrical states

Let us define a linear operator P, called the exchange operator. When it acts on a tensor product of two state vectors, it exchanges the values of the state vectors:

$$P\big(|\psi\rangle|\phi\rangle\big) \equiv |\phi\rangle|\psi\rangle.$$

P is both Hermitian and unitary. Because it is unitary, we can regard it as a symmetry operator. We can describe this symmetry as the symmetry under the exchange of labels attached to the particles (i.e., to the single-particle Hilbert spaces).

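Clearly, P² = 1 (the identity operator), so the eigenvalues of P are +1 and −1. The corresponding eigenvectors are the symmetric and antisymmetric states:

$$P\,|n_1, n_2; S\rangle = +|n_1, n_2; S\rangle,$$

$$P\,|n_1, n_2; A\rangle = -|n_1, n_2; A\rangle.$$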

In other words, symmetric and antisymmetric states are essentially unchanged under the exchange of particle labels: they are only multiplied by a factor of +1 or −1, rather than being "rotated" somewhere else in the Hilbert space. This indicates that the particle labels have no physical meaning, in agreement with our earlier discussion on indistinguishability.
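These properties can be verified directly in a small example. The sketch below builds the matrix of the exchange operator on a two-particle space of dimension d × d; the helper names and the sample vectors are hypothetical, and integer entries are used so that the comparisons are exact.

```python
def kron(u, v):
    """Tensor product of two single-particle state vectors (index i * d + j)."""
    return [a * b for a in u for b in v]


def swap_matrix(d):
    """Matrix of the exchange operator P on a (d*d)-dimensional space."""
    n = d * d
    P = [[0] * n for _ in range(n)]
    for i in range(d):
        for j in range(d):
            P[j * d + i][i * d + j] = 1  # basis state |i>|j> is sent to |j>|i>
    return P


def apply_op(M, v):
    """Matrix-vector product."""
    return [sum(M[r][c] * v[c] for c in range(len(v))) for r in range(len(M))]


def matmul(A, B):
    """Product of two square matrices of equal size."""
    n = len(A)
    return [[sum(A[r][k] * B[k][c] for k in range(n)) for c in range(n)]
            for r in range(n)]


d = 2
P = swap_matrix(d)
identity = [[1 if r == c else 0 for c in range(d * d)] for r in range(d * d)]

psi, phi = [1, 2], [3, -1]  # arbitrary single-particle vectors
sym = [a + b for a, b in zip(kron(psi, phi), kron(phi, psi))]
anti = [a - b for a, b in zip(kron(psi, phi), kron(phi, psi))]

print(apply_op(P, kron(psi, phi)) == kron(phi, psi))  # P exchanges the factors
print(matmul(P, P) == identity)                       # P squared is the identity
print(apply_op(P, sym) == sym)                        # eigenvalue +1
print(apply_op(P, anti) == [-x for x in anti])        # eigenvalue -1
```

Because the matrix is real and symmetric, it is Hermitian, and because it squares to the identity it is also unitary.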

We have mentioned that P is Hermitian. As a result, it can be regarded as an observable of the system, which means that we can, in principle, perform a measurement to find out if a state is symmetric or antisymmetric. Furthermore, the equivalence of the particles indicates that the Hamiltonian can be written in a symmetrical form, such as

$$H = \frac{p_1^2}{2m} + \frac{p_2^2}{2m} + U(|x_1 - x_2|) + V(x_1) + V(x_2).$$

It is possible to show that such Hamiltonians satisfy the commutation relation

$$[P, H] = 0.$$

According to the Heisenberg equation, this means that the value of P is a constant of motion. If the quantum state is initially symmetric (antisymmetric), it will remain symmetric (antisymmetric) as the system evolves. Mathematically, this says that the state vector is confined to one of the two eigenspaces of P, and is not allowed to range over the entire Hilbert space. Thus, we might as well treat that eigenspace as the actual Hilbert space of the system. This is the idea behind the definition of Fock space.

We will now make the above discussion concrete, using the formalism developed in the article on the mathematical formulation of quantum mechanics.

Let n denote a complete set of (discrete) quantum numbers for specifying single-particle states (for example, for the particle in a box problem we can take n to be the quantized wave vector of the wavefunction). For simplicity, consider a system composed of two identical particles. Suppose that one particle is in the state n1, and another is in the state n2. What is the quantum state of the system? Intuitively, it should be

$$|n_1\rangle|n_2\rangle,$$

which is simply the canonical way of constructing a basis for a tensor product space of the combined system from the individual spaces. However, this expression implies the ability to identify the particle with n1 as "particle 1" and the particle with n2 as "particle 2". If the particles are indistinguishable, this is impossible by definition; either particle can be in either state. It turns out that we must have:[25]

$$|n_1\rangle|n_2\rangle \pm |n_2\rangle|n_1\rangle.$$

To see this, imagine a system of two identical particles. Suppose we know that one of the particles is in state n1 and the other is in state n2. Prior to the measurement, there is no way to know whether particle 1 is in state n1 and particle 2 is in state n2, or the other way around, because the particles are indistinguishable. There are therefore equal probabilities for each of the two assignments, meaning that the system is in a superposition of both states prior to the measurement.

States where this is a sum are known as symmetric; states involving the difference are called antisymmetric. More completely, symmetric states have the form

$$|n_1, n_2; S\rangle \equiv \text{constant}\times\big(|n_1\rangle|n_2\rangle + |n_2\rangle|n_1\rangle\big),$$

while antisymmetric states have the form

$$|n_1, n_2; A\rangle \equiv \text{constant}\times\big(|n_1\rangle|n_2\rangle - |n_2\rangle|n_1\rangle\big).$$

Note that if n1 and n2 are the same, the antisymmetric expression gives zero, which cannot be a state vector as it cannot be normalized. In other words, in an antisymmetric state two identical particles cannot occupy the same single-particle states. This is known as the Pauli exclusion principle, and it is the fundamental reason behind the chemical properties of atoms and the stability of matter.

Exchange symmetry

The importance of symmetric and antisymmetric states is ultimately based on empirical evidence. It appears to be a fact of nature that identical particles do not occupy states of a mixed symmetry, such as

$$|n_1, n_2; ?\rangle = \text{constant}\times\big(|n_1\rangle|n_2\rangle + i\,|n_2\rangle|n_1\rangle\big).$$

There is actually an exception to this rule, which we will discuss later. On the other hand, we can show that the symmetric and antisymmetric states are in a sense special, by examining a particular symmetry of the multiple-particle states known as exchange symmetry.

N particles

The above discussion generalizes readily to the case of N particles. Suppose we have N particles with quantum numbers n1, n2, ..., nN. If the particles are bosons, they occupy a totally symmetric state, which is symmetric under the exchange of any two particle labels:

$$|n_1 n_2 \cdots n_N; S\rangle = \sqrt{\frac{\prod_j n_j!}{N!}}\,\sum_p |n_{p(1)}\rangle|n_{p(2)}\rangle\cdots|n_{p(N)}\rangle.$$

Here, the sum is taken over all different states under permutation p of the N elements. The square root to the left of the sum is a normalizing constant. In the product under the square root, the quantity nj stands for the number of times each of the single-particle states appears in the N-particle state. In the following matrix each row represents one permutation of N elements.

If we choose the first row as a reference, the next rows, imply one permutation, the next rows imply two permutations, and so on. So the number of rows with k permutations with regard to the first row would be .

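In the same vein, fermions occupy totally antisymmetric states:

$$|n_1 n_2 \cdots n_N; A\rangle = \frac{1}{\sqrt{N!}}\,\sum_p \operatorname{sgn}(p)\,|n_{p(1)}\rangle|n_{p(2)}\rangle\cdots|n_{p(N)}\rangle.$$

Here, sgn(p) is the signature of each permutation (i.e. +1 if p is composed of an even number of transpositions, and −1 if odd). Note that we have omitted the Πj nj! term, because each single-particle state can appear only once in a fermionic state. Otherwise the sum would again be zero due to the antisymmetry, thus representing a physically impossible state. This is the Pauli exclusion principle for many particles.

These states have been normalized so that

$$\langle n_1 n_2 \cdots n_N; S\,|\,n_1 n_2 \cdots n_N; S\rangle = 1, \qquad \langle n_1 n_2 \cdots n_N; A\,|\,n_1 n_2 \cdots n_N; A\rangle = 1.$$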

Measurements of identical particles

Suppose we have a system of N bosons (fermions) in the symmetric (antisymmetric) state

$$|n_1 n_2 \cdots n_N; S/A\rangle$$

and we perform a measurement of some other set of discrete observables, m. In general, this would yield some result m1 for one particle, m2 for another particle, and so forth. If the particles are bosons (fermions), the state after the measurement must remain symmetric (antisymmetric), i.e.

$$|m_1 m_2 \cdots m_N; S/A\rangle.$$

The probability of obtaining a particular result for the m measurement is

$$P_{S/A}(n_1,\ldots,n_N \to m_1,\ldots,m_N) \equiv \big|\langle m_1 \cdots m_N; S/A\,|\,n_1 \cdots n_N; S/A\rangle\big|^2.$$

We can show that

$$\sum_{m_1 \le m_2 \le \cdots \le m_N} P_{S/A}(n_1,\ldots,n_N \to m_1,\ldots,m_N) = 1,$$

which verifies that the total probability is 1. Note that we have to restrict the sum to ordered values of m1, ..., mN to ensure that we do not count each multi-particle state more than once.

Wavefunction representation

So far, we have worked with discrete observables. We will now extend the discussion to continuous observables, such as the position x.

Recall that an eigenstate of a continuous observable represents an infinitesimal range of values of the observable, not a single value as with discrete observables. For instance, if a particle is in a state |ψ⟩, the probability of finding it in a region of volume d³x surrounding some position x is

$$|\langle x|\psi\rangle|^2\,d^3x.$$

As a result, the continuous eigenstates |x⟩ are normalized to the delta function instead of unity:

$$\langle x|x'\rangle = \delta^3(x - x').$$

We can construct symmetric and antisymmetric multi-particle states out of continuous eigenstates in the same way as before. However, it is customary to use a different normalizing constant:

We can then write a many-body wavefunction,

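where the single-particle wavefunctions are defined, as usual, by

$$\psi_n(x) \equiv \langle x|n\rangle.$$

The most important property of these wavefunctions is that exchanging any two of the coordinate variables changes the wavefunction by only a plus or minus sign. This is the manifestation of symmetry and antisymmetry in the wavefunction representation:

$$\Psi^{(S)}_{n_1\cdots n_N}(\cdots x_i \cdots x_j \cdots) = \Psi^{(S)}_{n_1\cdots n_N}(\cdots x_j \cdots x_i \cdots),$$

$$\Psi^{(A)}_{n_1\cdots n_N}(\cdots x_i \cdots x_j \cdots) = -\Psi^{(A)}_{n_1\cdots n_N}(\cdots x_j \cdots x_i \cdots).$$

The many-body wavefunction has the following significance: if the system is initially in a state with quantum numbers n1, ..., nN, and we perform a position measurement, the probability of finding particles in infinitesimal volumes near x1, x2, ..., xN is

$$N!\,\big|\Psi^{(S/A)}_{n_1 n_2\cdots n_N}(x_1, x_2, \ldots, x_N)\big|^2\,d^{3N}x.$$

The factor of N! comes from our normalizing constant, which has been chosen so that, by analogy with single-particle wavefunctions,

$$\int\!\int\!\cdots\!\int \big|\Psi^{(S/A)}_{n_1 n_2\cdots n_N}(x_1, x_2, \ldots, x_N)\big|^2\,d^3x_1\,d^3x_2\cdots d^3x_N = 1.$$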

Because each integral runs over all possible values of x, each multi-particle state appears N! times in the integral. In other words, the probability associated with each event is evenly distributed across N! equivalent points in the integral space. Because it is usually more convenient to work with unrestricted integrals than restricted ones, we have chosen our normalizing constant to reflect this.

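Finally, it is interesting to note that the antisymmetric wavefunction can be written as the determinant of a matrix, known as a Slater determinant:

$$\Psi^{(A)}_{n_1\cdots n_N}(x_1,\ldots,x_N) = \frac{1}{\sqrt{N!}}\begin{vmatrix}\psi_{n_1}(x_1) & \psi_{n_1}(x_2) & \cdots & \psi_{n_1}(x_N) \\ \psi_{n_2}(x_1) & \psi_{n_2}(x_2) & \cdots & \psi_{n_2}(x_N) \\ \vdots & \vdots & \ddots & \vdots \\ \psi_{n_N}(x_1) & \psi_{n_N}(x_2) & \cdots & \psi_{n_N}(x_N)\end{vmatrix}.$$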
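The antisymmetry of such a determinant can be seen in a short numerical sketch. It evaluates the signed sum over permutations with a 1/√N! prefactor, using one-dimensional particle-in-a-box orbitals √2 sin(kπx) on [0, 1] as an assumed example; the function names and coordinates are hypothetical.

```python
import math
from itertools import permutations


def permutation_sign(perm):
    """Signature of a permutation: +1 if even, -1 if odd (via inversions)."""
    inversions = sum(
        1
        for i in range(len(perm))
        for j in range(i + 1, len(perm))
        if perm[i] > perm[j]
    )
    return -1 if inversions % 2 else 1


def slater_wavefunction(orbitals, coords):
    """(1 / sqrt(N!)) * sum over p of sgn(p) * prod_j orbitals[p(j)](x_j)."""
    n = len(orbitals)
    total = 0.0
    for perm in permutations(range(n)):
        term = float(permutation_sign(perm))
        for j, x in enumerate(coords):
            term *= orbitals[perm[j]](x)
        total += term
    return total / math.sqrt(math.factorial(n))


def box_orbital(k):
    """Particle-in-a-box orbital on [0, 1] with quantum number k."""
    return lambda x: math.sqrt(2.0) * math.sin(k * math.pi * x)


box = [box_orbital(k) for k in (1, 2, 3)]

psi = slater_wavefunction(box, [0.2, 0.45, 0.7])
swapped = slater_wavefunction(box, [0.45, 0.2, 0.7])      # exchange x1 and x2
coincident = slater_wavefunction(box, [0.2, 0.2, 0.7])    # two equal coordinates
repeated = slater_wavefunction([box[0], box[0], box[1]], [0.2, 0.45, 0.7])

print(psi, swapped)          # equal magnitude, opposite sign
print(coincident, repeated)  # both vanish up to rounding
```

The last line illustrates the exclusion principle in this representation: the wavefunction vanishes when two particles share a coordinate or when the same orbital is used twice.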

Hartree-Fock (HF)

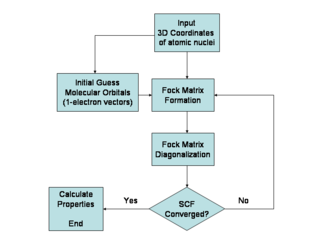

Hartree–Fock algorithm

The Hartree–Fock method is typically used to solve the time-independent Schrödinger equation for a multi-electron atom or molecule as described in the Born–Oppenheimer approximation. Since there are no known analytic solutions for many-electron systems (hydrogenic atoms and the diatomic hydrogen cation being notable one-electron exceptions), the problem is solved numerically. Due to the nonlinearities introduced by the Hartree–Fock approximation, the equations are solved using a nonlinear method such as iteration, which gives rise to the name "self-consistent field method."

Approximations

The Hartree–Fock method makes five major simplifications in order to deal with this task:

- The Born–Oppenheimer approximation is inherently assumed. The full molecular wave function is actually a function of the coordinates of each of the nuclei, in addition to those of the electrons.

- Typically, relativistic effects are completely neglected. The momentum operator is assumed to be completely non-relativistic.

- The variational solution is assumed to be a linear combination of a finite number of basis functions, which are usually (but not always) chosen to be orthogonal. The finite basis set is assumed to be approximately complete.

- Each energy eigenfunction is assumed to be describable by a single Slater determinant, an antisymmetrized product of one-electron wave functions (i.e., orbitals).

- The mean field approximation is implied. Effects arising from deviations from this assumption, known as electron correlation, are completely neglected for the electrons of opposite spin, but are taken into account for electrons of parallel spin.[26][27] (Electron correlation should not be confused with electron exchange, which is fully accounted for in the Hartree–Fock method.)[27]

Relaxation of the last two approximations gives rise to many so-called post-Hartree–Fock methods.

The Fock operator

Because the electron-electron repulsion term of the electronic molecular Hamiltonian involves the coordinates of two different electrons, it is necessary to reformulate it in an approximate way. Under this approximation (outlined under Hartree–Fock algorithm), all of the terms of the exact Hamiltonian except the nuclear-nuclear repulsion term are re-expressed as the sum of one-electron operators outlined below, for closed-shell atoms or molecules (with two electrons in each spatial orbital).[28] The "(1)" following each operator symbol simply indicates that the operator is 1-electron in nature.

$$\hat F[\{\phi_j\}](1) = \hat H^{\text{core}}(1) + \sum_{j=1}^{n/2}\left[2\hat J_j(1) - \hat K_j(1)\right],$$

where F̂[{φj}](1) is the one-electron Fock operator generated by the orbitals φj, and

$$\hat H^{\text{core}}(1) = -\frac{1}{2}\nabla_1^2 - \sum_\alpha \frac{Z_\alpha}{r_{1\alpha}}$$

is the one-electron core Hamiltonian. Also Ĵj(1) is the Coulomb operator, defining the electron-electron repulsion energy due to each of the two electrons in the jth orbital.[28]

Finally K̂j(1) is the exchange operator, defining the electron exchange energy due to the antisymmetry of the total n-electron wave function.[28]

This (so called) "exchange energy" operator, K, is simply an artifact of the Slater determinant.

Finding the Hartree–Fock one-electron wave functions is now equivalent to solving the eigenfunction equation:

$$\hat F(1)\,\phi_i(1) = \epsilon_i\,\phi_i(1),$$

where φi(1) are a set of one-electron wave functions, called the Hartree–Fock molecular orbitals.

Fock matrix

In the Hartree–Fock method of quantum mechanics, the Fock matrix is a matrix approximating the single-electron energy operator of a given quantum system in a given set of basis vectors.[29]

It is most often formed in computational chemistry when attempting to solve the Roothaan equations for an atomic or molecular system. The Fock matrix is actually an approximation to the true Hamiltonian operator of the quantum system. It includes the effects of electron-electron repulsion only in an average way. Importantly, because the Fock operator is a one-electron operator, it does not include the electron correlation energy.

The Fock matrix is defined by the Fock operator. For the restricted case which assumes closed-shell orbitals and single-determinantal wavefunctions, the Fock operator for the i-th electron is given by:[30]

$$\hat F(i) = \hat h(i) + \sum_{j=1}^{n/2}\left[2\hat J_j(i) - \hat K_j(i)\right],$$

where:

- F̂(i) is the Fock operator for the i-th electron in the system,

- ĥ(i) is the one-electron Hamiltonian for the i-th electron,

- n is the number of electrons and n/2 is the number of occupied orbitals in the closed-shell system,

- Ĵj(i) is the Coulomb operator, defining the repulsive force between the j-th and i-th electrons in the system,

- K̂j(i) is the exchange operator, defining the quantum effect produced by exchanging two electrons.

The Coulomb operator is multiplied by two since there are two electrons in each occupied orbital. The exchange operator is not multiplied by two since it has a non-zero result only for electrons which have the same spin as the i-th electron.

For systems with unpaired electrons there are many choices of Fock matrices.

Linear combination of atomic orbitals

Typically, in modern Hartree–Fock calculations, the one-electron wave functions are approximated by a linear combination of atomic orbitals. These atomic orbitals are called Slater-type orbitals. Furthermore, it is very common for the "atomic orbitals" in use to actually be composed of a linear combination of one or more Gaussian-type orbitals, rather than Slater-type orbitals, in the interests of saving large amounts of computation time.

Various basis sets are used in practice, most of which are composed of Gaussian functions. In some applications, an orthogonalization method such as the Gram–Schmidt process is performed in order to produce a set of orthogonal basis functions. This can in principle save computational time when the computer is solving the Roothaan–Hall equations by converting the overlap matrix effectively to an identity matrix. However, in most modern computer programs for molecular Hartree–Fock calculations this procedure is not followed due to the high numerical cost of orthogonalization and the advent of more efficient, often sparse, algorithms for solving the generalized eigenvalue problem, of which the Roothaan–Hall equations are an example.
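How orthogonalization turns the overlap matrix into an identity matrix can be sketched as follows. The example applies the Gram–Schmidt process to coefficient vectors using the overlap matrix S as the inner product; the 3 × 3 overlap matrix is a made-up positive-definite example and the function names are hypothetical, so this is an illustration rather than the procedure of any particular program.

```python
import math


def s_inner(u, v, S):
    """Inner product of coefficient vectors u, v in a basis with overlap S."""
    n = len(S)
    return sum(u[i] * S[i][j] * v[j] for i in range(n) for j in range(n))


def gram_schmidt(S):
    """Return coefficient vectors that are orthonormal with respect to S."""
    n = len(S)
    ortho = []
    for k in range(n):
        v = [1.0 if i == k else 0.0 for i in range(n)]  # k-th basis function
        for u in ortho:
            proj = s_inner(u, v, S)  # component along an earlier vector
            v = [vi - proj * ui for vi, ui in zip(v, u)]
        norm = math.sqrt(s_inner(v, v, S))
        ortho.append([vi / norm for vi in v])
    return ortho


# Hypothetical overlap matrix of three normalized, non-orthogonal functions.
S = [[1.0, 0.6, 0.2],
     [0.6, 1.0, 0.5],
     [0.2, 0.5, 1.0]]

X = gram_schmidt(S)

# Overlap matrix expressed in the new functions: should be the identity.
new_overlap = [[s_inner(X[a], X[b], S) for b in range(3)] for a in range(3)]
for row in new_overlap:
    print([round(value, 12) for value in row])
```

In the transformed basis the generalized eigenvalue problem reduces to an ordinary one, which is the saving referred to above.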

DFT Derivation and formalism

As usual in many-body electronic structure calculations, the nuclei of the treated molecules or clusters are seen as fixed (the Born–Oppenheimer approximation), generating a static external potential V in which the electrons are moving. A stationary electronic state is then described by a wavefunction Ψ(r1, …, rN) satisfying the many-electron time-independent Schrödinger equation

$$\hat H\Psi = \left[\hat T + \hat V + \hat U\right]\Psi = \left[\sum_i^N\left(-\frac{\hbar^2}{2m_i}\nabla_i^2\right) + \sum_i^N V(\mathbf r_i) + \sum_{i<j}^N U(\mathbf r_i, \mathbf r_j)\right]\Psi = E\Psi,$$

where, for the N-electron system, Ĥ is the Hamiltonian, E is the total energy, T̂ is the kinetic energy, V̂ is the potential energy from the external field due to positively charged nuclei, and Û is the electron-electron interaction energy. The operators T̂ and Û are called universal operators as they are the same for any N-electron system, while V̂ is system dependent. This complicated many-particle equation is not separable into simpler single-particle equations because of the interaction term Û.

There are many sophisticated methods for solving the many-body Schrödinger equation based on the expansion of the wavefunction in Slater determinants. While the simplest one is the Hartree–Fock method, more sophisticated approaches are usually categorized as post-Hartree–Fock methods. However, the problem with these methods is the huge computational effort, which makes it virtually impossible to apply them efficiently to larger, more complex systems.

Here DFT provides an appealing alternative, being much more versatile as it provides a way to systematically map the many-body problem, with Û, onto a single-body problem without Û. In DFT the key variable is the particle density n(r), which for a normalized Ψ is given by

$$n(\mathbf r) = N\int d^3r_2\cdots\int d^3r_N\,\Psi^*(\mathbf r, \mathbf r_2, \ldots, \mathbf r_N)\,\Psi(\mathbf r, \mathbf r_2, \ldots, \mathbf r_N).$$

This relation can be reversed, i.e. for a given ground-state density n0(r) it is possible, in principle, to calculate the corresponding ground-state wavefunction Ψ0(r1, …, rN). In other words, Ψ0 is a unique functional of n0,[31]

$$\Psi_0 = \Psi[n_0],$$

and consequently the ground-state expectation value of an observable Ô is also a functional of n0:

$$O[n_0] = \langle\Psi[n_0]|\hat O|\Psi[n_0]\rangle.$$

In particular, the ground-state energy is a functional of n0:

$$E_0 = E[n_0] = \langle\Psi[n_0]|\hat T + \hat V + \hat U|\Psi[n_0]\rangle,$$

where the contribution of the external potential ⟨Ψ[n0]|V̂|Ψ[n0]⟩ can be written explicitly in terms of the ground-state density n0:

$$V[n_0] = \int V(\mathbf r)\,n_0(\mathbf r)\,d^3r.$$

More generally, the contribution of the external potential can be written explicitly in terms of the density n,

$$V[n] = \int V(\mathbf r)\,n(\mathbf r)\,d^3r.$$

The functionals T[n] and U[n] are called universal functionals, while V[n] is called a non-universal functional, as it depends on the system under study. Having specified a system, i.e., having specified V̂, one then has to minimize the functional

$$E[n] = T[n] + U[n] + \int V(\mathbf r)\,n(\mathbf r)\,d^3r$$

with respect to n(r), assuming one has reliable expressions for T[n] and U[n]. A successful minimization of the energy functional will yield the ground-state density n0 and thus all other ground-state observables.

The variational problem of minimizing the energy functional E[n] can be solved by applying the Lagrangian method of undetermined multipliers.[32] First, one considers an energy functional that doesn't explicitly have an electron-electron interaction energy term,

$$E_s[n] = \langle\Psi_s[n]|\hat T + \hat V_s|\Psi_s[n]\rangle,$$

where T̂ denotes the kinetic energy operator and V̂s is an external effective potential in which the particles are moving, so that ns(r) ≝ n(r).

Thus, one can solve the so-called Kohn–Sham equations of this auxiliary non-interacting system,

$$\left[-\frac{\hbar^2}{2m}\nabla^2 + V_s(\mathbf r)\right]\phi_i(\mathbf r) = \epsilon_i\,\phi_i(\mathbf r),$$

which yields the orbitals φi that reproduce the density n(r) of the original many-body system

$$n(\mathbf r) \equiv n_s(\mathbf r) = \sum_i^N |\phi_i(\mathbf r)|^2.$$

The effective single-particle potential can be written in more detail as

$$V_s(\mathbf r) = V(\mathbf r) + \int \frac{e^2\,n_s(\mathbf r')}{|\mathbf r-\mathbf r'|}\,d^3r' + V_{XC}[n_s(\mathbf r)],$$

where the second term denotes the so-called Hartree term describing the electron-electron Coulomb repulsion, while the last term VXC is called the exchange-correlation potential. Here, VXC includes all the many-particle interactions. Since the Hartree term and VXC depend on n(r), which depends on the φi, which in turn depend on Vs, the problem of solving the Kohn–Sham equation has to be done in a self-consistent (i.e., iterative) way. Usually one starts with an initial guess for n(r), then calculates the corresponding Vs and solves the Kohn–Sham equations for the φi. From these one calculates a new density and starts again. This procedure is then repeated until convergence is reached. A non-iterative approximate formulation called Harris functional DFT is an alternative approach to this.
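The structure of such a self-consistent loop can be sketched on a toy problem. The example below is not a Kohn–Sham solver: it assumes a hypothetical two-site lattice with two electrons in one doubly occupied orbital, a hopping amplitude t, and an effective potential made of site energies plus a mean-field term U·n_i/2 that stands in for the density-dependent part of the potential. All names and parameter values are illustrative.

```python
import math


def lowest_orbital(a, b, d):
    """Lowest eigenpair of the symmetric matrix [[a, b], [b, d]] (b != 0)."""
    lam = 0.5 * (a + d) - math.sqrt((0.5 * (a - d)) ** 2 + b * b)
    v = (b, lam - a)
    norm = math.hypot(v[0], v[1])
    return lam, (v[0] / norm, v[1] / norm)


def effective_potential(density, site_energies, u):
    """Toy stand-in for the effective potential: external term plus U * n_i / 2."""
    return [e + 0.5 * u * n for e, n in zip(site_energies, density)]


def scf(site_energies, t=1.0, u=1.0, mixing=0.5, tol=1e-10, max_iter=200):
    """Iterate density -> potential -> orbital -> density until it stops changing."""
    density = [1.0, 1.0]  # initial guess: electrons spread evenly
    for iteration in range(1, max_iter + 1):
        v = effective_potential(density, site_energies, u)
        _, c = lowest_orbital(v[0], -t, v[1])  # solve the single-particle problem
        new_density = [2.0 * c[0] ** 2, 2.0 * c[1] ** 2]  # doubly occupied orbital
        change = max(abs(n - o) for n, o in zip(new_density, density))
        if change < tol:
            return new_density, iteration
        # Linear mixing of old and new densities damps oscillations.
        density = [(1.0 - mixing) * o + mixing * n
                   for o, n in zip(density, new_density)]
    raise RuntimeError("SCF did not converge")


density, iterations = scf([0.0, 0.5])
print(density, iterations)
```

Linear mixing of old and new densities is one common way to stabilize the iteration; the mixing fraction used here is an arbitrary choice.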

NOTE: The one-to-one correspondence between electron density and single-particle potential is not so smooth. It contains several kinds of non-analytic structure, and Es[n] contains several kinds of singularities. This may limit the prospects for representing the exchange-correlation functional in a simple form.

Approximations (exchange-correlation functionals)

The major problem with DFT is that the exact functionals for exchange and correlation are not known except for the free electron gas. However, approximations exist which permit the calculation of certain physical quantities quite accurately. In physics the most widely used approximation is the local-density approximation (LDA), where the functional depends only on the density at the coordinate where the functional is evaluated:

$$E_{XC}^{\mathrm{LDA}}[n] = \int \epsilon_{XC}(n)\,n(\mathbf r)\,d^3r.$$

The local spin-density approximation (LSDA) is a straightforward generalization of the LDA to include electron spin:

$$E_{XC}^{\mathrm{LSDA}}[n_\uparrow, n_\downarrow] = \int \epsilon_{XC}(n_\uparrow, n_\downarrow)\,n(\mathbf r)\,d^3r.$$

Highly accurate formulae for the exchange-correlation energy density have been constructed from quantum Monte Carlo simulations of jellium.[33]
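The exchange part of the uniform-gas energy density has a simple closed form, which makes a compact illustration of a local-density functional possible. The sketch below assumes Hartree atomic units, a spin-unpolarized gas with exchange energy per electron −(3/4)(3/π)^(1/3) n^(1/3), and the hydrogen 1s density as a test input; correlation is left out, and the function names and grid settings are hypothetical.

```python
import math

# Exchange constant of the uniform electron gas, Hartree atomic units.
C_X = 0.75 * (3.0 / math.pi) ** (1.0 / 3.0)


def exchange_energy_per_electron(n):
    """Exchange energy per electron of a uniform, spin-unpolarized gas."""
    return -C_X * n ** (1.0 / 3.0)


def lda_exchange_energy(density, r_max=30.0, steps=30000):
    """Integral of eps_x(n(r)) * n(r) over space for a spherical density."""
    h = r_max / steps
    total = 0.0
    for i in range(1, steps + 1):  # the integrand vanishes at r = 0
        r = i * h
        n = density(r)
        weight = 0.5 if i == steps else 1.0
        total += (weight * 4.0 * math.pi * r * r
                  * exchange_energy_per_electron(n) * n)
    return total * h


def hydrogen_density(r):
    """Ground-state electron density of the hydrogen atom in atomic units."""
    return math.exp(-2.0 * r) / math.pi


e_x = lda_exchange_energy(hydrogen_density)

# Closed form of the same integral, using int_0^inf r^2 exp(-a r) dr = 2 / a^3
# with a = 8/3.
e_x_closed = -C_X * 4.0 * math.pi ** (-1.0 / 3.0) * 2.0 / (8.0 / 3.0) ** 3

# Doubling the density everywhere scales the local exchange energy by 2^(4/3).
e_x_doubled = lda_exchange_energy(lambda r: 2.0 * hydrogen_density(r))

print(e_x, e_x_closed, e_x_doubled / e_x)
```

The value depends only on the density at each point, which is the defining feature of the local approximation.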

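Generalized gradient approximations (GGA) are still local but also take into account the gradient of the density at the same coordinate:

$$E_{\rm XC}^{\rm GGA}[n_{\uparrow},n_{\downarrow}]=\int\epsilon_{\rm XC}(n_{\uparrow},n_{\downarrow},\vec{\nabla}n_{\uparrow},\vec{\nabla}n_{\downarrow})\,n(\vec{r})\,\mathrm{d}^{3}r.$$

Using the GGA, very good results for molecular geometries and ground-state energies have been achieved.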
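One common way to build a GGA is to multiply the LDA exchange energy density by an enhancement factor that depends on the reduced gradient s = |∇n| / (2 k_F n), with k_F = (3π² n)^(1/3). The sketch below uses the PBE exchange enhancement factor as an example; PBE is not discussed in the text above and is swapped in purely for illustration. The spin-unpolarized form, the test density and the function names are likewise assumptions.

```python
import numpy as np

KAPPA = 0.804              # PBE exchange parameter
MU = 0.2195149727645171    # PBE exchange parameter

def pbe_enhancement(s):
    """PBE exchange enhancement factor F_x(s); equals 1 when the gradient vanishes."""
    return 1.0 + KAPPA - KAPPA / (1.0 + MU * s**2 / KAPPA)

def exchange_energy(r, n, dn_dr, gradient_corrected=True):
    """Exchange energy of a spherical density n(r), with or without the gradient factor."""
    e_x_lda = -0.75 * (3.0 / np.pi) ** (1.0 / 3.0) * n ** (4.0 / 3.0)
    if gradient_corrected:
        k_f = (3.0 * np.pi**2 * n) ** (1.0 / 3.0)
        s = np.abs(dn_dr) / (2.0 * k_f * n)   # reduced density gradient
        e_x = e_x_lda * pbe_enhancement(s)
    else:
        e_x = e_x_lda
    integrand = e_x * 4.0 * np.pi * r**2
    return float(np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(r)))

if __name__ == "__main__":
    r = np.linspace(0.0, 30.0, 30001)
    n = np.exp(-2.0 * r) / np.pi   # assumed test density
    dn_dr = -2.0 * n               # analytic radial derivative of that density
    print("LDA:", exchange_energy(r, n, dn_dr, gradient_corrected=False))
    print("GGA:", exchange_energy(r, n, dn_dr, gradient_corrected=True))
```

Because F_x(s) lies between 1 and 1 + κ, the gradient-corrected exchange energy is always at least as negative as the LDA value for the same density.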

Meta-GGA functionals, a natural development after the GGA, are potentially more accurate. In its original form, a meta-GGA functional includes the second derivative of the electron density (the Laplacian), whereas the GGA includes only the density and its first derivative in the exchange-correlation potential.

Functionals of this type include, for example, TPSS and the Minnesota functionals. They add a further term to the expansion, so that the functional depends on the density, the gradient of the density and the Laplacian (second derivative) of the density.

Difficulties in expressing the exchange part of the energy can be relieved by including a component of the exact exchange energy calculated from Hartree–Fock theory. Functionals of this type are known as hybrid functionals.
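A simple one-parameter form of such a mixture can be written as follows. This is only an illustrative sketch: the mixing fraction a and the labels HF and DFA (density-functional approximation) are notation introduced here, not taken from the text above.

$$E_{\rm XC}^{\rm hyb}=a\,E_{\rm X}^{\rm HF}+(1-a)\,E_{\rm X}^{\rm DFA}+E_{\rm C}^{\rm DFA},\qquad 0\le a\le 1.$$

For a = 0 the underlying approximate functional is recovered. The PBE0 functional, for example, uses a = 1/4.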

Hohenberg–Kohn theorems

1. If two systems of electrons, one trapped in a potential v_1(r) and the other in v_2(r), have the same ground-state density n(r), then necessarily v_1(r) − v_2(r) = const.

Corollary: the ground-state density uniquely determines the potential and thus all properties of the system, including the many-body wave function. In particular, the "HK" functional, defined as F[n] = T[n] + U[n], is a universal functional of the density (not depending explicitly on the external potential).

2. For any positive integer N and potential v(r), there exists a density functional F[n] such that E_(v,N)[n] = F[n] + ∫ v(r) n(r) d³r attains its minimal value at the ground-state density of N electrons in the potential v(r). The minimal value of E_(v,N)[n] is then the ground-state energy of this system.
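The first theorem is usually motivated by a reductio ad absurdum argument, sketched here for non-degenerate ground states. The symbols below are introduced only for this sketch: two external potentials v_1 and v_2, Hamiltonians Ĥ_1 and Ĥ_2 that differ only in the external potential, with ground states Ψ_1, Ψ_2 and ground-state energies E_1, E_2. Suppose v_1 and v_2 differ by more than a constant, so that Ψ_1 ≠ Ψ_2, and yet both give the same density n. The variational principle then gives

$$E_{1}<\langle\Psi_{2}|\hat{H}_{1}|\Psi_{2}\rangle=E_{2}+\int\left[v_{1}(\vec{r})-v_{2}(\vec{r})\right]n(\vec{r})\,\mathrm{d}^{3}r,$$

$$E_{2}<\langle\Psi_{1}|\hat{H}_{2}|\Psi_{1}\rangle=E_{1}+\int\left[v_{2}(\vec{r})-v_{1}(\vec{r})\right]n(\vec{r})\,\mathrm{d}^{3}r.$$

Adding the two inequalities yields E_1 + E_2 < E_1 + E_2, a contradiction, so the two potentials can differ at most by a constant.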

Pseudo-potentials

The many-electron Schrödinger equation can be greatly simplified if the electrons are divided into two groups: valence electrons and inner core electrons. The electrons in the inner shells are strongly bound and do not play a significant role in the chemical binding of atoms; they also partially screen the nucleus, thus forming with the nucleus an almost inert core. Binding properties are almost completely due to the valence electrons, especially in metals and semiconductors. This separation suggests that inner electrons can be ignored in a large number of cases, thereby reducing the atom to an ionic core that interacts with the valence electrons. The use of an effective interaction, a pseudopotential, that approximates the potential felt by the valence electrons, was first proposed by Fermi in 1934 and Hellmann in 1935. In spite of the simplification pseudo-potentials introduce in calculations, they remained forgotten until the late 1950s.

Ab initio Pseudo-potentials

A crucial step toward more realistic pseudo-potentials was taken by Topp and Hopfield and more recently Cronin, who suggested that the pseudo-potential should be adjusted so that it describes the valence charge density accurately. Based on that idea, modern pseudo-potentials are obtained by inverting the free-atom Schrödinger equation for a given reference electronic configuration and forcing the pseudo wave-functions to coincide with the true valence wave-functions beyond a certain distance r_l. The pseudo wave-functions are also forced to have the same norm as the true valence wave-functions, so the two conditions can be written as

$$R_{l}^{\rm PP}(r)=R_{nl}^{\rm AE}(r)\quad\text{for }r>r_{l},\qquad\int_{0}^{r_{l}}\left|R_{l}^{\rm PP}(r)\right|^{2}r^{2}\,\mathrm{d}r=\int_{0}^{r_{l}}\left|R_{nl}^{\rm AE}(r)\right|^{2}r^{2}\,\mathrm{d}r,$$

where R_l(r) is the radial part of the wavefunction with angular momentum l, and PP and AE denote, respectively, the pseudo wave-function and the true (all-electron) wave-function. The index n in the true wave-functions denotes the valence level. The distance beyond which the true and the pseudo wave-functions are equal, r_l, is also l-dependent.
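The two requirements stated above (agreement beyond a matching radius, and equal norm inside it) can be checked numerically for tabulated radial functions. The sketch below is a hypothetical helper, not part of any pseudo-potential code. It assumes both radial functions are sampled on the same grid, writes R_pp and R_ae for the pseudo and all-electron radial functions and r_l for the matching radius, and uses the hydrogen 2s radial function only as convenient test data.

```python
import numpy as np

def radial_norm(r, R, r_max):
    """Integral of |R(r)|^2 r^2 from 0 to r_max (trapezoidal rule on grid points <= r_max)."""
    inside = r <= r_max
    f = np.abs(R[inside]) ** 2 * r[inside] ** 2
    return float(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(r[inside])))

def is_norm_conserving(r, R_pp, R_ae, r_l, tol=1e-6):
    """True if R_pp equals R_ae beyond r_l and both carry the same norm inside r_l."""
    outside = r > r_l
    matches_outside = np.allclose(R_pp[outside], R_ae[outside], rtol=0.0, atol=tol)
    same_norm = abs(radial_norm(r, R_pp, r_l) - radial_norm(r, R_ae, r_l)) < tol
    return bool(matches_outside and same_norm)

if __name__ == "__main__":
    r = np.linspace(0.0, 20.0, 4001)
    # Hydrogen 2s radial function in atomic units (test data only).
    R_ae = (2.0 - r) * np.exp(-r / 2.0) / (2.0 * np.sqrt(2.0))
    r_l = 3.0
    # A crude nodeless trial function: constant inside r_l, exact outside.
    R_flat = np.where(r <= r_l, np.interp(r_l, r, R_ae), R_ae)
    print(is_norm_conserving(r, R_ae, R_ae, r_l))    # trivially satisfied
    print(is_norm_conserving(r, R_flat, R_ae, r_l))  # matches outside, norm differs
```

The flat trial function removes the node but changes the charge enclosed within r_l, which is precisely what the norm condition is meant to rule out.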

- ^ (Parr & Yang 1989, p. 246, Eq. A.2).

- ^ (Parr & Yang 1989, p. 246, Eq. A.1).

- ^ a b (Parr & Yang 1989, p. 246).

- ^ (Parr & Yang 1989, p. 247, Eq. A.3).

- ^ (Parr & Yang 1989, p. 247, Eq. A.4).

- ^ (Greiner & Reinhardt 1996, p. 38, Eq. 7).

- ^ (Parr & Yang 1989, p. 251, Eq. A.34).

- ^ (Parr & Yang 1989, p. 247, Eq. A.6).

- ^ (Parr & Yang 1989, p. 248, Eq. A.11).

- ^ (Parr & Yang 1989, p. 247, Eq. A.9).

- ^ (Giaquinta & Hildebrandt 1996, p. 18)

- ^ (Gelfand & Fomin 2000, p. 28)

- ^ (Parr & Yang 1989, p. 47)

- ^ March, N. H. (1992). Electron Density Theory of Atoms and Molecules. Academic Press. p. 24. ISBN 0-12-470525-1.

- ^ March 1992, p.24

- ^ Parr and Yang 1989, p.47

- ^ March 1983, p. 5, Eq. 11

- ^ March 1983, p. 6, Eq. 15

- ^ Teller, E. (1962). "On the Stability of molecules in the Thomas–Fermi theory". Rev. Mod. Phys. 34 (4): 627–631. Bibcode:1962RvMP...34..627T. doi:10.1103/RevModPhys.34.627.

- ^ Balàzs, N. (1967). "Formation of stable molecules within the statistical theory of atoms". Phys. Rev. 156 (1): 42–47. Bibcode:1967PhRv..156...42B. doi:10.1103/PhysRev.156.42.

- ^ Lieb, Elliott H.; Simon, Barry (1977). "The Thomas–Fermi theory of atoms, molecules and solids". Adv. In Math. 23 (1): 22–116. doi:10.1016/0001-8708(77)90108-6.

- ^ Parr and Yang 1989, pp. 114–115

- ^ Parr and Yang 1989, p.127

- ^ Weizsäcker, C. F. v. (1935). "Zur Theorie der Kernmassen". Zeitschrift für Physik. 96 (7–8): 431–58. Bibcode:1935ZPhy...96..431W. doi:10.1007/BF01337700.

- ^ http://www.tcm.phy.cam.ac.uk/~pdh1001/thesis/node14.html

- ^ Hinchliffe, Alan (2000). Modelling Molecular Structures (2nd ed.). Baffins Lane, Chichester, West Sussex PO19 1UD, England: John Wiley & Sons Ltd. p. 186. ISBN 0-471-48993-X.

- ^ a b Szabo, A.; Ostlund, N. S. (1996). Modern Quantum Chemistry. Mineola, New York: Dover Publishing. ISBN 0-486-69186-1.

- ^ a b c Levine, Ira N. (1991). Quantum Chemistry (4th ed.). Englewood Cliffs, New Jersey: Prentice Hall. p. 403. ISBN 0-205-12770-3.

- ^ Callaway, J. (1974). Quantum Theory of the Solid State. New York: Academic Press. ISBN 9780121552039.

- ^ Levine, I.N. (1991) Quantum Chemistry (4th ed., Prentice-Hall), p.403

- ^ Cite error: the named reference Hohenberg1964 was invoked but never defined.

- ^ Kohn, W.; Sham, L. J. (1965). "Self-consistent equations including exchange and correlation effects". Physical Review. 140 (4A): A1133–A1138. Bibcode:1965PhRv..140.1133K. doi:10.1103/PhysRev.140.A1133.

- ^ John P. Perdew, Adrienn Ruzsinszky, Jianmin Tao, Viktor N. Staroverov, Gustavo Scuseria and Gábor I. Csonka (2005). "Prescriptions for the design and selection of density functional approximations: More constraint satisfaction with fewer fits". Journal of Chemical Physics. 123 (6): 062201. Bibcode:2005JChPh.123f2201P. doi:10.1063/1.1904565. PMID 16122287.


![{\displaystyle f\mapsto I[f]=\int _{\Omega }H(f(x),f'(x),\ldots )\;\mu ({\mbox{d}}x)}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/de0782daea9f380773ccc0ab7943ed653494d050)

![{\displaystyle F(y)={\frac {\int _{x_{0}}^{x_{1}}y(x)\;\mathrm {d} x}{\int _{x_{0}}^{x_{1}}(1+[y(x)]^{2})\;\mathrm {d} x}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/ea9d2c4b279d2506fa57e56da046781492b83b4d)

![{\displaystyle {\begin{aligned}\int {\frac {\delta F}{\delta \rho (x)}}\phi (x)dx&=\lim _{\varepsilon \to 0}{\frac {F[\rho +\varepsilon \phi ]-F[\rho ]}{\varepsilon }}\\&=\left[{\frac {d}{d\epsilon }}F[\rho +\epsilon \phi ]\right]_{\epsilon =0},\end{aligned}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/024c5af5e0e48b8d958023927407d6b73afe1117)

![{\displaystyle \displaystyle {\frac {\delta F[f(\rho )]}{\delta \rho (x)}}={\frac {\delta F[f(\rho )]}{\delta f(\rho (x))}}\ {\frac {df(\rho (x))}{d\rho (x)}}\ ,}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/0792e75275e3d142a80b746e2694ea85f1621096)

![{\displaystyle {\frac {\delta f(F[\rho ])}{\delta \rho (x)}}={\frac {df(F[\rho ])}{dF[\rho ]}}\ {\frac {\delta F[\rho ]}{\delta \rho (x)}}\,.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/fd5fa9e7db49e1b1f185c5792b78813a87d0f82b)

![{\displaystyle F[\rho ]=\int f({\boldsymbol {r}},\rho ({\boldsymbol {r}}),\nabla \rho ({\boldsymbol {r}}))\,d{\boldsymbol {r}},}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/984500e18fa20fadb9f03147b92b046a166aafc7)

![{\displaystyle {\begin{aligned}\int {\frac {\delta F}{\delta \rho ({\boldsymbol {r}})}}\,\phi ({\boldsymbol {r}})\,d{\boldsymbol {r}}&=\left[{\frac {d}{d\varepsilon }}\int f({\boldsymbol {r}},\rho +\varepsilon \phi ,\nabla \rho +\varepsilon \nabla \phi )\,d{\boldsymbol {r}}\right]_{\varepsilon =0}\\&=\int \left({\frac {\partial f}{\partial \rho }}\,\phi +{\frac {\partial f}{\partial \nabla \rho }}\cdot \nabla \phi \right)d{\boldsymbol {r}}\\&=\int \left[{\frac {\partial f}{\partial \rho }}\,\phi +\nabla \cdot \left({\frac {\partial f}{\partial \nabla \rho }}\,\phi \right)-\left(\nabla \cdot {\frac {\partial f}{\partial \nabla \rho }}\right)\phi \right]d{\boldsymbol {r}}\\&=\int \left[{\frac {\partial f}{\partial \rho }}\,\phi -\left(\nabla \cdot {\frac {\partial f}{\partial \nabla \rho }}\right)\phi \right]d{\boldsymbol {r}}\\&=\int \left({\frac {\partial f}{\partial \rho }}-\nabla \cdot {\frac {\partial f}{\partial \nabla \rho }}\right)\phi ({\boldsymbol {r}})\ d{\boldsymbol {r}}\,.\end{aligned}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/364480cb1cb7bbad967750d4f4c2b2baa061f134)

![{\displaystyle T_{\mathrm {TF} }[\rho ]=C_{\mathrm {F} }\int \rho ^{5/3}(\mathbf {r} )\,d\mathbf {r} \,.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/805e085a9d15321704c17fcea9c5c2f3a1f8924b)

![{\displaystyle V[\rho ]=\int {\frac {\rho ({\boldsymbol {r}})}{|{\boldsymbol {r}}|}}\ d{\boldsymbol {r}}.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/2c1593ae52b426aa72244fda7d98ac6aab5a6fd4)

![{\displaystyle {\begin{aligned}\int {\frac {\delta V}{\delta \rho ({\boldsymbol {r}})}}\ \phi ({\boldsymbol {r}})\ d{\boldsymbol {r}}&{}=\left[{\frac {d}{d\varepsilon }}\int {\frac {\rho ({\boldsymbol {r}})+\varepsilon \phi ({\boldsymbol {r}})}{|{\boldsymbol {r}}|}}\ d{\boldsymbol {r}}\right]_{\varepsilon =0}\\&{}=\int {\frac {1}{|{\boldsymbol {r}}|}}\,\phi ({\boldsymbol {r}})\ d{\boldsymbol {r}}\,.\end{aligned}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/c5feda7981e551ab3ef736601c97d676b5c93eeb)

![{\displaystyle J[\rho ]={\frac {1}{2}}\iint {\frac {\rho (\mathbf {r} )\rho (\mathbf {r} ')}{\vert \mathbf {r} -\mathbf {r} '\vert }}\,d\mathbf {r} d\mathbf {r} '\,.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/2a6d16f5ade6b1bc0c8c78a03ff33b386221d068)

![{\displaystyle {\begin{aligned}\int {\frac {\delta J}{\delta \rho ({\boldsymbol {r}})}}\phi ({\boldsymbol {r}})d{\boldsymbol {r}}&{}=\left[{\frac {d\ }{d\epsilon }}\,J[\rho +\epsilon \phi ]\right]_{\epsilon =0}\\&{}=\left[{\frac {d\ }{d\epsilon }}\,\left({\frac {1}{2}}\iint {\frac {[\rho ({\boldsymbol {r}})+\epsilon \phi ({\boldsymbol {r}})]\,[\rho ({\boldsymbol {r}}')+\epsilon \phi ({\boldsymbol {r}}')]}{\vert {\boldsymbol {r}}-{\boldsymbol {r}}'\vert }}\,d{\boldsymbol {r}}d{\boldsymbol {r}}'\right)\right]_{\epsilon =0}\\&{}={\frac {1}{2}}\iint {\frac {\rho ({\boldsymbol {r}}')\phi ({\boldsymbol {r}})}{\vert {\boldsymbol {r}}-{\boldsymbol {r}}'\vert }}\,d{\boldsymbol {r}}d{\boldsymbol {r}}'+{\frac {1}{2}}\iint {\frac {\rho ({\boldsymbol {r}})\phi ({\boldsymbol {r}}')}{\vert {\boldsymbol {r}}-{\boldsymbol {r}}'\vert }}\,d{\boldsymbol {r}}d{\boldsymbol {r}}'\\\end{aligned}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/604d9921f18f63a32115a37490f300325c7fafcb)

![{\displaystyle {\frac {\delta ^{2}J[\rho ]}{\delta \rho (\mathbf {r} ')\delta \rho (\mathbf {r} )}}={\frac {\partial }{\partial \rho (\mathbf {r} ')}}\left({\frac {\rho (\mathbf {r} ')}{\vert \mathbf {r} -\mathbf {r} '\vert }}\right)={\frac {1}{\vert \mathbf {r} -\mathbf {r} '\vert }}.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/2e5d2633d38d32e3ec5d142181697543d6b17aea)

![{\displaystyle T_{\mathrm {W} }[\rho ]={\frac {1}{8}}\int {\frac {\nabla \rho (\mathbf {r} )\cdot \nabla \rho (\mathbf {r} )}{\rho (\mathbf {r} )}}d\mathbf {r} =\int t_{\mathrm {W} }\ d\mathbf {r} \,,}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/0eafe31ae78208f4c75df2c3147f18c61ed02e29)

![{\displaystyle {\begin{aligned}H[p(x)]=-\sum _{x}p(x)\log p(x)\end{aligned}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/96861966ca600ab501ab12eb13a187562581cff1)

![{\displaystyle {\begin{aligned}\sum _{x}{\frac {\delta H}{\delta p(x)}}\,\phi (x)&{}=\left[{\frac {d}{d\epsilon }}H[p(x)+\epsilon \phi (x)]\right]_{\epsilon =0}\\&{}=\left[-\,{\frac {d}{d\varepsilon }}\sum _{x}\,[p(x)+\varepsilon \phi (x)]\ \log[p(x)+\varepsilon \phi (x)]\right]_{\varepsilon =0}\\&{}=\displaystyle -\sum _{x}\,[1+\log p(x)]\ \phi (x)\,.\end{aligned}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/7a13f6bab84e66aadc5f4d0b41d6af651a2825b7)

![{\displaystyle F[\varphi (x)]=e^{\int \varphi (x)g(x)dx}.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/8cf62da868a4878d3d5c56043e0e7947d1a3789f)

![{\displaystyle {\begin{aligned}{\frac {\delta F[\varphi (x)]}{\delta \varphi (y)}}&{}=\lim _{\varepsilon \to 0}{\frac {F[\varphi (x)+\varepsilon \delta (x-y)]-F[\varphi (x)]}{\varepsilon }}\\&{}=\lim _{\varepsilon \to 0}{\frac {e^{\int (\varphi (x)+\varepsilon \delta (x-y))g(x)dx}-e^{\int \varphi (x)g(x)dx}}{\varepsilon }}\\&{}=e^{\int \varphi (x)g(x)dx}\lim _{\varepsilon \to 0}{\frac {e^{\varepsilon \int \delta (x-y)g(x)dx}-1}{\varepsilon }}\\&{}=e^{\int \varphi (x)g(x)dx}\lim _{\varepsilon \to 0}{\frac {e^{\varepsilon g(y)}-1}{\varepsilon }}\\&{}=e^{\int \varphi (x)g(x)dx}g(y).\end{aligned}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/ec4bb807430d52bf84582815526969c5182132bb)

![{\displaystyle {\frac {\delta F[\varphi (x)]}{\delta \varphi (y)}}=g(y)F[\varphi (x)].}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/e78f30af55466f2e117b3dc25af74e86a2db308c)

![{\displaystyle \rho ({\boldsymbol {r}})=F[\rho ]=\int \rho ({\boldsymbol {r}}')\delta ({\boldsymbol {r}}-{\boldsymbol {r}}')\,d{\boldsymbol {r}}'.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/d4a462a8d7648c751791e624f6bb5abdfa985733)

![{\displaystyle {\begin{aligned}{\frac {\delta \rho ({\boldsymbol {r}})}{\delta \rho ({\boldsymbol {r}}')}}\equiv {\frac {\delta F}{\delta \rho ({\boldsymbol {r}}')}}&={\frac {\partial \ \ }{\partial \rho ({\boldsymbol {r}}')}}\,[\rho ({\boldsymbol {r}}')\delta ({\boldsymbol {r}}-{\boldsymbol {r}}')]=\delta ({\boldsymbol {r}}-{\boldsymbol {r}}').\end{aligned}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/2fac02564f0a51b98bd37c343c1db341ea341628)

![{\displaystyle J[f]=\int \limits _{a}^{b}L[\,x,f(x),f\,'(x)\,]\,dx\ ,}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/8d3655b3806dcfd1ca393bc681724c7d89391d02)

![{\displaystyle {\frac {\delta F[\rho (x)]}{\delta \rho (y)}}=\lim _{\varepsilon \to 0}{\frac {F[\rho (x)+\varepsilon \delta (x-y)]-F[\rho (x)]}{\varepsilon }}.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/b01a2033e8c8afc08ec56de9981c0e29042885f6)

![{\displaystyle F[\rho (x)+\varepsilon f(x)]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/b979a338aa9020b6c02e0bcae441aaa605ec2a20)

![{\displaystyle F[\rho (x)+\varepsilon \delta (x-y)]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/659a52b75b432c570f6c2a7415fc5b4334bf3618)

![{\displaystyle {\begin{aligned}t_{TF}[n]=\int {\frac {p^{2}}{2m_{e}}}\ n({\vec {r}})\ F_{\vec {r}}(p)\ dp=n({\vec {r}})\int _{0}^{p_{f}({\vec {r}})}{\frac {p^{2}}{2m_{e}}}\ \ {\frac {4\pi p^{2}}{{\frac {4}{3}}\pi p_{f}^{3}({\vec {r}})}}\ dp=C_{F}\ [n({\vec {r}})]^{5/3}\end{aligned}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/5e20c647d11d5ec4c9c2cabb107a915ea4bbbca8)

![{\displaystyle T_{TF}[n]=C_{F}\int [n({\vec {r}})]^{5/3}\ d^{3}r\ .}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/341c780e5016559d9612fe48c179b1380bc94ab0)

![{\displaystyle {\begin{aligned}E&=T\ +\ U_{eN}\ +\ U_{ee}\\&=C_{F}\int [n({\vec {r}})]^{5/3}\ d^{3}r\ +\int n({\vec {r}})\ V_{N}({\vec {r}})\ d^{3}r\ +\ {\frac {1}{2}}\ e^{2}\int {\frac {n({\vec {r}})\ n({\vec {r}}\,')}{\left\vert {\vec {r}}-{\vec {r}}\,'\right\vert }}\ d^{3}r\ d^{3}r'\\\end{aligned}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/08d59b5a09a34f890d2e42e883d073c7b2751edc)

![{\displaystyle \left[P,H\right]=0}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/645807b104e10f8742b412d50ecb21975d94bc61)

![{\displaystyle {\begin{aligned}\Psi _{n_{1}n_{2}\cdots n_{N}}^{(S)}(x_{1},x_{2},\cdots x_{N})\equiv \langle x_{1}x_{2}\cdots x_{N}|n_{1}n_{2}\cdots n_{N};S\rangle \\[10pt]={\sqrt {\frac {\prod _{j}n_{j}!}{N!}}}\sum _{p}^{N!}\psi _{(p,1)}(x_{1})\psi _{(p,2)}(x_{2})\cdots \psi _{(p,N)}(x_{N})\end{aligned}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/a79ae84c654189cb097c13d69c52ba0e275f6852)

![{\displaystyle {\begin{aligned}\Psi _{n_{1}n_{2}\cdots n_{N}}^{(A)}(x_{1},x_{2},\cdots x_{N})\equiv \langle x_{1}x_{2}\cdots x_{N};A|n_{1}n_{2}\cdots n_{N};A\rangle \\[10pt]={\frac {1}{\sqrt {N!}}}\sum _{p}^{N!}\mathrm {sgn} (p)\psi _{(p,1)}(x_{1})\psi _{(p,2)}(x_{2})\cdots \psi _{(p,N)}(x_{N})\end{aligned}}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/2cbbedd6dfb51541c6add4a4077d0573e5bd92ab)

![{\displaystyle {\hat {F}}(1)={\hat {H}}^{\text{core}}(1)+\sum _{j=1}^{N/2}[2{\hat {J}}_{j}(1)-{\hat {K}}_{j}(1)]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/6d3e0a1ce3b1b528f799d01f7eda355f71f12e5c)

![{\displaystyle {\hat {F}}(i)={\hat {h}}(i)+\sum _{j=1}^{n/2}[2{\hat {J}}_{j}(i)-{\hat {K}}_{j}(i)]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/f13a23b0cc5257a793505f833d2984a2606b4ac0)

![{\displaystyle {\hat {H}}\Psi =\left[{\hat {T}}+{\hat {V}}+{\hat {U}}\right]\Psi =\left[\sum _{i}^{N}\left(-{\frac {\hbar ^{2}}{2m_{i}}}\nabla _{i}^{2}\right)+\sum _{i}^{N}V({\vec {r}}_{i})+\sum _{i<j}^{N}U({\vec {r}}_{i},{\vec {r}}_{j})\right]\Psi =E\Psi }](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/a3d9b9f58a687a0d965b1fa0218633c129aae9c6)

![{\displaystyle \,\!\Psi _{0}=\Psi [n_{0}]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/81ebfe9237ee0e530a77c187ae5160242ece6201)

![{\displaystyle O[n_{0}]=\left\langle \Psi [n_{0}]\left|{\hat {O}}\right|\Psi [n_{0}]\right\rangle .}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/48cdc3fe972d03d3ce0afab8a93f9abbcba03f2e)

![{\displaystyle E_{0}=E[n_{0}]=\left\langle \Psi [n_{0}]\left|{\hat {T}}+{\hat {V}}+{\hat {U}}\right|\Psi [n_{0}]\right\rangle }](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/ee9c80c3d10e99d151916e0f534cdfe4e9db383e)

![{\displaystyle \left\langle \Psi [n_{0}]\left|{\hat {V}}\right|\Psi [n_{0}]\right\rangle }](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/b1fb1af3716b88e2314c376f648728212b2ac69a)

![{\displaystyle V[n_{0}]=\int V({\vec {r}})n_{0}({\vec {r}}){\rm {d}}^{3}r.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/db6c917508f971e89917bfc9cf9093867028250f)

![{\displaystyle V[n]=\int V({\vec {r}})n({\vec {r}}){\rm {d}}^{3}r.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/2a8915bba20edc71dfe6f0bcefd82c1555cc559a)

![{\displaystyle \,\!T[n]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/1c682f2f35546fdc5ab3d61f3bde8038cc6a74e1)

![{\displaystyle \,\!U[n]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/3aa2cc5c16a5adb92877ce9bd1644334e5d494b5)

![{\displaystyle \,\!V[n]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/2740d9d9e31fdb9ae83431a367e1d6b77ef1922c)

![{\displaystyle E[n]=T[n]+U[n]+\int V({\vec {r}})n({\vec {r}}){\rm {d}}^{3}r}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/ed2983cfde112e3b96368068ad9a2e2468751614)

![{\displaystyle \,\!E[n]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/d9e493cf400fd32298acf14c71617ecc7abf9ac0)

![{\displaystyle E_{s}[n]=\left\langle \Psi _{s}[n]\left|{\hat {T}}+{\hat {V}}_{s}\right|\Psi _{s}[n]\right\rangle }](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/ad2ba3c7b0e9f9505099f41b2e121e7d6e4f2a20)

![{\displaystyle \left[-{\frac {\hbar ^{2}}{2m}}\nabla ^{2}+V_{s}({\vec {r}})\right]\phi _{i}({\vec {r}})=\epsilon _{i}\phi _{i}({\vec {r}})}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/30f066b2d650a907fde567c7c38031594afffa4c)

![{\displaystyle V_{s}({\vec {r}})=V({\vec {r}})+\int {\frac {e^{2}n_{s}({\vec {r}}\,')}{|{\vec {r}}-{\vec {r}}\,'|}}{\rm {d}}^{3}r'+V_{\rm {XC}}[n_{s}({\vec {r}})]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/d51e39aed92d393c8df73e33771211f9b33edc42)

![{\displaystyle E_{s}[n]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/99db680118c17156d733f9541558869ef398e75b)

![{\displaystyle E_{\rm {XC}}^{\rm {LDA}}[n]=\int \epsilon _{\rm {XC}}(n)n({\vec {r}}){\rm {d}}^{3}r.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/e3df6a13003cd64dcb03aa0658f562abee7d7103)

![{\displaystyle E_{\rm {XC}}^{\rm {LSDA}}[n_{\uparrow },n_{\downarrow }]=\int \epsilon _{\rm {XC}}(n_{\uparrow },n_{\downarrow })n({\vec {r}}){\rm {d}}^{3}r.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/1bfb441fa84db02a1d47e9c18a81d42c8826a2ff)

![{\displaystyle E_{XC}^{\rm {GGA}}[n_{\uparrow },n_{\downarrow }]=\int \epsilon _{XC}(n_{\uparrow },n_{\downarrow },{\vec {\nabla }}n_{\uparrow },{\vec {\nabla }}n_{\downarrow })n({\vec {r}}){\rm {d}}^{3}r.}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/c2e259b6f76fb617c10a4e9337f0d7bfed2b4926)

![{\displaystyle F[n]=T[n]+U[n]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/7a1cf5994dfb8385d84b2476afe39524390bef4b)

![{\displaystyle F[n]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/2ffefdbddd63e319908aa7e0825bdf9f50f3e6f5)

![{\displaystyle E_{(v,N)}[n]=F[n]+\int {v({\vec {r}})n({\vec {r}})d^{3}r}}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/0453fa6df09999b88b342e918c5fb972e29d1a2b)

![{\displaystyle E_{(v,N)}[n]}](https://wikimedia.riteme.site/api/rest_v1/media/math/render/svg/541fabeba3cf3b9526872f32873f2c09646d9cee)