Law of effect

The law of effect, or Thorndike's law, is a psychology principle advanced by Edward Thorndike in 1898 on the matter of behavioral conditioning (not then formulated as such) which states that "responses that produce a satisfying effect in a particular situation become more likely to occur again in that situation, and responses that produce a discomforting effect become less likely to occur again in that situation."[1]

This notion closely parallels evolutionary theory: if a certain trait provides a reproductive advantage, that trait will persist.[2] The terms "satisfying" and "dissatisfying" appearing in the definition of the law of effect were eventually replaced by the terms "reinforcing" and "punishing" when operant conditioning became known. "Satisfying" and "dissatisfying" conditions are determined behaviorally, and they cannot be accurately predicted in advance, because what one animal finds satisfying or dissatisfying may differ from what another does. The newer terms, "reinforcing" and "punishing," are used differently in psychology than they are colloquially: something that reinforces a behavior makes it more likely that the behavior will occur again, and something that punishes a behavior makes it less likely that the behavior will occur again.[3]

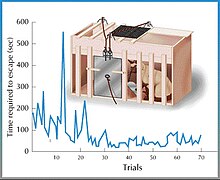

Thorndike's law of effect rejects the ideas in George Romanes' book Animal Intelligence, on the grounds that anecdotal evidence is weak and typically not useful. The book stated that animals, like humans, think things through when dealing with a new environment or situation. Instead, Thorndike hypothesized that animals, to understand their physical environment, must physically interact with it using trial and error until a successful result is obtained. This is illustrated in his cat experiment, in which a cat is placed in a puzzle box and eventually learns, by interacting with the environment of the box, how to escape.[4]

History

This principle, discussed early on by Lloyd Morgan, is usually associated with the connectionism of Edward Thorndike, who said that if an association is followed by a "satisfying state of affairs" it will be strengthened and if it is followed by an "annoying state of affairs" it will be weakened.[5][6]

The modern version of the law of effect is conveyed by the notion of reinforcement as it is found in operant conditioning. The essential idea is that behavior can be modified by its consequences, as Thorndike found in his famous experiments with hungry cats in puzzle boxes. The cat was placed in a box that could be opened if the cat pressed a lever or pulled a loop. Thorndike noted the amount of time it took the cat to free itself on successive trials in the box. He discovered that during the first few trials the cat would respond in many ineffective ways, such as scratching at the door or the ceiling, finally freeing itself with the press or pull by trial-and-error. With each successive trial, it took the cat, on average, less and less time to escape. Thus, in modern terminology, the correct response was reinforced by its consequence, release from the box.[7]

Definition

The law of effect is the principle that a pleasing after-effect strengthens the action that produced it.[8]

The law of effect was published by Edward Thorndike in 1905. It states that when an S-R association is established in instrumental conditioning between the instrumental response and the contextual stimuli that are present, the response is reinforced, and the S-R association alone is responsible for the occurrence of that behavior. Simply put, once the stimulus and response are associated, the stimulus is likely to evoke the response even when the reinforcing outcome is no longer present. The law holds that responses that produce a satisfying or pleasant state of affairs in a particular situation are more likely to occur again in a similar situation. Conversely, responses that produce a discomforting, annoying or unpleasant effect are less likely to occur again in that situation.

Psychologists have been interested in the factors that are important in behavior change and control since psychology emerged as a discipline. One of the first principles associated with learning and behavior was the Law of Effect, which states that behaviors that lead to satisfying outcomes are likely to be repeated, whereas behaviors that lead to undesired outcomes are less likely to recur.[9]

Thorndike emphasized the importance of the situation in eliciting a response: the cat would not make the lever-pressing movement if it were not in the puzzle box but merely in a place where the response had never been reinforced. The situation involves not just the cat's location but also the stimuli it is exposed to, for example, hunger and the desire for freedom. The cat recognizes the inside of the box, the bars, and the lever, and remembers what it needs to do to produce the correct response. This shows that learning and the law of effect are context-specific.

In an influential paper, R. J. Herrnstein (1970)[10] proposed a quantitative relationship between response rate (B) and reinforcement rate (Rf):

B = k Rf / (Rf0 + Rf)

where k and Rf0 are constants. Herrnstein proposed that this formula, which he derived from the matching law he had observed in studies of concurrent schedules of reinforcement, should be regarded as a quantification of the law of effect. While the qualitative law of effect may be a tautology, this quantitative version is not.
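The shape of this relationship can be seen in a minimal Python sketch. The function name `response_rate` and the numerical values chosen for k and Rf0 are illustrative assumptions, not figures from Herrnstein's paper; only the formula itself comes from the text above.

```python
def response_rate(rf: float, k: float, rf0: float) -> float:
    """Herrnstein's hyperbola: B = k * Rf / (Rf0 + Rf).

    rf  -- reinforcement rate (Rf), must be non-negative
    k   -- constant; the response rate B approaches k as Rf grows
    rf0 -- constant (Rf0), must be positive; B equals k / 2 when Rf == Rf0
    """
    if rf < 0:
        raise ValueError("reinforcement rate must be non-negative")
    if rf0 <= 0:
        raise ValueError("rf0 must be positive")
    return k * rf / (rf0 + rf)


# Hypothetical constants, chosen only to illustrate the curve.
K = 100.0
RF0 = 20.0

# Tabulate B for a range of reinforcement rates.
for rf in (0, 10, 20, 40, 80, 320):
    print(f"Rf = {rf:>3}  ->  B = {response_rate(rf, K, RF0):.1f}")
```

With these assumed constants, the response rate is zero without reinforcement, reaches half of k when Rf equals Rf0, and then rises ever more slowly toward k. Because the formula makes specific numerical predictions of this kind, it can be tested against data.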

Example

An example is often drawn from drug addiction. When a person uses a substance for the first time and experiences a positive outcome, they are likely to repeat the behavior because of the reinforcing consequence. Over time, the person's nervous system also develops a tolerance to the drug. Thus only an increased dosage will provide the same satisfaction, which makes continued use dangerous for the user.[11]

Thorndike's Law of Effect can be compared to Darwin's theory of natural selection, in which successful organisms are more likely to prosper and survive to pass on their genes to the next generation, while the weaker, unsuccessful organisms are gradually replaced and "stamped out". It can be said that the environment selects the "fittest" behavior for a situation, stamping out any unsuccessful behaviors, in the same way it selects the "fittest" individuals of a species. In an experiment that Thorndike conducted, he placed a hungry cat inside a "puzzle box", from which the animal could escape and reach the food only once it could operate the latch of the door. At first the cat would scratch and claw in order to find a way out; then, by chance, it would activate the latch and open the door. On successive trials, the behavior of the animal would become more habitual, to the point where the animal would operate the latch without hesitation. The occurrence of the favorable outcome, reaching the food source, strengthens the response that produced it.

Colwill and Rescorla, for example, had all rats work toward the goal of obtaining food pellets and liquid sucrose in consistent sessions on identical variable-interval schedules.[12]

Influence

The law of effect laid the groundwork for psychologist B. F. Skinner's work, almost half a century later, on the principles of operant conditioning, "a learning process by which the effect, or consequence, of a response influences the future rate of production of that response."[1] Skinner would later use an updated version of Thorndike's puzzle box, called the operant chamber or Skinner box, which has contributed immensely to the modern understanding of the law of effect and how it relates to operant conditioning. It allows researchers to study the behavior of small organisms in a controlled environment.

An example of Thorndike’s Law of Effect in a child’s behavior could be the child receiving praise and a star sticker for tidying up their toys. The positive reinforcement (praise and sticker) encourages the repetition of the behavior (cleaning up), illustrating the Law of Effect in action.

References

- Gray, Peter. Psychology. 6th ed. New York: Worth. pp. 108–109.

- Schacter, Gilbert, Wegner. (2011). Psychology, Second Edition. New York: Worth Publishers.

- Mazur, J. E. (2013). "Basic Principles of Operant Conditioning." Learning and Behavior (7th ed., pp. 101–126). Pearson.

- Mazur, J. E. (2013). "Basic Principles of Operant Conditioning." Learning and Behavior (7th ed., pp. 101–126). Pearson.

- Thorndike, E. L. (1898, 1911). "Animal Intelligence: An Experimental Study of the Associative Processes in Animals." Psychological Monographs #8.

- A. Charles Catania. "Thorndike's Legacy: Learning, Selection, and the Law of Effect", pp. 425–426. University of Maryland Baltimore.

- Connectionism. Thorndike, Edward. Retrieved Dec 10, 2010.

- Boring, Edwin. Science. 1. 77. New York: American Association for the Advancement of Science, 2005. 307. Web.

- "Law of Effect". eNotes.com. Retrieved 2012-08-02.

- Herrnstein, R. J. (1970). "On the law of effect." Journal of the Experimental Analysis of Behavior, 13, 243–266.

- Carlson, Neil; et al. (2007). Psychology: The Science of Behaviour. New Jersey, USA: Pearson Education Canada, Inc. p. 516.

- Nevin, John (1999). "Analyzing Thorndike's Law of Effect: The Question of Stimulus-Response Bonds". Journal of the Experimental Analysis of Behavior. p. 448.